Artificial Intelligence (AI) agents are the foundation of many intelligent systems which helps them to understand their environment, make decisions and take actions to achieve specific goals. These agents vary in complexity from simple reflex-based systems to advanced models that learn and adapt over time. Let's see different types of AI agents and their unique characteristics.

1. Simple Reflex Agents

Simple Reflex Agent Working

Simple Reflex Agent WorkingSimple reflex agents act solely on the current percept using predefined condition–action rules, without storing or considering any history. They are fast and easy to implement, making them suitable for fully observable, stable environments with clear and simple rules. However, they tend to fail in dynamic or partially observable situations because they lack memory and deeper reasoning capabilities.

Key Characteristics:

- Reactive: These agents respond immediately to inputs without consideration for prior events or predicting future outcomes.

- Limited Scope: They excel in predictable environments where tasks are straightforward and the relationships between actions and results are well understood.

- Quick Response: Since decisions are made based only on immediate input, it can react without delay.

- No Learning: These agents cannot improve or change their behavior based on past experiences.

When to Use: They are ideal in controlled, well-defined environments such as basic automation like home automation systems or real-time reactive systems like sensors or switches.

Example: Traffic light control systems that change signals based on fixed timing.

2. Model-Based Reflex Agents

Model-Based Reflex Agent Working

Model-Based Reflex Agent WorkingModel-based reflex agents enhance the simple reflex approach by maintaining an internal state or model of the world, that tracks aspects of the environment not directly observable at each moment. This enables them to deal with partial observability and dynamic changes more effectively, although their decisions are still largely reactive and dependent on the accuracy of the model they maintain.

Key Characteristics:

- Internal State: By maintaining an internal model of the environment, these agents can handle scenarios where some aspects are not directly observable thus it provides more flexible decision-making.

- Adaptive: They update their internal model based on new information which allows them to adapt to changes in the environment.

- Better Decision-Making: The ability to refer to the internal model helps agents make more informed decisions which reduces the risk of making impulsive or suboptimal choices.

- Increased Complexity: Maintaining an internal model increases computational demands which requires more memory and processing power to track changes in the environment.

When to Use: They are beneficial in situations where the environment is dynamic and not all elements can be directly observed at once. Autonomous driving, robotics and surveillance systems are good examples.

Example: Robot vacuum cleaners that map rooms and tracks cleaned areas.

3. Goal-Based Agents

Goal-Based Agents Working

Goal-Based Agents WorkingGoal-based agents select actions by considering future states relative to explicit goals. They are capable of planning sequences of actions to reach these goals rather than just reacting to the current state which enables more flexible and intelligent problem-solving. However, they require well-defined goals and effective planning algorithms to perform well in complex domains.

Key Characteristics:

- Goal-Oriented: They have explicit goals and make decisions based on how well their actions align with these objectives.

- Planning and Search: They often use planning algorithms that explore multiple possible actions to find the most effective sequence of steps that lead to their goal.

- Flexible: If conditions change or new information arises, it can re-plan and adjust their strategies to stay on track toward their objective.

- Future-Oriented: Unlike reflex agents,they think ahead and predict future outcomes to find the best course of action.

When to Use: They are important in applications that require strategic decision-making and planning such as robotics (pathfinding), project management (task scheduling) and AI in games (character decision-making).

Example: Logistics routing agents that find optimal delivery routes based on factors like distance and time. They continuously adjust to reach the most efficient route.

4. Utility-Based Agents

Utility-Based Agent Working

Utility-Based Agent WorkingUtility-based agents extend goal-based reasoning by considering not only whether a goal is met but also how valuable or desirable a particular outcome is. They use a utility function to quantify preferences and make trade-offs between competing objectives, enabling nuanced decision-making in uncertain or resource-limited situations. Designing an appropriate utility function is crucial for their effectiveness.

Key Characteristics:

- Multi-Criteria Decision Making: These agents fin multiple factors like cost, benefits, risk, time, etc to find the best possible course of action.

- Trade-Offs: They can make decisions by balancing competing goals and preferences often finding the best "compromise."

- Subjectivity: They are customizable to reflect subjective preferences or goals, making them more adjustable to individual or organizational needs.

- Increased Complexity: Finding utility functions for different factors can be computationally intensive and complex.

When to Use: They are ideal for tasks where multiple criteria need to be evaluated simultaneously such as financial planning, resource management or personal recommendation systems.

Example: Financial portfolio management agents that evaluate investments based on factors like risk, return and diversification operate by choosing options that provide the most value.

5. Learning Agents

Learning Agent Working

Learning Agent WorkingLearning agents improve their performance over time by learning from experience and updating their internal models, strategies or policies. They can adapt to changes in the environment and often outperform static agents in dynamic contexts. Learning may involve supervised, unsupervised or reinforcement learning techniques and these agents typically contain both a performance element (for acting) and a learning element (for improving future actions).

Key Characteristics:

- Adaptive Learning: It improve their decision-making through continuous feedback from their actions.

- Exploration vs. Exploitation: These agents balance exploring new actions that may lead to better outcomes with exploiting known successful strategies.

- Flexibility: They can adapt to a wide variety of tasks or environments by modifying their behavior based on new data.

- Generalization: It can apply lessons learned in one context to new, similar situations enhancing their versatility.

When to Use: They are well-suited for dynamic environments that change over time such as recommendation systems, fraud detection and personalized healthcare management.

Example: Customer service chatbots can improve response accuracy over time by learning from previous interactions and adapting to user needs.

6. Multi-Agent Systems (MAS)

Multi-Agent System Working

Multi-Agent System WorkingMulti-agent systems operate in environments shared with other agents, either cooperating or competing to achieve individual or group goals. These systems are decentralized, often requiring communication, negotiation or coordination protocols. They are well-suited to distributed problem solving but can be complex to design due to emergent and unpredictable behaviors. Types of multi-agent systems:

- Cooperative MAS: Agents work together toward shared objectives.

- Competitive MAS: Agents pursue individual goals that may conflict.

- Mixed MAS: Agents cooperate in some scenarios and compete in others.

Key Characteristics:

- Autonomous Agents: Each agent acts on its own based on its goals and knowledge.

- Interactions: Agents communicate, cooperate or compete to achieve individual or shared objectives.

- Distributed Problem Solving: Agents work together to solve complex problems more efficiently than they could alone.

- Decentralization: No central control, agents make decisions independently.

When to Use: They are ideal for decentralized environments like traffic control, robotics or large-scale simulations where agents need to collaborate or make decisions independently.

Example: A warehouse robot might use:

- Model-based reflexes for navigation

- Goal-based planning for task sequencing

- Utility-based decision-making for prioritizing tasks

- Learning capabilities for route optimization

7. Hierarchical agents

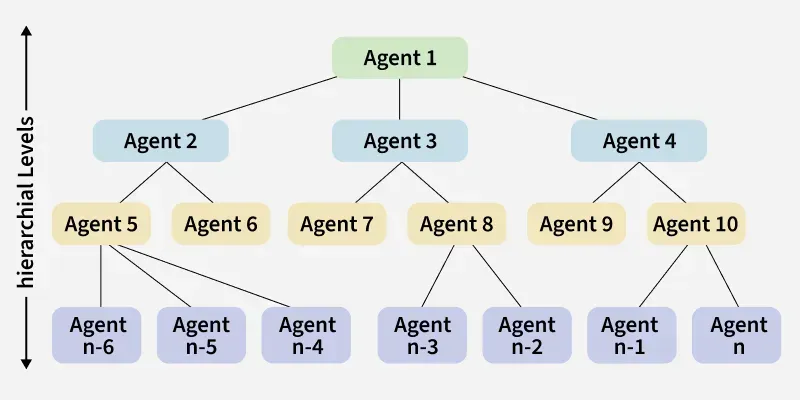

Hierarchical Agent Working

Hierarchical Agent WorkingHierarchical agents organize behavior into multiple layers such as strategic, tactical and operational. Higher levels make abstract decisions that break down into more specific subgoals for lower levels to execute. This structure improves scalability, reusability of skills and management of complex tasks, but requires designing effective interfaces between layers.

Key Characteristics:

- Structured Decision-Making: Decision-making is divided into different levels for more efficient task handling.

- Task Division: Complex tasks are broken down into simpler subtasks.

- Control and Guidance: Higher levels direct lower levels for coordinated action.

When to Use: They are useful in scenarios where tasks can be broken into distinct stages such as robotics or industrial automation.

Example: Drone delivery systems in which fleet management is done at top level and individual navigation at lower level.

Comparison of AI Agent Types

| Agent Type | Main Strength | Limitations | Best For | Example |

|---|

| Simple Reflex Agent | Instant reaction based on fixed rules | No memory or learning; fails in dynamic environments | Fully observable, stable and simple environments | Traffic light timers |

|---|

| Model-Based Reflex Agent | Handles partial observability with internal state | More computational demand; depends on model accuracy | Dynamic or partially observable environments | Robot vacuum cleaners |

|---|

| Goal-Based Agent | Plans ahead to achieve specific objectives | Needs clear goals and planning algorithms | Strategic tasks with defined goals | Logistics route planning |

|---|

| Utility-Based Agent | Balances multiple factors for best outcome | Requires complex utility functions | Multi-criteria decision-making | Financial portfolio management |

|---|

| Learning Agent | Improves over time via experience | Needs data and training time | Dynamic environments with changing conditions | AI chatbots |

|---|

| Multi-Agent System (MAS) | Distributed problem-solving with cooperation or competition | Complex interactions; unpredictable behaviors | Decentralized, multi-entity systems | Smart traffic control |

|---|

| Hierarchical Agent | Breaks complex tasks into levels for efficiency | Requires well-defined interfaces between layers | Large-scale, multi-level operations | Drone delivery management |

|---|

When to Use Each AI Agent Type

1. Simple Reflex Agent

- Environment is fully observable and predictable

- Tasks are repetitive with fixed rules

2. Model-Based Reflex Agent

- Some information about the environment is hidden but can be modeled

- Environment changes but follows predictable patterns

3. Goal-Based Agent

- Tasks require planning multiple steps ahead

- Clear goals are defined and can be measured

4. Utility-Based Agent

- Need to balance trade-offs like cost, time and risk

- Multiple objectives must be prioritized

5. Learning Agent

- Environment changes over time and the system must adapt

- Performance should improve with experience

6. Multi-Agent System (MAS)

- Multiple agents must work together or compete

- Problem-solving is decentralized and distributed

7. Hierarchical Agent

- Tasks can be split into strategic, tactical and operational levels

- Large-scale operations require coordination between layers

Explore

Introduction to AI

AI Concepts

Machine Learning in AI

Robotics and AI

Generative AI

AI Practice