Importance of Convolutional Neural Network | ML

Last Updated: 13 Aug, 2025

Convolutional Neural Networks (CNNs) are a specialized type of neural network that use convolution operations to extract features for classification and prediction, especially in tasks involving visual data such as images and videos.

The following properties explain why CNNs are important:

1. Weight Sharing and Local Spatial Coherence

CNNs take advantage of the spatial structure in data. Instead of learning separate parameters for each input feature, convolutional layers use a set of shared weights (kernels) that slide across the input image. This means the same feature detector is used at every position, drastically reducing the total number of parameters.

- Benefit: Far fewer parameters to store and train, which lowers memory use and can make small CNNs practical on modest hardware, including machines without a dedicated GPU.
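The sliding-kernel idea can be sketched in plain Python. This is a minimal illustration, not a production implementation: the function names, the 4x4 image and the 2x2 kernel are all hypothetical, and, like most deep learning libraries, the sketch computes cross-correlation (the kernel is not flipped).

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                kernel[i][j] * image[r + i][c + j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def conv_weight_count(kh, kw):
    # One shared kernel: the count does not depend on the image size.
    return kh * kw


def dense_weight_count(in_h, in_w, out_h, out_w):
    # A fully connected layer needs one weight per (input, output) pair.
    return (in_h * in_w) * (out_h * out_w)


# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 2x2 kernel that responds to a left-to-right increase.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]

# Weights (biases ignored) for a 28x28 input mapped to a 26x26 output:
print(conv_weight_count(3, 3))             # 9
print(dense_weight_count(28, 28, 26, 26))  # 529984
```

The same four kernel weights are reused at every position, so the edge is found in each row without any position-specific parameters.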

2. Memory Efficiency

Because of weight sharing and the nature of convolutions, CNNs generally require far fewer parameters than fully connected neural networks.

- Example: On the MNIST dataset (28x28 grayscale images, 10 classes), a small CNN with one convolutional layer, pooling and 10 output nodes can use only a few hundred parameters, whereas a fully connected network with a single modest hidden layer can require upwards of 19,000 parameters for the same task. The exact counts depend on the layer sizes chosen.

- Benefit: Lower memory requirements make CNNs suitable for devices with limited resources, and having fewer parameters to fit also tends to reduce the risk of overfitting.
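The parameter counts in the example can be checked with simple arithmetic. The layer sizes below are assumptions chosen for illustration (they are not specified in the text): a fully connected network with one hidden layer of 24 units, and a CNN with a single 5x5 filter followed by 4x4 max pooling and a 10-way output layer.

```python
def dense_params(n_in, n_out):
    # Weights plus one bias per output unit.
    return n_in * n_out + n_out


def conv_params(kh, kw, in_channels, out_channels):
    # Each filter has kh * kw * in_channels weights plus one bias.
    return (kh * kw * in_channels + 1) * out_channels


# Hypothetical fully connected network: 784 -> 24 -> 10.
fc_total = dense_params(28 * 28, 24) + dense_params(24, 10)

# Hypothetical CNN: one 5x5 filter (no padding) -> 4x4 max pooling -> 10 outputs.
conv_out = 28 - 5 + 1   # 24x24 feature map
pooled = conv_out // 4  # 6x6 after pooling (pooling has no parameters)
cnn_total = conv_params(5, 5, 1, 1) + dense_params(pooled * pooled, 10)

print(fc_total)   # 19090
print(cnn_total)  # 396
```

Other layer sizes give different totals, so these numbers only show that the ranges quoted above are plausible, not that the two networks reach the same accuracy.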

3. Robustness to Local Variations

CNNs are specifically designed to extract features from different regions of an image, making them robust to small shifts and variations in the input.

- Example: If a fully connected network is trained for face recognition using only head-shot images, it may fail when presented with full-body images, because each of its weights is tied to a fixed pixel position. A CNN is more likely to still recognize the face, because it detects local spatial features wherever they appear rather than relying on exact positions.

Equivariance refers to the property where a transformation applied to the input results in the same transformation in the output. In CNNs, convolution operations are equivariant to translations: if an object shifts in the image, its feature map also shifts correspondingly.

- Mathematical Formulation: If f is a convolution operation and g is a translation, then f(g(x)) = g(f(x)). Shifting the input and then convolving gives the same result as convolving and then shifting.

- Benefit: A feature is detected wherever it appears in the input, which makes predictions more consistent.
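The identity f(g(x)) = g(f(x)) can be checked numerically. The sketch below assumes wrap-around (circular) borders so that the equality is exact; with zero padding or no padding it holds only away from the image edges. The helper names, the 5x5 image and the 3x3 kernel are hypothetical.

```python
def circular_shift(grid, dy, dx):
    """Translate a 2D grid by (dy, dx), wrapping around the borders."""
    h, w = len(grid), len(grid[0])
    return [[grid[(r - dy) % h][(c - dx) % w] for c in range(w)] for r in range(h)]


def circular_conv2d(image, kernel):
    """Cross-correlate image with kernel using wrap-around borders."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(
                kernel[i][j] * image[(r + i) % h][(c + j) % w]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(w)
        ]
        for r in range(h)
    ]


# Hypothetical integer image and edge-style kernel (integers keep equality exact).
image = [
    [1, 2, 0, 0, 3],
    [0, 1, 4, 0, 0],
    [2, 0, 1, 0, 5],
    [0, 3, 0, 1, 0],
    [1, 0, 0, 2, 1],
]
kernel = [
    [1, 0, -1],
    [2, 0, -2],
    [1, 0, -1],
]

# f(g(x)): translate first, then convolve.
shift_then_conv = circular_conv2d(circular_shift(image, 1, 2), kernel)
# g(f(x)): convolve first, then translate the feature map.
conv_then_shift = circular_shift(circular_conv2d(image, kernel), 1, 2)

print(shift_then_conv == conv_then_shift)  # True
```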

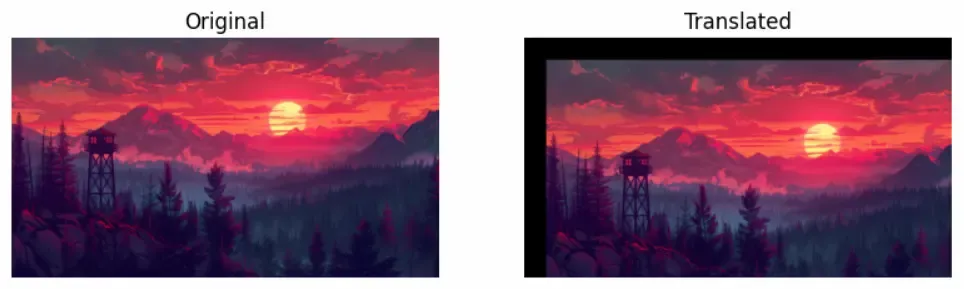

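CNNs exhibit a degree of invariance to common geometric transformations. Pooling layers make them approximately invariant to small translations, while robustness to rotation and scaling is not built into standard convolutions and is typically learned from varied or augmented training data. This lets a trained CNN recognize objects across moderate changes in position, orientation or size within the image.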

- Translation Invariance Example: A CNN can detect the same object even if it's shifted to different parts of the image.

Original vs Translated Image Example

- Rotation Invariance Example: A CNN trained on rotated examples can still correctly identify an object when it is rotated, improving versatility for real-world applications.

Original vs Rotated Image Example
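A toy check makes the difference between the two cases concrete. This is a minimal sketch under stated assumptions: wrap-around borders, a single hand-written kernel, and global max pooling as the only pooling step; all names and values are hypothetical. In this setup the pooled response is unchanged by translation, but a single fixed kernel does not respond the same way to a rotated input, which is why robustness to rotation usually has to come from multiple learned filters or from rotated training examples.

```python
def circular_conv2d(image, kernel):
    """Cross-correlate image with kernel using wrap-around borders."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(
                kernel[i][j] * image[(r + i) % h][(c + j) % w]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(w)
        ]
        for r in range(h)
    ]


def circular_shift(grid, dy, dx):
    """Translate a 2D grid by (dy, dx), wrapping around the borders."""
    h, w = len(grid), len(grid[0])
    return [[grid[(r - dy) % h][(c - dx) % w] for c in range(w)] for r in range(h)]


def rotate90(grid):
    """Rotate a 2D grid by 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def global_max_pool(feature_map):
    """Keep only the strongest response, discarding its position."""
    return max(max(row) for row in feature_map)


# Hypothetical image with a vertical edge, and a vertical-edge kernel.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [
    [-1, 1],
    [-1, 1],
]

original = global_max_pool(circular_conv2d(image, kernel))
translated = global_max_pool(circular_conv2d(circular_shift(image, 1, 2), kernel))
rotated = global_max_pool(circular_conv2d(rotate90(image), kernel))

print(original, translated, rotated)  # 2 2 0
```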

Properties of CNN and their Importance

| Property | Why it Matters | CNN Advantage over Traditional Networks |
|---|---|---|
| Weight Sharing | Reduces parameters | Faster computation, less memory usage |
| Local Feature Extraction | Leverages spatial coherence | Robust to local variations |
| Equivariance | Predictable output changes | Reliable under input transformations |
| Memory Efficiency | Fewer model parameters | Lower risk of overfitting |
