I’ve been playing around with Bayesian Probabilistic PCA. Conceptually, the model is very simple, but the main problem with it is that while priors take care of location and scale invariance, by default the solutions are only unique up to an orthonormal rotation.

There has been a fair bit of discussion on this topic in this forum before, most notably in here which even resulted in an example in the library.

The conclusion reached there on the matter was from Chartl who proposed

Set loadings to lower-triangular (no more rotation invariance)

Set diagonal to positive (no more sign invariance)

Order diagonal (no more permutation invariance)

Thing is, this approach does no longer work for k>3 (which is easy to verify by setting k=5 in the example notebook).

I’ve been racking my brain and trying to find sources for this for the past few days and I think I figured it out, but I would like someone else to verify my reasoning. Hence the post.

The idea of forcing the matrix lower-triangular with positive diagonal elements seems to trace back to Geweke, Zhou 1996: “Measuring the Pricing Error of the Arbitrage Pricing Theory”. In reading the paper itself, however, there is an important yet easy to overlook caveat to their reasoning: namely, they assume that the weight matrix (f in their notation) is semi-orthonormal i.e. f @ f.T = I and that assumption is required in the theorem they use to show rotational invariance (end of pg10).

Now, from what I remember from my university linear algebra, to get an orthonormal matrix, you can start with any vector, then pick the next vector from the orthogonal space of the first vector as the second, then one from the orthogonal space of first two vectors as third and so on, i.e. you have one dimension of freedom less on each pick, effectively removing k(k-1)/2 degrees of freedom. Now forcing the matrix constructed that way to also be lower diagonal removes an additional k(k-1)/2 degrees. So it seems to me that instead of fixing k(k-1)/2 values for identification, one should actually fix twice that i.e. k(k-1).

I tested the library example with the following W construction:

def makeW2(d, k, dim_names):

z = pm.HalfCauchy("W_z", 1.0, dims="latent_columns")

L = pm.Cauchy("W_b", 0.0, 1.0, shape=(d-k,k), dims=("packed_dim",dim_names[1]))

W = pm.Deterministic("W", pt.concatenate([pt.diag(z),L],axis=0), dims=dim_names)

print(W.shape.eval(),L.shape.eval())

return W

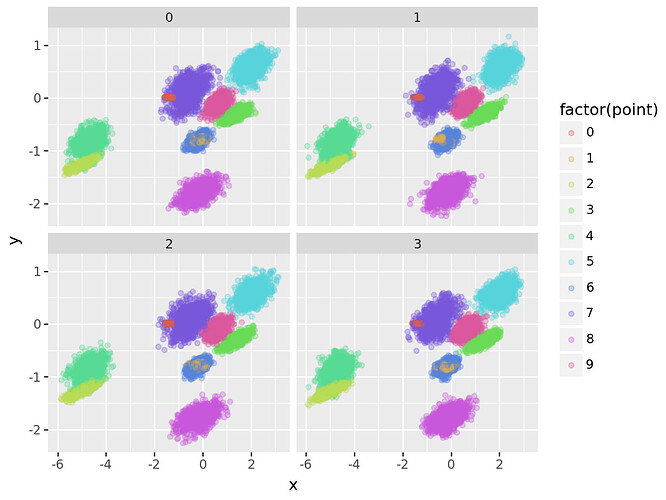

and that seems to work for k=4, k=5, meaning the chains converge and average rhat is 1.01. So the logic seems sound.

Granted, starting with a diagonal matrix in the weights, while identifying the model probably also skews the probability space by forcing first 3 questions all on separate factors. So it is definitely not ideal. So the question I have is: GZ96 seem to assume you can just sample orthonormal matrices without (at least seemingly) elaborating on how. Is there something obvious I am missing?