Apache Hive Installation With Derby Database And Beeline

Last Updated: 29 Jun, 2021

Apache Hive is a data warehousing and ETL (Extract, Transform, and Load) tool built on top of Hadoop. It lets you query and manage large datasets stored in Hadoop using a SQL-like language instead of hand-written MapReduce programs. Hive is written in Java; it was originally developed at Facebook and later became an Apache Software Foundation project, aimed largely at people who are not very comfortable with Java. Hive uses the HiveQL language, whose syntax is very similar to SQL. Client applications for Hive can be written in C++, Java, and Python, among other languages. With Hive, we can query petabytes of data using SQL-style statements.

Derby is an open-source relational database, also an Apache project, that Hive uses by default for its metastore. In industry, Derby is typically used only for testing; for deployment, a MySQL-backed metastore is commonly used instead.

Prerequisite: Hadoop should be pre-installed.

Step 1: Download the Hive 3.1.2 binary release (apache-hive-3.1.2-bin.tar.gz).

Step 2: Place the downloaded tar file at your desired location (in our case, the user's home directory, /home/dikshant).

Step 3: Now extract the tar file with the help of the command shown below.

tar -xvzf apache-hive-3.1.2-bin.tar.gz
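If you would rather leave the archive where it was downloaded and unpack it somewhere else, tar's -C option extracts into a chosen directory. The sketch below wraps that in a small helper; the function name extract_hive is hypothetical, and the paths in the example call are the ones used in this article.

```shell
# Hypothetical helper: unpack a Hive tarball into a target directory.
# Usage: extract_hive <archive.tar.gz> <destination-dir>
extract_hive() {
    archive="$1"
    dest="$2"
    # Fail early if the archive is missing.
    [ -f "$archive" ] || { echo "archive not found: $archive" >&2; return 1; }
    mkdir -p "$dest" || return 1
    # -x extract, -z gunzip, -f archive file, -C switch to $dest first.
    tar -xzf "$archive" -C "$dest"
}

# Example call (paths as used in this article):
# extract_hive apache-hive-3.1.2-bin.tar.gz /home/dikshant
```

The helper only defines a function; nothing is extracted until you call it.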

Step 4: Now add the Hive path to the .bashrc file. Use the command below to open it (sudo is not needed for a file in your own home directory).

gedit ~/.bashrc

HIVE path (add the correct path and hive version name)

export HIVE_HOME="/home/dikshant/apache-hive-3.1.2-bin"

export PATH=$PATH:$HIVE_HOME/bin

Place the HIVE path inside this .bashrc file (don't forget to save, press CTRL + S). Check lines 122 and 123 in the below image for reference.
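The same edit can also be made without opening an editor. The following is a minimal sketch, assuming a POSIX-style shell; the function name add_hive_env is hypothetical, and it skips the append when a HIVE_HOME export is already present so that running it twice does not duplicate the lines.

```shell
# Hypothetical helper: append the Hive variables to a shell rc file
# unless a HIVE_HOME export is already present.
# Usage: add_hive_env <hive-home> [rc-file]
add_hive_env() {
    hive_home="$1"
    rc_file="${2:-$HOME/.bashrc}"
    # Skip if an earlier run (or a manual edit) already added HIVE_HOME.
    if grep -q '^export HIVE_HOME=' "$rc_file" 2>/dev/null; then
        echo "HIVE_HOME already set in $rc_file"
        return 0
    fi
    {
        echo "export HIVE_HOME=\"$hive_home\""
        # Single quotes keep $PATH and $HIVE_HOME unexpanded in the file.
        echo 'export PATH=$PATH:$HIVE_HOME/bin'
    } >> "$rc_file"
}

# Example (path as used in this article), then reload the file so the
# current terminal picks up the new variables:
# add_hive_env /home/dikshant/apache-hive-3.1.2-bin
# source ~/.bashrc
```

Whichever way the lines are added, a terminal that was already open will not see the new variables until .bashrc is reloaded or a new terminal is started.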

Step 5: Now add the properties below to the core-site.xml file. We can find the file in the /home/{user-name}/hadoop/etc/hadoop directory. For simplicity, we have renamed the hadoop-3.1.2 folder to just hadoop.

# to change the directory

cd /home/dikshant/hadoop/etc/hadoop/

# to list the directory content

ls

# to open and edit core-site.xml

sudo gedit core-site.xml

Properties (do not remove the previously added Hadoop properties; replace dikshant in the property names with your own user name)

<property>

<name>hadoop.proxyuser.dikshant.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.dikshant.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.server.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.server.groups</name>

<value>*</value>

</property>

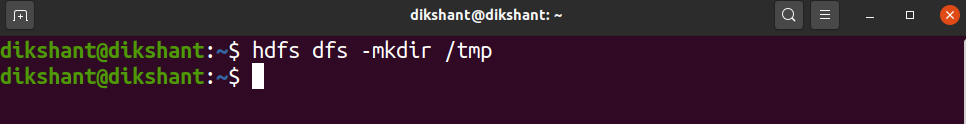
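Because the user name is embedded in the property names, it is easy to mistype when adapting the block above. As a rough sketch, the helper below prints the groups and hosts properties for a given user; the function name proxyuser_props is hypothetical, and the output still has to be pasted by hand inside the <configuration> element of core-site.xml.

```shell
# Hypothetical helper: print the two proxyuser properties for one user.
# Usage: proxyuser_props <user-name>
proxyuser_props() {
    user="$1"
    for kind in groups hosts; do
        printf '<property>\n'
        printf '  <name>hadoop.proxyuser.%s.%s</name>\n' "$user" "$kind"
        printf '  <value>*</value>\n'
        printf '</property>\n'
    done
}

# Example: print the block for the current login user.
# proxyuser_props "$(whoami)"
```

Calling it once with your own user name and once with server reproduces the four properties listed in this step.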

Step 6: Now create a directory with name /tmp in HDFS with the help of the below command.

hdfs dfs -mkdir /tmp

Step 7: Use the below-given command to create a warehouse, hive, and user directory which we will use to store our tables and other data.

hdfs dfs -mkdir /user

hdfs dfs -mkdir /user/hive

hdfs dfs -mkdir /user/hive/warehouse

Now check whether the directories were created successfully with the help of the command below.

hdfs dfs -ls -R / # the -R switch makes -ls list the HDFS root (/) recursively

Step 8: Now give read, write, and execute permission on these directories to all users with the help of the commands below.

hdfs dfs -chmod ugo+rwx /tmp

hdfs dfs -chmod ugo+rwx /user/hive/warehouse
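Steps 6 to 8 can be collected into one small script. The sketch below is one possible arrangement, not part of the original procedure: it uses the -p option of hdfs dfs -mkdir, which behaves much like Unix mkdir -p and creates parent directories along the path, and it adds a hypothetical DRY_RUN switch so the commands can be previewed before anything touches HDFS. The function names run and setup_hive_dirs are made up for this example.

```shell
# Hypothetical wrapper: with DRY_RUN=1 a command is printed, not executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: create the HDFS directories from Steps 6-8.
setup_hive_dirs() {
    # -p also creates the parents /user and /user/hive along the way.
    run hdfs dfs -mkdir -p /tmp || return 1
    run hdfs dfs -mkdir -p /user/hive/warehouse || return 1
    # Open both directories to all users, as in Step 8.
    run hdfs dfs -chmod ugo+rwx /tmp || return 1
    run hdfs dfs -chmod ugo+rwx /user/hive/warehouse || return 1
}

# Preview the commands first, then run them for real:
# DRY_RUN=1 setup_hive_dirs
# setup_hive_dirs
```

The real run requires the Hadoop daemons to be up and the hdfs command to be on the PATH.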

Step 9: Go to the /apache-hive-3.1.2-bin/conf directory and rename hive-default.xml.template to hive-site.xml. Then go to line 3215 of this file and remove the offending characters from the description on that line. They cause an error while initializing the Derby database, and since they sit inside a description they are not otherwise important to us.
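To find the problem on line 3215 without scrolling through the file, the line can be printed and searched from the terminal. The sketch below assumes that the offending text is a numeric character reference (the &#NN; form, which XML 1.0 rejects when it encodes most control characters); that assumption, and the function name find_char_refs, are not from the original article, so check the output against your own copy before deleting anything. The example also copies the template rather than renaming it, which keeps the original file around.

```shell
# Hypothetical helper: list numeric character references (the &#NN; form)
# in a file, with line numbers, so a suspicious one can be located.
# Usage: find_char_refs <file>
find_char_refs() {
    grep -n '&#[0-9][0-9]*;' "$1"
}

# Example, run from the Hive conf directory:
# cp hive-default.xml.template hive-site.xml   # copy keeps the template
# sed -n '3215p' hive-site.xml                 # print the line in question
# find_char_refs hive-site.xml
```

If the search prints nothing, the assumption does not hold for your file and the line has to be inspected by eye.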


Step 10: Now initialize the Derby database, because Hive uses Derby by default to store its metastore. Use the command below (make sure you are in the apache-hive-3.1.2-bin directory).

bin/schematool -dbType derby -initSchema

Step 11: Now launch the HiveServer2 using the below command.

hiveserver2

Step 12: In a different terminal tab, run the commands below to launch the Beeline command shell.

cd /home/dikshant/apache-hive-3.1.2-bin/bin/

beeline -n dikshant -u jdbc:hive2://localhost:10000

(If you face any problem, try using hadoop instead of your user name.)
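HiveServer2 can take a while before it starts listening on port 10000, and connecting too early may fail with a connection error. As a hedged sketch, the helper below polls the port before Beeline is launched. It relies on bash's /dev/tcp redirection, so it needs bash rather than plain sh; the function name wait_for_port and the retry count are assumptions made for this example.

```shell
# Hypothetical helper: poll until a TCP port accepts connections.
# Uses bash's /dev/tcp redirection, so run it with bash, not plain sh.
# Usage: wait_for_port <host> <port> [max-tries]
wait_for_port() {
    host="$1"
    port="$2"
    tries="${3:-30}"
    while [ "$tries" -gt 0 ]; do
        # The subshell opens a connection and closes it again on exit.
        if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}

# Example: try for about 60 seconds, then connect as in Step 12.
# wait_for_port localhost 10000 60 && \
#     beeline -n dikshant -u jdbc:hive2://localhost:10000
```

The function returns a non-zero status if the port never opens, so the beeline command in the example is skipped in that case.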

Now we have successfully installed and configured Apache Hive with the Derby database.

Step 13: Let's use the show databases command to check whether it is working.

show databases;
