LangChain is a framework designed to help developers build applications powered by large language models (LLMs). It provides tools for connecting LLMs with data, APIs and external systems to create intelligent, context-aware workflows.

Importance of Integrations in Workflows

- Access to External Data: Integrations allow LangChain to fetch real-time or domain-specific data from APIs, databases or documents.

- Enhanced Functionality: By connecting to tools and services, workflows can perform complex tasks beyond text generation.

- Automation: Integrations enable automated processes like sending emails, updating records or triggering actions based on LLM outputs.

- Scalability: Workflows can handle larger tasks efficiently by leveraging external systems for storage, computation or retrieval.

- Improved Accuracy: Integrations with vector databases or knowledge bases help LLMs provide more relevant and precise responses.

- Seamless User Experience: Combining multiple systems in one application creates smoother, end-to-end experiences for users.

- Debugging and Monitoring: Integrated tracing and logging tools help track workflow execution and identify issues quickly.

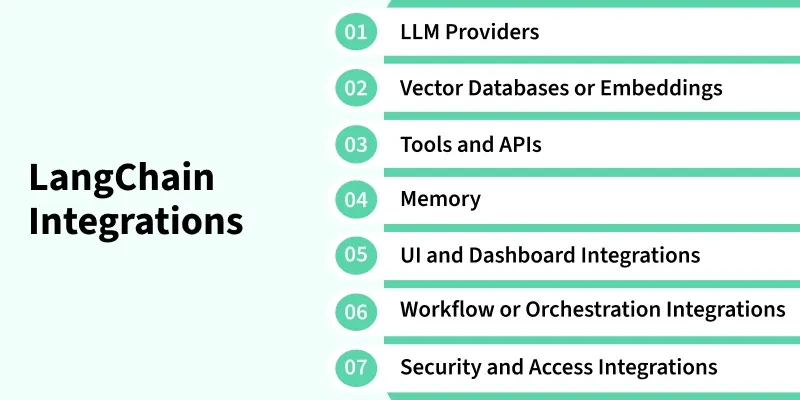

Types of LangChain Integrations

1. LLM Providers: Connect to models from providers such as OpenAI, Cohere or Hugging Face for text generation, summarization and more.

- OpenAI API: Connect LangChain to OpenAI models for advanced text generation, summarization and conversation.

- Cohere, Anthropic or Hugging Face Models: Use alternative LLM providers to balance cost, performance and domain-specific capabilities.

- Custom LLMs: Integrate your own trained or fine-tuned models for specialized tasks and proprietary data.

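```python
from langchain_openai import ChatOpenAI

# Reads the API key from the OPENAI_API_KEY environment variable
llm = ChatOpenAI(model="gpt-4", temperature=0.7)

response = llm.invoke("Summarize LangChain in one sentence.")

# invoke() returns an AIMessage; the generated text is in .content
print(response.content)
```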

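Here, we have used the OpenAI API through the langchain_openai package; ChatOpenAI reads the key from the OPENAI_API_KEY environment variable.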

Refer to this article: Fetching OpenAI API Key

2. Vector Databases and Embeddings: Use Pinecone, Weaviate or FAISS to store embeddings and retrieve the most relevant ones for semantic search.

- Data Retrieval Integrations: Access and retrieve information efficiently for LangChain workflows.

- Vector Stores: Store and search embeddings for semantic similarity and quick retrieval.

- Document Loaders: Import data from PDFs, websites or databases to provide context-aware responses.

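```python
import os

from langchain_openai import OpenAIEmbeddings
from langchain_pinecone import PineconeVectorStore

# PineconeVectorStore reads the key from PINECONE_API_KEY; the index
# "langchain-demo" must already exist with a dimension matching the embeddings
os.environ.setdefault("PINECONE_API_KEY", "YOUR_PINECONE_KEY")

embeddings = OpenAIEmbeddings()

index = PineconeVectorStore.from_texts(
    ["LangChain enables LLM apps"], embeddings, index_name="langchain-demo"
)

# Return the stored text most similar to the query
print(index.similarity_search("What does LangChain enable?", k=1))
```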

Here, we have used Pinecone.

Refer to this article: Using and Setting Vector Database

3. Tools and APIs: Integrate external tools or APIs to perform actions, fetch data or automate tasks.

- Tool Integrations: Extend LangChain’s capabilities by connecting to external tools and services.

- Python Functions and Scripts: Execute custom Python code directly within workflows.

- APIs for Automation: Integrate APIs to fetch data, trigger actions or automate tasks.

- Agents with Tool Use: Enable intelligent agents to use tools and perform multi-step reasoning.

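```python
import requests
from langchain_core.tools import tool


@tool
def get_weather(city: str) -> str:
    """Return a one-line current weather report for the given city."""
    # wttr.in's format=3 option returns a compact one-line summary
    response = requests.get(f"https://wttr.in/{city}?format=3", timeout=10)
    response.raise_for_status()
    return response.text
```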

Here, we have wrapped an external weather API as a LangChain tool that an agent can call.

4. Memory: Manage context using in-memory or persistent storage like Redis, SQLite or Pinecone.

- In-Memory vs Persistent Storage: In-memory storage keeps short-term context for quick access while persistent storage retains data across sessions.

- Redis, SQLite or Pinecone Integration: Use Redis for fast caching, SQLite for local persistence or Pinecone for vector-based long-term memory.

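```python
from langchain_community.chat_message_histories import RedisChatMessageHistory

# Messages for this session are stored in Redis and expire after 600 seconds
history = RedisChatMessageHistory(
    session_id="user-123", url="redis://localhost:6379", ttl=600
)

history.add_user_message("Hello LangChain!")

print(history.messages)
```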

Here, we have used Redis.

5. UI and Dashboard Integrations: Build interactive interfaces and monitor workflows effectively.

- Streamlit or Gradio Integration: Create user-friendly web apps to interact with LangChain workflows.

- LangSmith or Tracing Dashboards: Visualize and trace workflow execution for debugging and optimization.

```python
import streamlit as st
from langchain_openai import ChatOpenAI

st.title("LangChain Chat")

q = st.text_input("Ask:")

if q:
    # Send the user's question to the model and render the reply
    llm = ChatOpenAI(model="gpt-3.5-turbo")
    st.write(llm.invoke(q).content)
```

Here, we have used Streamlit. Launch the app from a terminal with streamlit run followed by the script's filename.
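For the LangSmith bullet, tracing is typically switched on through environment variables set before any LangChain objects run. A minimal sketch, assuming a LangSmith account; the key and project name are placeholders, and newer releases may also accept LANGSMITH_-prefixed equivalents of these variables.

```python
import os

# Turn on LangSmith tracing for LangChain calls made in this process
os.environ["LANGCHAIN_TRACING_V2"] = "true"
# Placeholder credentials and project name (replace with your own)
os.environ["LANGCHAIN_API_KEY"] = "YOUR_LANGSMITH_KEY"
os.environ["LANGCHAIN_PROJECT"] = "langchain-integrations-demo"
```

Runs made after these variables are set should then appear under the named project in the LangSmith dashboard.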

6. Workflow or Orchestration Integrations: Coordinate multiple components and manage complex processes.

- Chaining LLMs with Pipelines: Connect multiple LLMs and tools in a sequential or parallel workflow.

- Event-Driven and Async Workflows: Handle asynchronous tasks and trigger actions based on events.

```python
import asyncio

from langchain_openai import ChatOpenAI


async def run_async():
    llm = ChatOpenAI(model="gpt-4")
    # ainvoke() awaits the model call without blocking the event loop
    result = await llm.ainvoke("Explain async workflows in LangChain.")
    print(result.content)


asyncio.run(run_async())
```

Here, we have illustrated an async workflow.

7. Security and Access Integrations: Protect workflows and control access to sensitive data.

- API Key Management: Securely manage and store API credentials for LLMs and services.

- Authentication and Permissions: Control user access and enforce proper authorization for workflows.

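```python
import hmac
import os


def verify_user(token: str) -> None:
    expected = os.getenv("ACCESS_TOKEN")
    # Deny access when no token is configured, and compare in constant time
    if not expected or not token or not hmac.compare_digest(token.encode(), expected.encode()):
        raise PermissionError("Unauthorized access!")
```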

Here, we have illustrated a simple token-based authentication check.
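Beyond a single shared token, authorization is often expressed as roles mapped to permissions and checked before a tool or workflow step runs. The sketch below is plain Python and uses no LangChain API; ROLE_PERMISSIONS, requires and update_record are hypothetical names, and a production system would load roles from an identity provider rather than a hard-coded table.

```python
from functools import wraps

# Hypothetical role table mapping each role to the permissions it grants
ROLE_PERMISSIONS = {"admin": {"read", "write"}, "viewer": {"read"}}


def requires(permission):
    """Decorator that checks the caller's role before running the wrapped step."""
    def decorator(func):
        @wraps(func)
        def wrapper(role, *args, **kwargs):
            if permission not in ROLE_PERMISSIONS.get(role, set()):
                raise PermissionError(f"Role '{role}' lacks '{permission}' permission")
            return func(*args, **kwargs)
        return wrapper
    return decorator


@requires("write")
def update_record(record_id: int, value: str) -> str:
    # Hypothetical workflow action that should only run for authorized roles
    return f"record {record_id} set to {value!r}"


print(update_record("admin", 7, "ok"))  # record 7 set to 'ok'
```

Calling update_record("viewer", 7, "ok") raises PermissionError, because the viewer role only grants read access.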

Applications

Some of the applications of LangChain Integrations are:

- Intelligent Chatbots and Virtual Assistants: Combine LLMs with APIs, knowledge bases and tools to create conversational agents that can perform tasks.

- Automated Data Retrieval and Analysis: Fetch and process data from PDFs, websites, databases or APIs for summaries, insights or reports.

- Workflow Automation: Use agents and tool integrations to automate multi-step business processes or repetitive tasks.

- Semantic Search and Question Answering: Vector databases and embeddings enable LLMs to answer queries with context-aware, accurate results.

- Custom Applications: Fine-tuned or custom LLMs can be integrated for domain-specific applications in healthcare, finance, education, etc.

- Monitoring and Debugging AI Workflows: LangSmith traces workflow execution for debugging, while Streamlit and Gradio provide interfaces for interacting with and visualizing workflow outputs.

Limitations

Some of the limitations of LangChain Integrations are:

- Complexity: Integrating multiple tools, APIs and memory systems can make workflows difficult to design and maintain.

- Performance Overhead: Chaining multiple LLM calls or retrieving data from external sources can slow down response times.

- Cost: Frequent API calls, LLM usage and vector store operations may increase operational costs.

- Data Privacy and Security: External integrations may expose sensitive data if not properly secured or compliant with regulations.

- Dependency on External Services: Downtime or changes in external APIs or databases can break workflows.
