Caching - System Design Concept

Last Updated: 20 Dec, 2024

Caching is a system design concept that involves storing frequently accessed data in a location that is easily and quickly accessible. The purpose of caching is to improve the performance and efficiency of a system by reducing the time it takes to retrieve that data.

What is Caching?

Let's imagine a library where books are stored on shelves. Retrieving a book from a shelf takes time, so a librarian decides to keep a small table near the entrance. This table is like a cache, where the librarian places the most popular or recently borrowed books.


Now, when someone asks for a frequently requested book, the librarian checks the table first. If the book is there, it's quickly provided. This saves time compared to going to the shelves each time. The table acts as a cache, making popular books easily accessible.

- The same thing happens in computer systems: accessing data from primary memory (RAM) is much faster than accessing data from secondary memory (disk).

- A cache acts as a local store for data, and retrieving data from this local, temporary storage is faster than retrieving it from the database.

- Think of it as short-term memory that has limited space but is fast and holds the most recently accessed items.

Why can't you store all the data in the cache?

Caches have many benefits, but that doesn't mean we should store all of our data in cache memory for faster access. We can't do this for several reasons:

- Cache hardware (such as RAM) is much more expensive per gigabyte than the storage behind a normal database.

- Search time can also increase if you store huge amounts of data in the cache.

- A cache is typically volatile storage, meaning data is lost if the system crashes or restarts. For critical and long-term data, storing it only in the cache would risk data loss.

- In short, a cache should hold the data that is most likely to be requested in the near future.

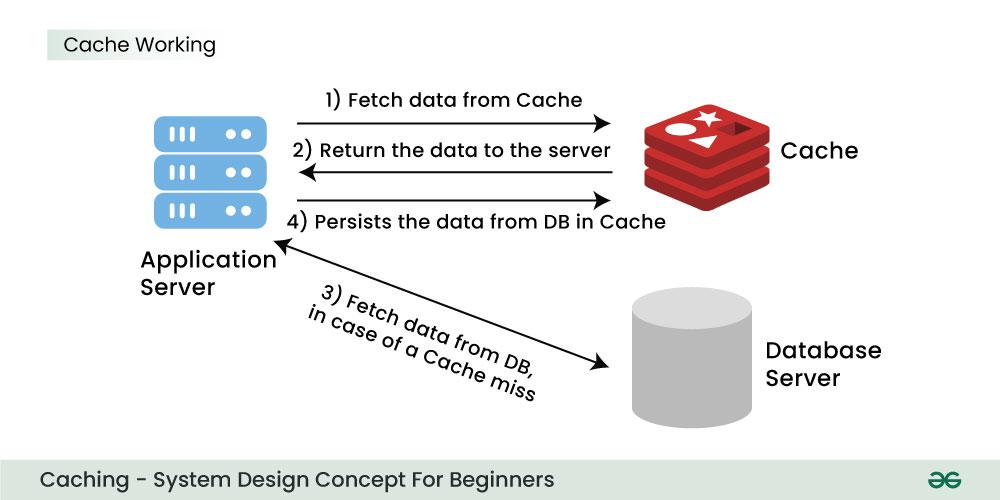

How Does Cache Work?

Typically, a web application stores its data in a database. When a client requests some data, it is fetched from the database and then returned to the user. Reading from the database requires network calls and I/O operations, which are time-consuming. A cache reduces the number of calls to the database and speeds up the system.

Let's understand how a cache works with the help of an example:

Take Twitter as an example. When a tweet goes viral, a huge number of clients request the same tweet. Twitter is a gigantic website with millions of users, and it is inefficient to read the same data from disk for such a large volume of requests.

Here is how using cache helps to resolve this problem:

- To reduce the number of calls to the database, we can use cache and the tweets can be provided much faster.

- In a typical web application, we can add an application server cache, using an in-memory store like Redis alongside our application server.

- The first time a request is made, the application has to call the database to process the query. This is known as a cache miss.

- Before returning the result to the user, the application saves it in the cache.

- The second time a user makes the same request, the application checks the cache first to see whether the result for that request is already cached.

- If it is, the result is returned from the in-memory store. This is known as a cache hit.

- The response time for the second time request will be a lot less than the first time.
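The read path described above (check the cache, fall back to the database on a miss, save the result) is often called the cache-aside pattern. Below is a minimal Python sketch of it, assuming a plain dictionary in place of Redis and a hypothetical `SlowDatabase` class standing in for the real database; all names are illustrative only.

```python
import time

# Hypothetical stand-in data: maps tweet IDs to tweet text.
FAKE_DB = {"tweet:42": "Hello, world!"}


class SlowDatabase:
    """A toy database that counts how many queries reach it."""

    def __init__(self, rows, delay_seconds=0.05):
        self.rows = rows
        self.delay_seconds = delay_seconds
        self.queries = 0

    def get(self, key):
        self.queries += 1
        time.sleep(self.delay_seconds)  # simulate network + disk I/O
        return self.rows.get(key)


class CachedReader:
    """Cache-aside reads: check the in-memory cache first, fall back to the database."""

    def __init__(self, db):
        self.db = db
        self.cache = {}  # stands in for an in-memory store such as Redis
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.cache:
            self.hits += 1           # cache hit: no database call
            return self.cache[key]
        self.misses += 1             # cache miss: query the database
        value = self.db.get(key)
        self.cache[key] = value      # save the result before returning it
        return value


db = SlowDatabase(FAKE_DB)
reader = CachedReader(db)
for _ in range(1000):                # a viral tweet requested 1000 times
    reader.get("tweet:42")
print(reader.misses, reader.hits, db.queries)  # 1 999 1
```

Only the first request pays the simulated database delay; the other 999 are answered from memory.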

Types of Cache

In general, there are four types of cache:

1. Application Server Cache

An Application Server Cache is a storage layer within an application server that temporarily holds frequently accessed data, so it can be quickly retrieved without needing to go back to the main database each time. This helps applications run faster by reducing the load on the database and speeding up response times for users.

For example:

When an app frequently needs certain data, like user profiles or product lists, the application server can store this data in the cache. When users request it, the app can instantly provide the cached version instead of processing a full database query.


Drawbacks of Application Server Cache

The drawback appears when you add multiple servers to handle a high volume of requests. A load balancer spreads requests across the nodes, but each node has only its own cache and doesn't know about the data cached on other nodes.

- This results in many cache misses, meaning the data has to be re-fetched frequently, slowing things down.

- To fix this, there are two main options: Distributed Cache and Global Cache.
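To see why private per-node caches cause so many misses, here is a simplified Python model. It assumes a round-robin load balancer and an in-process `set` per node; the function `count_misses` is hypothetical and only counts how many requests would have to go to the database.

```python
from itertools import cycle


def count_misses(requests, num_nodes, shared):
    """Route requests round-robin across nodes and count cache misses.

    shared=False: every node keeps its own private cache (application server cache).
    shared=True:  all nodes read from one cache (the idea behind a global cache).
    """
    if shared:
        shared_cache = set()
        caches = [shared_cache] * num_nodes  # every node points at the same set
    else:
        caches = [set() for _ in range(num_nodes)]
    node_ids = cycle(range(num_nodes))       # naive round-robin load balancer
    misses = 0
    for key in requests:
        cache = caches[next(node_ids)]
        if key not in cache:
            misses += 1                      # this request must go to the database
            cache.add(key)
    return misses


# The same 7 popular items, requested 100 times each.
requests = [f"item:{i % 7}" for i in range(700)]
print(count_misses(requests, num_nodes=10, shared=False))  # 70
print(count_misses(requests, num_nodes=10, shared=True))   # 7
```

With 10 private caches, every node has to miss once for every item before it can serve it, so the database sees ten times as many reads as it would with a single shared cache.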

2. Distributed Cache

In a distributed cache, each node holds a part of the whole cache space, and a consistent hashing function is used to route each request to the node where its cached data can be found.

Suppose we have 10 nodes in a distributed system and a load balancer routing requests between them:

- Each of its nodes will have a small part of the cached data.

- To identify which node holds which data, the cache is partitioned using a consistent hashing function, so each request can be routed to the node where its cached data would be found.

- If a requesting node is looking for a certain piece of data, it can quickly know where to look within the distributed cache to check if the data is available.
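Consistent hashing can be sketched as a hash ring. The Python example below is a minimal illustration, not a production implementation: it assumes MD5 as the hash function, 100 virtual nodes per cache node, and hypothetical node names such as `cache-0`. A key belongs to the first node position found clockwise from the key's own hash.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    # Stable hash; Python's built-in hash() for strings is randomized per process.
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class ConsistentHashRing:
    """Maps cache keys to nodes; each node is placed at several ring positions."""

    def __init__(self, nodes, replicas=100):
        self.replicas = replicas
        self._ring = []    # sorted hash positions
        self._owners = {}  # hash position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self.replicas):
            pos = _hash(f"{node}#{i}")
            bisect.insort(self._ring, pos)
            self._owners[pos] = node

    def remove_node(self, node):
        for i in range(self.replicas):
            pos = _hash(f"{node}#{i}")
            self._ring.remove(pos)
            del self._owners[pos]

    def node_for(self, key):
        # Walk clockwise to the first node position at or after the key's hash.
        idx = bisect.bisect_left(self._ring, _hash(key))
        if idx == len(self._ring):
            idx = 0  # wrap around the ring
        return self._owners[self._ring[idx]]


ring = ConsistentHashRing([f"cache-{n}" for n in range(10)])
keys = [f"user:{i}" for i in range(10_000)]
before = {k: ring.node_for(k) for k in keys}
ring.add_node("cache-10")
moved = sum(before[k] != ring.node_for(k) for k in keys)
print(f"{moved} of {len(keys)} keys moved")  # roughly 1/11 of them
```

The benefit shows up when the cluster changes: only the keys that now fall just before the new node's positions move, and all of them move to the new node, while every other key stays where it was.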

3. Global Cache

As the name suggests, there is a single cache space that all the nodes share, and every request goes to it. There are two kinds of global cache:

- In the first kind, when the requested data is not found in the global cache, the cache itself is responsible for fetching the missing data from the underlying store (database, disk, etc.).

- In the second kind, when the cache doesn't find the data, the requesting node communicates directly with the database or server to fetch it.
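The two kinds of global cache differ only in who handles a miss. Here is a minimal Python sketch, assuming dictionaries for both the cache and the database: `ReadThroughCache` loads missing data itself through a hypothetical `load_from_db` function, while with `PassiveCache` the requesting node queries the database and fills the cache (the helper `node_read` is also hypothetical).

```python
from typing import Callable, Dict, List, Optional


class ReadThroughCache:
    """Kind 1: on a miss, the cache itself loads the data from the underlying store."""

    def __init__(self, loader: Callable[[str], Optional[str]]):
        self._loader = loader  # function that queries the database or disk
        self._data: Dict[str, Optional[str]] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            self._data[key] = self._loader(key)
        return self._data[key]


class PassiveCache:
    """Kind 2: the cache only stores and returns values; it never calls the database."""

    def __init__(self):
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


def node_read(cache: PassiveCache, key: str, db: Dict[str, str]) -> Optional[str]:
    """With a passive cache, the requesting node handles the miss itself."""
    value = cache.get(key)
    if value is None:
        value = db.get(key)  # the node queries the database directly
        if value is not None:
            cache.set(key, value)
    return value


db = {"product:1": "Keyboard"}
loads: List[str] = []


def load_from_db(key: str) -> Optional[str]:
    loads.append(key)  # record every trip to the database
    return db.get(key)


global_cache = ReadThroughCache(load_from_db)
global_cache.get("product:1")
global_cache.get("product:1")
print(loads)  # ['product:1']

passive = PassiveCache()
print(node_read(passive, "product:1", db))  # Keyboard
print(passive.get("product:1"))             # Keyboard
```

The first kind keeps the nodes simple because they never talk to the database; the second keeps the cache simple because it never needs to know where the data lives.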

4. Content Delivery Network (CDN)

A CDN is essentially a group of servers strategically placed across the globe to accelerate the delivery of web content. A CDN:

- Manages servers that are geographically distributed over different locations.

- Stores the web content in its servers.

- Attempts to direct each user to a server that is part of the CDN and close to the user so as to deliver content quickly.

A CDN is used when a website serves a large amount of static content, such as HTML files, CSS files, JavaScript files, pictures, and videos. A request first asks the CDN for the data; if the data exists there, it is returned. If not, the CDN queries the backend servers and then caches the data locally.
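The pull-through behavior of a CDN edge server can be sketched in a few lines of Python. This is a toy model under stated assumptions: `Origin`, `EdgeServer`, and the latency numbers are hypothetical, and "nearest" is approximated by the lowest measured latency instead of the DNS-based or anycast routing that real CDNs typically use.

```python
from typing import Dict


class Origin:
    """Hypothetical backend server that holds the original static files."""

    def __init__(self, files: Dict[str, bytes]):
        self.files = files
        self.requests = 0

    def fetch(self, path: str) -> bytes:
        self.requests += 1
        return self.files[path]


class EdgeServer:
    """A CDN edge node: serve from the local cache, otherwise pull from the origin."""

    def __init__(self, origin: Origin):
        self.origin = origin
        self.cache: Dict[str, bytes] = {}

    def get(self, path: str) -> bytes:
        if path not in self.cache:
            self.cache[path] = self.origin.fetch(path)  # miss: fetch and keep a copy
        return self.cache[path]


def nearest_edge(latency_ms: Dict[str, float]) -> str:
    """Pick the edge with the lowest latency for this user."""
    return min(latency_ms, key=latency_ms.get)


origin = Origin({"/logo.png": b"\x89PNG..."})
edges = {"eu-west": EdgeServer(origin), "us-east": EdgeServer(origin)}
user_latency = {"eu-west": 12.0, "us-east": 95.0}  # hypothetical measurements

for _ in range(3):
    edges[nearest_edge(user_latency)].get("/logo.png")
print(origin.requests)  # 1
```

Each edge fetches a file from the origin at most once; users routed to a different edge trigger one more origin fetch for that edge's own copy.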

Applications of Caching

Caching is used in many areas to speed up processes, reduce load, and make systems more efficient. Below are some common applications of caching:

- Web Page Caching: Browsers save copies of frequently visited web pages to speed up future loads. This saves bandwidth and shortens the time it takes for a page to load.

- Database Caching: Frequent database queries can strain servers and cause lag. By storing frequently used data in memory, caching lets apps retrieve it quickly without repeatedly querying the database.

- Content Delivery Networks (CDNs): CDNs use caching to keep copies of data (such as pictures and videos) in several places throughout the globe. This enhances website performance by enabling visitors to obtain content more quickly from a nearby server.

- Session Caching: Applications store session data in a cache to remember user information (like login status) between visits, making the experience seamless and personalized without needing to re-login.

- API Response Caching: Frequently requested API data, like stock prices or weather data, can be cached so that responses are faster and the load on the server is reduced.

Advantages of using Caching

Caching is a helpful technique in software development because it maximizes resource utilization, reduces server load, and improves overall scalability.

- Improved performance: By significantly cutting down the time it takes to retrieve frequently used data, caching can improve system responsiveness and performance.

- Reduced load on the original source: Because many requests are served from the cache, fewer of them reach the database or backend server, which reduces its load.

- Cost savings: Caching can reduce the need for expensive hardware or infrastructure upgrades by improving the efficiency of existing resources.

Disadvantages of using Caching

Despite its advantages, caching also has drawbacks, including:

- Data inconsistency: If the cache is not kept consistent with the original data source, users may be served stale or incorrect data.

- Cache eviction issues: If cache eviction policies are not designed properly, caching can result in performance issues or data loss.

- Additional complexity: Caching can add additional complexity to a system, which can make it more difficult to design, implement, and maintain.

Cache Invalidation

Cache invalidation is essential for systems that use caching to improve performance. Cached data is a temporary copy kept for faster access, so it goes out of date when the original data changes. Cache invalidation techniques ensure that out-of-date entries are either updated or deleted, so that users get the most recent information.

- Common strategies include time-based expiration, where cached data is discarded after a certain time, and event-driven invalidation, triggered by changes to the underlying data.

- Proper cache invalidation optimizes performance and avoids serving users with obsolete or inaccurate content from the cache.
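Both invalidation strategies can be combined in one small cache. In this Python sketch, each entry stores an expiry time (time-based expiration), and a hypothetical `update_price` function deletes the cached copy whenever the underlying data is written (event-driven invalidation). The clock is injectable so expiration can be shown without waiting; the 30-second TTL is an arbitrary assumption.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache with time-based expiration plus explicit (event-driven) invalidation."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so examples and tests can control time
        self._data: Dict[str, Tuple[Any, float]] = {}

    def set(self, key: str, value: Any) -> None:
        self._data[key] = (value, self.clock() + self.ttl)

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[key]  # time-based expiration: discard the stale entry
            return None
        return value

    def invalidate(self, key: str) -> None:
        self._data.pop(key, None)  # event-driven invalidation


def update_price(db: Dict[str, float], cache: TTLCache, key: str, price: float) -> None:
    """Write to the source of truth, then drop the now-stale cached copy."""
    db[key] = price
    cache.invalidate(key)


now = [0.0]  # fake clock, in seconds
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
cache.set("weather:paris", "18C")
now[0] = 10
print(cache.get("weather:paris"))  # 18C
now[0] = 31
print(cache.get("weather:paris"))  # None (expired)
```

A shorter TTL keeps data fresher at the cost of more cache misses; event-driven invalidation avoids that trade-off but requires every write path to remember to invalidate.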

Eviction Policy

Eviction policies are essential for caching systems to manage their limited capacity effectively. An eviction policy decides which existing item to remove when the cache is full and a new item needs to be stored.

- The Least Recently Used (LRU) policy is a popular strategy that evicts the item that was accessed least recently. It is based on the assumption that items used recently are more likely to be used again soon.

- Another method is the Least Frequently Used (LFU) policy, which removes the items that are accessed least often.

- Alternatively, the First-In-First-Out (FIFO) policy evicts the item that was added to the cache earliest.
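An LRU cache can be built on Python's `collections.OrderedDict`, which remembers key order and provides `move_to_end` and `popitem(last=False)`. The sketch below is one common way to do it, assuming a fixed capacity counted in entries rather than bytes.

```python
from collections import OrderedDict
from typing import Any, Hashable, List, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

    def keys(self) -> List[Hashable]:
        return list(self._data.keys())  # ordered from least to most recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" is now the most recently used
cache.put("c", 3)    # the cache is full, so "b" is evicted
print(cache.keys())  # ['a', 'c']
```

Dropping both `move_to_end` calls turns this into a FIFO cache, because entries would then leave in insertion order no matter how often they are read. For memoizing function calls, Python's standard library already offers `functools.lru_cache`.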

Roadmap to learn Caching

1. Basics of Caching

2. Types and Strategies of Caching

3. Advanced Topics in Caching

4. Popular Cache Providers

5. Case Study

Conclusion

Caching is becoming more common nowadays because it helps make things faster and saves resources. The internet is witnessing an exponential growth in content, including web pages, images, videos, and more. Caching helps reduce the load on servers by storing frequently accessed content closer to the users, leading to faster load times. Real-time applications, such as online gaming, video streaming, and collaborative tools, demand low-latency interactions. Caching helps in delivering content quickly by storing and serving frequently accessed data without the need to fetch it from the original source every time.
