Kubernetes Node Affinity and Anti-Affinity: Advanced Scheduling

Last Updated: 16 Sep, 2025

Before Node Affinity, we had two main tools for controlling Pod placement, each with significant limitations.

- nodeSelector: This is the simplest form of constraint. You specify a map of key-value pairs in the Pod spec, and the Pod is scheduled only on nodes that have all of those exact labels. Its limitation is rigidity: it supports only simple AND logic and cannot express preferences or more complex conditions such as OR or NotIn.
- Taints and Tolerations: This system works by repelling Pods. A taint on a node acts as a "gate," and only Pods with a matching toleration are allowed through. The primary limitation is that it is a mechanism for exclusion, not attraction. A Pod with a toleration is allowed to run on a tainted node, but it is not guaranteed to: the scheduler may still place it on a different, untainted node if that node has a higher overall score.
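For comparison, here is a minimal nodeSelector sketch. It is an illustration rather than part of the original walkthrough. The Pod name is hypothetical, and kubernetes.io/arch is a standard label that the kubelet sets on each node. Both pairs must match, and nodeSelector has no way to express alternatives or exclusions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod-nodeselector   # hypothetical name
spec:
  containers:
  - name: mycontainer
    image: nginx
  # Every key/value pair must match a node label exactly (logical AND).
  # There is no way to say "ssd OR nvme" or "NOT spot" here.
  nodeSelector:
    disktype: ssd
    kubernetes.io/arch: amd64
```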

Node Affinity was created to overcome these limitations by providing a Pod-centric way to express complex attraction rules.

Node Affinity

Node Affinity lets you constrain which nodes your Pod can be scheduled on, based on the labels of those nodes. It has two variations.

1. requiredDuringSchedulingIgnoredDuringExecution

- What it is: This is a hard requirement, similar to nodeSelector. The scheduler must find a node that satisfies the specified rules. If no such node exists, the Pod remains in the Pending state.
- Breaking down the name:
  - requiredDuringScheduling: The rule is mandatory when the scheduler first places the Pod.
  - IgnoredDuringExecution: If the labels on the node change after the Pod is already running, the Pod is not evicted. It continues running on that node.
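A required rule can combine conditions in two ways, and this is where it goes beyond nodeSelector. In the sketch below (a fragment of a Pod spec, where disktype=nvme is a hypothetical label value), the entries under nodeSelectorTerms are ORed, so a node qualifies if it satisfies any one term. The expressions inside a single matchExpressions list are ANDed.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      # Terms are ORed: a node qualifies if it satisfies ANY term.
      nodeSelectorTerms:
      # Term 1: SSD disk AND amd64 architecture.
      - matchExpressions:
        # Expressions inside one term are ANDed.
        - key: disktype
          operator: In
          values:
          - ssd
        - key: kubernetes.io/arch
          operator: In
          values:
          - amd64
      # Term 2: any node labeled disktype=nvme (hypothetical value).
      - matchExpressions:
        - key: disktype
          operator: In
          values:
          - nvme
```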

2. preferredDuringSchedulingIgnoredDuringExecution

- What it is: This is a soft preference. The scheduler will try to find a node that satisfies the rules and give it a higher score, making it a more attractive choice. However, if a suitable node cannot be found, the scheduler will place the Pod on another valid node.

- The weight field: Each preference is assigned a weight between 1 and 100. When the scheduler finds a node that matches a preference, it adds the weight to that node's score. A higher weight makes a rule more important.
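Here is a minimal sketch of weighted preferences, written as a fragment of a Pod spec with zone-a as a hypothetical zone name. A node matching both terms accumulates 100 for this rule, a node matching only the SSD term accumulates 80, and a node matching neither gets 0. The scheduler then normalizes these values and combines them with its other scoring factors. The Pod can still land on a non-matching node if nothing better is available.

```yaml
affinity:
  nodeAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    # Nodes labeled disktype=ssd earn 80 toward this rule.
    - weight: 80
      preference:
        matchExpressions:
        - key: disktype
          operator: In
          values:
          - ssd
    # Nodes in the hypothetical zone-a earn a further 20.
    - weight: 20
      preference:
        matchExpressions:
        - key: topology.kubernetes.io/zone
          operator: In
          values:
          - zone-a
```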

These rules use matchExpressions with the operators In, NotIn, Exists, and DoesNotExist (plus Gt and Lt for integer comparisons), making them far more expressive than nodeSelector.
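The sketch below shows each operator once inside a single term, so all of them must hold at the same time. It is a fragment of a Pod spec. Apart from topology.kubernetes.io/zone, the label keys and the zone names are hypothetical and stand in for whatever labels your nodes actually carry.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        # In: the label's value must be one of the listed values.
        - key: topology.kubernetes.io/zone
          operator: In
          values:
          - zone-a
          - zone-b
        # NotIn: the value must not be any listed value
        # (nodes without this label also satisfy NotIn).
        - key: instance-lifecycle
          operator: NotIn
          values:
          - spot
        # Exists: the key must be present; values must be omitted.
        - key: gpu
          operator: Exists
        # DoesNotExist: the key must be absent; values must be omitted.
        - key: maintenance
          operator: DoesNotExist
        # Gt (and Lt): exactly one value, compared as an integer.
        - key: cpu-generation
          operator: Gt
          values:
          - "3"
```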

Implementation of Node Affinity in Kubernetes

Step 1: Create Node Labels

First, label the nodes you want to target, because Node Affinity and Anti-Affinity rules select nodes by their labels. Labels are key-value pairs attached to a node.

```shell
kubectl label nodes <node-name> <key>=<value>

# For example, mark a node as having SSD storage, the label matched by the Pod manifest below:
kubectl label nodes <node-name> disktype=ssd
```
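Once a node is labeled, the key-value pair appears under metadata.labels in the Node object, and affinity rules are evaluated against those labels. The excerpt below is a trimmed sketch of what `kubectl get node <node-name> -o yaml` shows; real output contains many more fields. `kubectl get nodes --show-labels` lists the same labels in a compact table.

```yaml
# Trimmed excerpt of a Node object; most fields omitted.
apiVersion: v1
kind: Node
metadata:
  name: <node-name>
  labels:
    disktype: ssd                         # added by kubectl label
    kubernetes.io/hostname: <node-name>   # set by the kubelet; typically the node's hostname
```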

Step 2: Define Node Affinity in the Pod Manifest

Next, create a Pod manifest containing the Node Affinity rules. The following example ensures that the Pod is scheduled only on nodes that carry the disktype=ssd label.

```yaml
# Save as mypod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
  - name: mycontainer
    image: nginx
  affinity:
    nodeAffinity:
      # Hard rule: schedule only onto nodes labeled disktype=ssd.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
```

Step 3: Apply the Manifest

Next, apply the manifest with kubectl to create the Pod:

```shell
kubectl apply -f mypod.yaml
```

Step 4: Verify Pod Placement

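Finally, verify that the Pod was scheduled on the intended node. The NODE column in the output shows where the Pod landed, and that node should carry the disktype=ssd label.

```shell
kubectl get pod mypod -o wide
```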

Best Practices of Node Affinity in Kubernetes

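- Manage Node Taints and Tolerations: For more precise control over Pod placement, combine node affinity with taints and tolerations. Affinity attracts a Pod to certain nodes, while a taint keeps Pods without a matching toleration off those nodes. Used together, they can dedicate nodes to specific workloads.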

- Monitor and Adjust: Monitor how your node affinity rules impact pod scheduling, and modify them as necessary in light of cluster utilization and performance.

- Use Labels Strategically: Assign labels to nodes according to their hardware specifications, location, or other pertinent information.

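- Topology Keys: In inter-pod affinity and anti-affinity rules, the topologyKey field names a node label that defines the domain in which the rule applies (a single node, a zone, or a region). Examples include kubernetes.io/hostname, topology.kubernetes.io/zone, or a custom label.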
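Here is a sketch of the taints-plus-affinity combination described in the first practice above. It assumes you have prepared the target nodes with a hypothetical label and taint, for example `kubectl label nodes <node-name> dedicated=gpu` and `kubectl taint nodes <node-name> dedicated=gpu:NoSchedule`. The Pod name and image are placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gpu-workload          # hypothetical name
spec:
  containers:
  - name: trainer
    image: nginx              # placeholder image
  # The toleration lets this Pod past the taint that repels other Pods...
  tolerations:
  - key: dedicated
    operator: Equal
    value: gpu
    effect: NoSchedule
  # ...and the required node affinity keeps it off every other node.
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: dedicated
            operator: In
            values:
            - gpu
```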

What is Node Anti-Affinity in Kubernetes?

Anti-affinity expresses where Pods should not run. Kubernetes has no separate nodeAntiAffinity field. To keep Pods off particular nodes, you use the NotIn or DoesNotExist operators inside nodeAffinity. To keep Pods away from each other, you use podAntiAffinity, which is what the implementation steps in this section use. Spreading Pods across multiple nodes or zones is especially beneficial in high-availability setups because it reduces the chance of a single point of failure. More generally, just as affinity lets you restrict Pods to specific nodes, anti-affinity lets you prevent them from running on specific nodes.
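The node-level form of anti-affinity can be sketched as a Pod spec fragment that pairs a hard exclusion with a soft one. The node-pool and maintenance labels are hypothetical and stand in for whatever labels mark the nodes you want to avoid.

```yaml
affinity:
  nodeAffinity:
    # Hard node anti-affinity: never schedule onto spot nodes.
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: node-pool
          operator: NotIn
          values:
          - spot
    # Soft node anti-affinity: prefer, but do not require, nodes
    # that have no maintenance label.
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 50
      preference:
        matchExpressions:
        - key: maintenance
          operator: DoesNotExist
```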

Implementation of Node Anti-Affinity in Kubernetes

Step 1: Create Pod Labels

First, make sure your web server pods are labeled correctly. Here's a sample deployment with labels for our web server pods.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webserver-app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webserver
  template:
    metadata:
      labels:
        app: webserver
    spec:
      containers:
      - name: nginx-container
        image: nginx:latest
```

Step 2: Make a Pod Manifest with Anti-Affinity Rules

To use anti-affinity, define it in the affinity section of the Pod spec (or of a Deployment's Pod template). The following example uses podAntiAffinity to ensure that no two Pods labeled app=frontend are scheduled on the same node.

```yaml
# Save as frontend-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend-pod
  labels:
    app: frontend
spec:
  containers:
  - name: nginx-container
    image: nginx
  affinity:
    podAntiAffinity:
      # A list of terms: each entry needs its own "- labelSelector".
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - frontend
        # The topology domain is a single node (hostname).
        topologyKey: "kubernetes.io/hostname"
```
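To apply the same idea to the web server Deployment from Step 1, place the rule in the Deployment's Pod template. The sketch below is one way to do it, not a required layout. Its labelSelector matches the template's own app: webserver label, so each of the three replicas must land on a different node. If fewer than three nodes are eligible, the surplus replicas remain Pending.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webserver-app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webserver
  template:
    metadata:
      labels:
        app: webserver
    spec:
      affinity:
        podAntiAffinity:
          # No two Pods labeled app=webserver may share a node.
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                app: webserver
            topologyKey: kubernetes.io/hostname
      containers:
      - name: nginx-container
        image: nginx:latest
```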

Step 3: Apply the Manifest

Apply the manifest to create the Pod. Because of the anti-affinity rule, the scheduler will not place it on a node that already runs a Pod labeled app=frontend.

```shell
kubectl apply -f frontend-pod.yaml
```

Output:

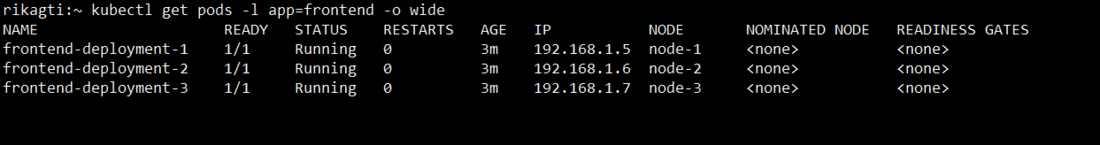

Step 4: Verify Anti-Affinity Behavior

Finally, once the Pods are running, check which node each one was placed on to confirm that the anti-affinity rule works as intended. Each Pod labeled app=frontend should appear on a different node in the NODE column.

```shell
kubectl get pods -l app=frontend -o wide
```

Best Practices of Node Anti-Affinity in Kubernetes

- Balance Preferences: To keep scheduling flexible, use required rules for critical constraints and preferred rules to guide placement without strictly enforcing it.

- Use for High Availability: Spread out pods among several nodes or availability zones using anti-affinity rules to improve fault tolerance and prevent single points of failure.

- Track the Performance of the Cluster: Affinity and anti-affinity rules affect scheduling efficiency and resource usage. Inter-pod affinity and anti-affinity in particular require substantial processing and can noticeably slow scheduling in large clusters. Keep an eye on your cluster to make sure it stays performant and balanced.

- Specify Proper Weighting: In preferred anti-affinity rules, use the weight field (1 to 100) to express how strongly each preference should count, without making it mandatory.
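A softer version of the web server spreading rule uses weighted preferred terms. The sketch below is a fragment for the Deployment's Pod template spec that reuses the app: webserver label. It assumes your nodes carry the standard topology.kubernetes.io/zone label, which many cloud providers set automatically. Spreading across zones is weighted more heavily than spreading across nodes, and replicas are still scheduled when no spread-out placement is available.

```yaml
affinity:
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    # Strongly prefer zones that do not already run an app=webserver Pod...
    - weight: 100
      podAffinityTerm:
        labelSelector:
          matchLabels:
            app: webserver
        topologyKey: topology.kubernetes.io/zone
    # ...and, less strongly, nodes that do not already run one.
    - weight: 50
      podAffinityTerm:
        labelSelector:
          matchLabels:
            app: webserver
        topologyKey: kubernetes.io/hostname
```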

Conclusion

In conclusion, Node Affinity and Anti-Affinity give you fine-grained control over Pod placement in Kubernetes. Mastering them lets you optimize your deployments for performance, reliability, and compliance.
