Floating Point Representation

Last Updated: 08 Oct, 2025

Floating-point representation lets computers work with very large or very small real numbers using scientific notation. IEEE 754 defines this format using three parts: sign, exponent, and mantissa. It includes two main types:

- Single precision (32 bits)

- Double precision (64 bits)

The following description explains terminology and primary details of IEEE 754 binary floating-point representation.

The discussion is confined to the single and double precision formats. A real number in binary is usually written in the following format,

$I_m I_{m-1} \ldots I_2 I_1 I_0 \,.\, F_1 F_2 \ldots F_{n-1} F_n$

where each $I_k$ (integer part) and each $F_k$ (fraction part) is either 0 or 1.

A finite number can also be represented by four components: a sign (s), a base (b), a significand (m), and an exponent (e). The numerical value of the number is then evaluated as

$(-1)^s \times m \times b^e$, where $m < |b|$

Depending on the base and the number of bits used to encode various components, the IEEE 754 standard defines five basic formats. Among the five formats, the binary32 and the binary64 formats are single precision and double precision formats, respectively, in which the base is 2.

How Floating Point Numbers Are Represented

The representation is based on scientific notation, where a number is written as a fraction multiplied by a base raised to an exponent. In computing, this representation allows a trade-off between range and precision.

Format: A floating point number is typically represented as:

$\text{Value} = \text{Sign} \times \text{Significand} \times \text{Base}^{\text{Exponent}}$

where:

- Sign: Indicates whether the number is positive or negative.

- Significand (Mantissa): Represents the precision bits of the number.

- Base: Usually 2 in binary systems.

- Exponent: Determines the scale of the number.
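As a rough illustration of the formula above, the following sketch evaluates a value from its four components. The function name `fp_value` and its parameter names are hypothetical, chosen only for this example.

```python
def fp_value(sign, significand, base, exponent):
    """Evaluate (-1)^sign * significand * base^exponent."""
    return (-1) ** sign * significand * base ** exponent

# 1.001 in binary is 1 + 1/8 = 1.125, so 1.001 (binary) x 2^2 = 4.5
print(fp_value(0, 1.125, 2, 2))   # 4.5
print(fp_value(1, 1.125, 2, 2))   # -4.5
```

Flipping only the sign argument changes the sign of the result, while the significand and exponent together fix the magnitude.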

Need for Floating Point Representation

Floating-point representation is important because of its:

- Range: It can represent a wide range of values, from very large to very small numbers.

- Precision: It provides a good balance between precision and range, making it suitable for scientific computation, graphics, and other applications where both a wide range and good relative precision are necessary.

- Flexibility: It adapts to different scales of numbers, allowing efficient storage and computation of real numbers in computer systems.

Number System and Data Representation

- Number Systems: Floating-point representation on digital computers usually uses the binary (base-2) system. Other number systems, such as decimal (base-10) or hexadecimal (base-16), may be used in other contexts.

- Data Representation: This covers how numbers are stored in computer memory, including binary encoding and the representation of the various data types.

Table - Precision Representation

| Precision | Base | Sign (bits) | Exponent (bits) | Significand (bits) |
|---|---|---|---|---|
| Single precision | 2 | 1 | 8 | 23+1 |
| Double precision | 2 | 1 | 11 | 52+1 |

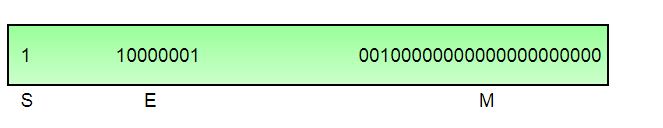

The single precision format has 23 bits for the significand (plus 1 implied bit, described below), 8 bits for the exponent, and 1 bit for the sign.

For example, the rational number 9÷2 can be converted to the single precision float format as follows,

$9_{10} \div 2_{10} = 4.5_{10} = 100.1_2$
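The decimal-to-binary step can be sketched with repeated doubling of the fraction part. The helper `to_binary` below is a hypothetical name used only for illustration, and it assumes a non-negative input.

```python
def to_binary(x, max_frac_bits=23):
    """Return the binary expansion of a non-negative number as a string."""
    int_part = int(x)
    frac = x - int_part
    bits = []
    # Each doubling shifts the next fraction bit into the integer position.
    while frac and len(bits) < max_frac_bits:
        frac *= 2
        bit = int(frac)
        bits.append(str(bit))
        frac -= bit
    return bin(int_part)[2:] + ("." + "".join(bits) if bits else "")

print(to_binary(4.5))    # 100.1
print(to_binary(0.375))  # 0.011
```

The `max_frac_bits` limit stops the loop for values such as 0.1 whose binary expansion does not terminate.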

A normalized floating-point number includes an implied leading 1 before the binary point, such as 1.001₂ × 2², allowing for greater precision by effectively using 24 bits in single precision. The mantissa stores only the fraction part, excluding implied 1.

Normalized numbers carry the full precision of the format. Subnormal (denormalized) numbers arise when a value's exponent is too small to be represented in normalized form. They lack the implied leading 1 and have reduced precision, but they are useful for representing values very close to zero, preventing abrupt underflow.

For example, (−1)⁰ × 1.001₂ × 2² has a mantissa of 001 and an exponent of 2, and is encoded accordingly in binary floating-point format.

In single-precision floating-point representation, the exponent is stored as a biased exponent so that both positive and negative exponents can be handled efficiently. For example, if the actual exponent is 2, it is stored as 129 (i.e., 127 + 2), where 127 is the bias. The bias is calculated as $2^{n-1} - 1$, where $n$ is the number of bits in the exponent field (8 for single precision, giving $2^7 - 1 = 127$).

- The biased exponent is stored as a plain unsigned binary number, so floating-point numbers of the same sign can be ordered by comparing their bit patterns as integers.

- Unlike a sign-magnitude or two’s complement exponent, this method simplifies hardware implementation and sorting of values.

E = e + 127

The exponent range for normalized numbers in the single precision format is -126 to +127. The biased values 0 and 255 are reserved for special cases: 0 for zero and subnormal numbers, and 255 for infinity and NaN.

Note: When a floating-point number is unpacked, the exponent field holds the biased exponent. Subtracting 127 from the biased exponent gives the unbiased exponent.
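The unpacking step can be sketched with Python's standard `struct` module. The function `decode_float32` is a hypothetical helper written for this example; it splits the 32-bit pattern of 4.5 into the three fields described above.

```python
import struct

def decode_float32(x):
    """Split x (rounded to single precision) into sign, biased exponent, fraction."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31
    biased_exponent = (bits >> 23) & 0xFF
    fraction = bits & 0x7FFFFF
    return sign, biased_exponent, fraction

sign, biased, fraction = decode_float32(4.5)
print(sign)                      # 0
print(biased, biased - 127)      # 129 2
print(format(fraction, "023b"))  # 00100000000000000000000
```

The fraction field holds only the bits 001 after the binary point of 1.001, followed by zeros; the leading 1 is implied and not stored.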

Double precision uses 64 bits: 1 for the sign, 11 for the exponent, and 52 for the significand (plus 1 implied bit). It follows the same rules as single precision, with a bias of 1023 and larger fields for greater range and accuracy.
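A minimal sketch of the same decoding for double precision, again using `struct` and the value 4.5. The variable names are chosen only for this illustration; the bias for an 11-bit exponent field is 1023.

```python
import struct

# Reinterpret the 8 bytes of a double as a 64-bit unsigned integer.
(bits,) = struct.unpack(">Q", struct.pack(">d", 4.5))

sign = bits >> 63
biased_exponent = (bits >> 52) & 0x7FF   # 11 exponent bits
fraction = bits & ((1 << 52) - 1)        # 52 stored significand bits

print(hex(bits))                                      # 0x4012000000000000
print(sign, biased_exponent, biased_exponent - 1023)  # 0 1025 2
```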

Precision

Precision describes the smallest relative change between two neighbouring representable values. Single precision offers about 7 decimal digits, while double precision provides about 15 to 16 decimal digits. The extra significand bits in double precision allow more accurate representation and calculation of real numbers, whether very small or very large.
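The difference in precision can be observed directly. In the sketch below, `to_float32` is a hypothetical helper that rounds a Python float (a double) to single precision by packing and unpacking it with `struct`.

```python
import struct
import sys

def to_float32(x):
    """Round a Python float (double precision) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

# Gap between 1.0 and the next larger double (machine epsilon).
print(sys.float_info.epsilon == 2.0 ** -52)  # True

# Single precision has 24 significant bits, so 2**24 + 1 cannot be stored.
print(to_float32(16777217.0))                # 16777216.0

# Double precision has 53 significant bits and holds the same value exactly.
print(16777217.0 == 2 ** 24 + 1)             # True
```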

Accuracy

Accuracy depends on the number of significant bits, while range is determined by the exponent. Since not all real numbers can be exactly represented, rounding modes (down, up, toward zero, to nearest) are used to choose the closest representable value.
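As an illustration of inexact representation, the sketch below uses `fractions.Fraction` to inspect the exact value stored for the decimal literal 0.1. It assumes the default round-to-nearest behaviour of Python floats, which are double precision.

```python
from fractions import Fraction

# Fraction(x) returns the exact rational value held by the double x.
stored = Fraction(0.1)

print(stored == Fraction(1, 10))      # False: 0.1 was rounded when stored
print(stored.denominator == 2 ** 55)  # True: the stored value is a binary fraction
print(0.1 + 0.2 == 0.3)               # False: rounding errors differ on each side
```

Because of this, floating-point results are usually compared with a tolerance (for example with `math.isclose`) rather than with `==`.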

Special Bit Patterns

Certain patterns represent special cases like zero, which can’t be normalized due to the missing leading 1. Both +0 and -0 are valid and distinct representations of zero in floating-point format.

0 00000000 00000000000000000000000 = +0

1 00000000 00000000000000000000000 = -0

Similarly, the standard defines two different bit patterns for +INF and -INF, given below,

0 11111111 00000000000000000000000 = +INF

1 11111111 00000000000000000000000 = -INF
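The four patterns above can be checked by reinterpreting them as single-precision values. The helper `from_bits32` is a hypothetical name used for this sketch.

```python
import math
import struct

def from_bits32(bits):
    """Interpret a 32-bit integer as a single-precision value."""
    return struct.unpack(">f", struct.pack(">I", bits))[0]

pos_zero = from_bits32(0x00000000)
neg_zero = from_bits32(0x80000000)

print(pos_zero == neg_zero)          # True: the two zeros compare equal
print(math.copysign(1.0, neg_zero))  # -1.0: the sign bit is still there
print(from_bits32(0x7F800000))       # inf
print(from_bits32(0xFF800000))       # -inf
```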

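Subnormal numbers use the all-zeros exponent bit pattern together with a non-zero significand field. Reserving this pattern slightly reduces the exponent range available to normalized numbers but allows representation of values very close to zero.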
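A small sketch of the boundary between normalized and subnormal values in single precision, again reinterpreting bit patterns with `struct`. The helper `from_bits32` and the variable names are assumptions made for this example.

```python
import struct

def from_bits32(bits):
    """Interpret a 32-bit integer as a single-precision value."""
    return struct.unpack(">f", struct.pack(">I", bits))[0]

smallest_normal = from_bits32(0x00800000)     # exponent field 1, fraction 0
smallest_subnormal = from_bits32(0x00000001)  # exponent field 0, fraction 1

print(smallest_normal == 2.0 ** -126)     # True
print(smallest_subnormal == 2.0 ** -149)  # True
```

The smallest subnormal has only one significant bit, which is why precision degrades as values approach zero.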

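Undefined operations such as 0 × INF, 0 ÷ 0, or INF − INF result in NaN (Not a Number), which is represented with all 1s in the exponent and a non-zero significand. Almost every arithmetic operation involving NaN also yields NaN.

x 11111111 mxxxxxxxxxxxxxxxxxxxxxx

Here x stands for either bit value, and m is the most significant bit of the significand field. On most current hardware m = 1 marks a quiet NaN and m = 0 marks a signaling NaN. When m = 0, at least one other significand bit must be set, because an all-zero significand encodes infinity. This gives two kinds of NaN.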

0 11111111 00000000000000000000001 = Signaling NaN (SNaN)

0 11111111 10000000000000000000001 = Quiet NaN (QNaN)

QNaN and SNaN are typically used for error handling. A QNaN propagates through most operations without raising an exception, whereas an SNaN raises an invalid-operation exception when it is consumed by most operations.
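Quiet NaN behaviour can be observed from Python, whose floats follow IEEE 754 double precision on common platforms. This is only a sketch; signaling NaNs are not shown because their handling depends on the hardware and the language runtime.

```python
import math
import struct

inf = float("inf")
nan = inf - inf                 # an undefined operation produces NaN

print(math.isnan(nan))          # True
print(math.isnan(0.0 * inf))    # True
print(nan == nan)               # False: NaN compares unequal even to itself
print(math.isnan(nan + 1.0))    # True: NaN propagates through arithmetic

# All-ones exponent with the top significand bit set: a quiet NaN pattern.
quiet = struct.unpack(">f", struct.pack(">I", 0x7FC00000))[0]
print(math.isnan(quiet))        # True
```

Because NaN never equals itself, `math.isnan` is the reliable way to test for it.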

Overflow and Underflow

Overflow occurs when the true result of an arithmetic operation is finite but larger in magnitude than the largest floating-point number that can be stored in the given precision. Underflow occurs when the true result is non-zero but smaller in magnitude than the smallest normalized floating-point number that can be stored. Overflow cannot be ignored, because the result is replaced by infinity (or by the largest finite number, depending on the rounding mode), whereas an underflowed result can often be approximated by a subnormal number or by zero.
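Both effects can be sketched with Python floats, which are double precision. The limits come from `sys.float_info`; the literal `5e-324` is the smallest positive subnormal double.

```python
import sys

largest = sys.float_info.max          # largest finite double
smallest_normal = sys.float_info.min  # smallest positive normalized double
smallest_subnormal = 5e-324           # smallest positive subnormal double

print(largest * 2)                    # inf: overflow
print(smallest_normal / 2 > 0.0)      # True: result is a subnormal, not zero
print(smallest_subnormal / 2)         # 0.0: underflow all the way to zero
```

Note that multiplication overflows silently to `inf` here, while some other Python operations (such as `**` on floats) raise `OverflowError` instead.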

Endianness

The IEEE 754 standard defines a binary floating point format. The architecture details are left to the hardware manufacturers. The storage order of individual bytes in binary floating point numbers varies from architecture to architecture.
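The effect of byte order can be seen by packing the same single-precision value both ways with `struct`, where `<` selects little-endian and `>` selects big-endian. This is a small sketch using the value 4.5 from the earlier example.

```python
import struct
import sys

little = struct.pack("<f", 4.5)
big = struct.pack(">f", 4.5)

print(little.hex())   # 00009040
print(big.hex())      # 40900000
print(sys.byteorder)  # byte order of the machine running the script
```

The bit pattern is the same in both cases; only the order in which its four bytes are stored differs.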

Applications

- Scientific Computations: Used in simulations, modeling, and calculations requiring high precision and large ranges.

- Graphics: Essential in rendering and manipulating graphical data, where precise calculations are needed.

- Engineering: Applied in fields such as aerospace, mechanical engineering, and electronics for accurate measurements and simulations.
