OS Based Solutions in Process Synchronization

Last Updated: 06 Sep, 2025

Operating system-based solutions to the critical section problem use tools like semaphores, Sleep-Wakeup and monitors. These mechanisms help processes synchronize and ensure only one process accesses the critical section at a time. They are widely used in multitasking systems for efficient resource management.

The main OS-based solutions to the critical section problem are:

- Semaphores

- Monitors

- Sleep-Wakeup

1. Semaphores

A semaphore is a synchronization tool used in concurrent programming to control access to shared resources. It works like a counter that tracks how many processes can use a resource at a time.

- Wait (P) operation: If the semaphore value > 0, it is decremented and the process continues; if 0, the process is blocked.

- Signal (V) operation: Increments the semaphore value or wakes up a blocked process.

Semaphores help prevent race conditions and allow coordination among multiple processes or threads. In their general form they are called counting semaphores, since the initial value decides how many processes can access the resource simultaneously.
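As a rough illustration of the wait and signal operations, the sketch below uses Python's `threading.Semaphore`, whose `acquire()` and `release()` methods play the roles of P and V. The `worker` and `run` functions, the thread count, and the iteration count are illustrative assumptions, and threads stand in for processes.

```python
import threading

counter = 0
sem = threading.Semaphore(1)   # initial value 1: one thread at a time

def worker(iterations):
    global counter
    for _ in range(iterations):
        sem.acquire()          # wait (P): blocks while the value is 0
        counter += 1           # critical section
        sem.release()          # signal (V): lets a blocked thread continue

def run(num_threads=4, iterations=10_000):
    """Start the workers and return the final value of the shared counter."""
    global counter
    counter = 0
    threads = [threading.Thread(target=worker, args=(iterations,))
               for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

if __name__ == "__main__":
    print(run())   # every increment is protected, so none is lost
```

Because each increment happens between a wait and a signal, the final count equals the number of threads times the number of iterations.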

Types of Semaphores:

There are two types of semaphores:

- Counting Semaphore – Allows multiple processes to access a resource up to a specified limit.

- Binary Semaphore (Mutex) – Works like a simple lock, allowing only one process at a time.

Working of Semaphore

A semaphore is a simple yet powerful synchronization tool used to manage access to shared resources in a system with multiple processes. It works by maintaining a counter that controls access to a specific resource, ensuring that no more than the allowed number of processes access the resource at the same time.

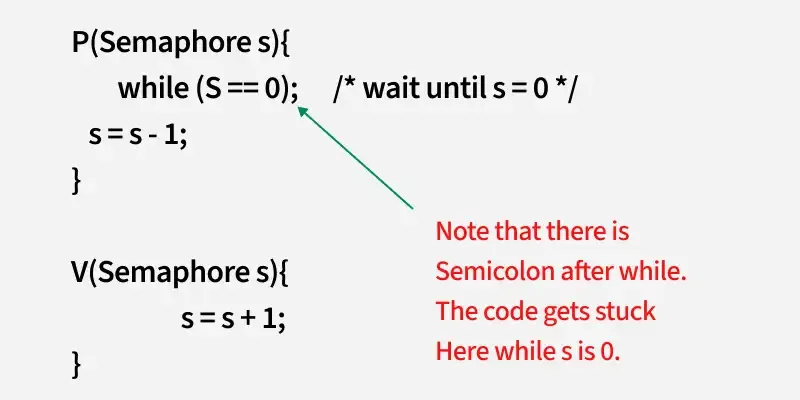

Now let us see how it does so. The value of the semaphore variable is accessed and changed only through two operations: P (wait) and V (signal).

A critical section is surrounded by both operations to implement process synchronization: a process calls P before entering the critical section and V after leaving it. The accompanying image demonstrates the basic mechanism of how semaphores are used to control access to a critical section in a multi-process environment, ensuring that only one process can access the shared resource at a time.

Now, let us see how it implements mutual exclusion. Let there be two processes, P1 and P2, and a semaphore s initialized to 1. If P1 enters its critical section first, it performs P on s and the value of s becomes 0. If P2 now wants to enter its critical section, it must wait until s > 0, which can only happen when P1 finishes its critical section and calls the V operation on s.

This way mutual exclusion is achieved. See the accompanying image for details of this behavior, which is that of a binary semaphore.
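The P1/P2 scenario can be traced in a few lines of Python. This is only a sketch: it uses `threading.Semaphore` with a non-blocking `acquire` so the whole trace runs in one thread, whereas a real P operation would block P2 until P1 calls V. The helper names `try_enter`, `leave`, and `trace` are made up for the illustration.

```python
import threading

s = threading.Semaphore(1)            # semaphore s initialized to 1

def try_enter(name):
    """Attempt P(s) without blocking; report whether the process got in."""
    entered = s.acquire(blocking=False)
    action = "enters" if entered else "must wait for"
    print(f"{name} {action} the critical section")
    return entered

def leave(name):
    """V(s): leave the critical section and free the semaphore."""
    s.release()
    print(f"{name} leaves the critical section")

def trace():
    results = []
    results.append(try_enter("P1"))   # s: 1 -> 0, P1 is inside
    results.append(try_enter("P2"))   # s is 0, so P2 cannot enter
    leave("P1")                       # s: 0 -> 1
    results.append(try_enter("P2"))   # s: 1 -> 0, P2 is inside
    leave("P2")                       # s: 0 -> 1
    return results

if __name__ == "__main__":
    trace()
```

P2's first attempt fails while P1 is inside, and its second attempt succeeds only after P1 has signalled.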

Implementation of Binary Semaphores

C++

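#include <queue>

struct process { /* process details (PCB) */ };

// Assumed to be provided by the OS (hypothetical declarations):
process* current_process();   // the process that is calling P
void sleep();                 // suspend the calling process
void wakeup(process* p);      // make p runnable again

struct semaphore {
    enum value { ZERO, ONE };
    value s_value = ONE;
    std::queue<process*> q;   // processes blocked on this semaphore
};

// P and V are assumed to execute atomically.
void P(semaphore& s) {
    if (s.s_value == semaphore::ONE) {
        s.s_value = semaphore::ZERO;   // take the semaphore and continue
    }
    else {
        // add the calling process to the waiting queue and suspend it
        s.q.push(current_process());
        sleep();
    }
}

void V(semaphore& s) {
    if (s.q.empty()) {
        s.s_value = semaphore::ONE;    // nobody is waiting: free the semaphore
    }
    else {
        // select a process from the waiting queue
        process* p = s.q.front();
        // remove it from the queue as it has been sent to the CS;
        // s_value stays ZERO because the CS is still occupied
        s.q.pop();
        wakeup(p);
    }
}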

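C

#define MAX_WAITING 100

typedef struct process {
    int pid;   // placeholder for process details
} process;

typedef enum { ZERO, ONE } value;

typedef struct semaphore {
    value s_value;
    process* q[MAX_WAITING];   // circular queue of blocked processes
    int front, rear;           // the queue is empty when front == rear
} semaphore;

// Assumed to be provided by the OS (hypothetical declarations):
process* current_process(void);   // the process that is calling P
void block(void);                 // suspend the calling process
void wakeup(process* p);          // make p runnable again

void P(semaphore* s) {
    if (s->s_value == ONE) {
        s->s_value = ZERO;
    }
    else {
        // add the calling process to the waiting queue and suspend it
        s->q[s->rear] = current_process();
        s->rear = (s->rear + 1) % MAX_WAITING;
        block();
    }
}

void V(semaphore* s) {
    if (s->front == s->rear) {
        s->s_value = ONE;   // nobody is waiting: free the semaphore
    }
    else {
        // select a process from the waiting queue
        process* p = s->q[s->front];
        // remove it from the queue as it has been sent to the CS
        s->front = (s->front + 1) % MAX_WAITING;
        wakeup(p);
    }
}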

Java

import java.util.LinkedList;
import java.util.Queue;

enum Value { ZERO, ONE }

class Process {}

class Semaphore {
    Value value = Value.ONE;
    Queue<Process> q = new LinkedList<>();   // processes blocked on this semaphore
}

public class SemaphoreOperations {

    public static void P(Semaphore s) {
        if (s.value == Value.ONE) {
            s.value = Value.ZERO;
        } else {
            // add the calling process to the waiting queue and suspend it
            s.q.add(currentProcess());
            block();
        }
    }

    public static void V(Semaphore s) {
        if (s.q.isEmpty()) {
            s.value = Value.ONE;
        } else {
            // remove a process from the waiting queue as it has been
            // sent to the CS
            Process p = s.q.remove();
            wakeup(p);
        }
    }

    // The helpers below stand in for OS services (hypothetical stubs).
    private static Process currentProcess() { return new Process(); }

    private static void block() { /* suspend the calling process */ }

    private static void wakeup(Process p) { /* make p runnable again */ }
}

Python

from queue import Queue

class Value:
    ZERO = 0
    ONE = 1

class Process:
    pass

class Semaphore:
    def __init__(self):
        self.value = Value.ONE
        self.q = Queue()   # processes blocked on this semaphore

# The three helpers below stand in for OS services (hypothetical stubs).
def current_process():
    return Process()

def block():
    pass   # suspend the calling process

def wakeup(p):
    pass   # make p runnable again

def P(s):
    if s.value == Value.ONE:
        s.value = Value.ZERO
    else:
        # add the calling process to the waiting queue and suspend it
        s.q.put(current_process())
        block()

def V(s):
    if s.q.empty():
        s.value = Value.ONE
    else:
        # remove a process from the waiting queue as it has been
        # sent to the CS
        p = s.q.get()
        wakeup(p)

The description above is for a binary semaphore, which can take only two values, 0 and 1, and ensures mutual exclusion. The other type is the counting semaphore, whose value can be greater than one. For example:

- A resource has 4 instances, so the semaphore is initialized as S = 4.

- A process requests the resource using P (wait) and releases it using V (signal).

- If S = 0, new processes must wait until a resource is released.

- Example: P1, P2, P3, and P4 take all 4 instances (S = 0). If P5 requests, it waits until one of them calls signal and frees a resource.
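The four-instance example can be sketched with Python's `threading.Semaphore`, using threads in place of processes. The bookkeeping variables `in_use` and `max_in_use`, the hold time, and the `run` helper are assumptions added only to make the limit observable.

```python
import threading
import time

INSTANCES = 4
S = threading.Semaphore(INSTANCES)    # S = 4: four instances available
state_lock = threading.Lock()         # protects the bookkeeping below
in_use = 0
max_in_use = 0

def process(hold_time=0.01):
    global in_use, max_in_use
    S.acquire()                       # P: take one instance (waits if S is 0)
    with state_lock:
        in_use += 1
        max_in_use = max(max_in_use, in_use)
    time.sleep(hold_time)             # pretend to use the resource
    with state_lock:
        in_use -= 1
    S.release()                       # V: give the instance back

def run(num_processes=5):
    """Run the given number of workers; return the peak number holding an instance."""
    global in_use, max_in_use
    in_use = max_in_use = 0
    threads = [threading.Thread(target=process) for _ in range(num_processes)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return max_in_use

if __name__ == "__main__":
    print("peak concurrent users:", run(5))   # never exceeds INSTANCES
```

With five workers and four instances, whichever worker arrives last has to wait until one of the others signals.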

Implementation of Counting semaphore

C++

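#include <queue>

struct Process { /* Process Control Block (PCB) details */ };

// Assumed to be provided by the OS (hypothetical declarations):
Process* current_process();   // the process that is calling P
void block();                 // suspend the calling process
void wakeup(Process* p);      // make p runnable again

struct Semaphore {
    int value;
    // q contains the Process Control Blocks (PCBs) of the
    // processes that got blocked while performing the
    // P (down) operation.
    std::queue<Process*> q;
};

void P(Semaphore& s)
{
    s.value = s.value - 1;
    if (s.value < 0) {
        // add the currently executing process to the queue
        s.q.push(current_process());
        block();
    }
}

void V(Semaphore& s)
{
    s.value = s.value + 1;
    if (s.value <= 0) {
        // at least one process is waiting: remove it from the queue
        Process* p = s.q.front();
        s.q.pop();
        wakeup(p);
    }
}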

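C

typedef struct Process {
    int pid;   // placeholder for Process Control Block (PCB) details
} Process;

// A FIFO queue of processes. The layout and the helper functions
// declared below are assumptions (hypothetical names).
typedef struct {
    Process* items[100];
    int front, rear;
} Queue;

void queue_push(Queue* q, Process* p);
Process* queue_pop(Queue* q);
Process* current_process(void);   // the process that is calling P
void block(void);                 // suspend the calling process
void wakeup(Process* p);          // make p runnable again

typedef struct {
    int value;
    // process_queue contains the PCBs of the processes that
    // got blocked while performing the P (down) operation.
    Queue process_queue;
} Semaphore;

void P(Semaphore *s) {
    s->value = s->value - 1;
    if (s->value < 0) {
        // add the currently executing process to the queue
        queue_push(&s->process_queue, current_process());
        block();
    }
}

void V(Semaphore *s) {
    s->value = s->value + 1;
    if (s->value <= 0) {
        // remove one blocked process from the queue and resume it
        Process* p = queue_pop(&s->process_queue);
        wakeup(p);
    }
}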

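Java

import java.util.LinkedList;
import java.util.Queue;

class Process {}

class Semaphore {
    int value;
    // processes that got blocked while performing the P operation
    Queue<Process> q = new LinkedList<>();

    Semaphore(int initialValue) {
        value = initialValue;
    }

    void P() {
        value--;
        if (value < 0) {
            // add the currently executing process to the queue
            q.add(currentProcess());
            block();
        }
    }

    void V() {
        value++;
        if (value <= 0) {
            // remove one blocked process from the queue and resume it
            Process p = q.poll();
            wakeup(p);
        }
    }

    // The helpers below stand in for OS services (hypothetical stubs).
    Process currentProcess() { return new Process(); }

    void block() {}

    void wakeup(Process p) {}
}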

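Python

from collections import deque

class Process:
    pass

class Semaphore:
    def __init__(self, value=0):
        self.value = value   # number of available resource instances
        self.q = deque()     # processes blocked while performing P

    def P(self):
        self.value -= 1
        if self.value < 0:
            # add the currently executing process to the queue
            self.q.append(self.current_process())
            self.block()

    def V(self):
        self.value += 1
        if self.value <= 0:
            # remove one blocked process from the queue and resume it
            p = self.q.popleft()
            self.wakeup(p)

    # The helpers below stand in for OS services (hypothetical stubs).
    def current_process(self):
        return Process()

    def block(self):
        pass

    def wakeup(self, p):
        pass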

In this implementation, whenever a process has to wait, it is added to the waiting queue associated with that semaphore and suspended through the block() system call. When a process finishes with the resource, it calls the signal operation (V), and one process from the queue is resumed through the wakeup() system call.
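The block() and wakeup() calls are left abstract in the listings. One way to make the idea runnable in user space is sketched below in Python, under these assumptions: threads stand in for processes, a `threading.Lock` makes each P and V atomic, and a per-waiter `threading.Event` plays the role of block()/wakeup(). The class name `CountingSemaphore` and the `demo` helper are hypothetical.

```python
import threading
import time
from collections import deque

class CountingSemaphore:
    def __init__(self, value):
        self._value = value
        self._waiters = deque()          # one Event per blocked thread
        self._lock = threading.Lock()    # makes each P and V atomic

    def P(self):
        with self._lock:
            self._value -= 1
            if self._value >= 0:
                return                   # a resource was free
            gate = threading.Event()
            self._waiters.append(gate)   # join the waiting queue
        gate.wait()                      # block() until V() opens the gate

    def V(self):
        with self._lock:
            self._value += 1
            if self._value <= 0:
                self._waiters.popleft().set()   # wakeup() the oldest waiter

    def value(self):
        with self._lock:
            return self._value

    def waiting(self):
        with self._lock:
            return len(self._waiters)

def demo():
    sem = CountingSemaphore(1)
    order = []
    sem.P()                              # main thread takes the only slot

    def waiter():
        sem.P()                          # value becomes -1, so this blocks
        order.append("waiter entered")
        sem.V()

    t = threading.Thread(target=waiter)
    t.start()
    while sem.waiting() == 0:            # wait until the waiter is queued
        time.sleep(0.001)
    order.append("main leaving")
    sem.V()                              # value -1 -> 0, wakes the waiter
    t.join()
    return order, sem.value()

if __name__ == "__main__":
    print(demo())
```

As in the listings, a negative value records how many threads are waiting, which is why V wakes a waiter only when the incremented value is still less than or equal to zero.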


2. Monitors

Monitors are advanced tools provided by operating systems to control access to shared resources. They group shared variables, procedures, and synchronization methods into one unit making sure that only one process can use the monitor's procedures at a time.

Key Features of Monitors

- Automatic Mutual Exclusion: Only one process can access the monitor at a time, eliminating the need for manual locks.

- Condition Variables: Monitors use condition variables with operations like wait() and signal() to handle process synchronization.

- Built-In Synchronization: The operating system ensures that no two processes are inside the monitor simultaneously, simplifying the synchronization process.
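Python has no built-in monitor construct, so the sketch below only approximates one: a class keeps its shared variable private and runs every public method under a single lock, which gives callers mutual exclusion without any locking code of their own. The `AccountMonitor` class, its methods, and the numbers in `run` are illustrative assumptions.

```python
import threading

class AccountMonitor:
    """Shared data plus the procedures that operate on it, behind one lock."""

    def __init__(self, balance):
        self._lock = threading.Lock()
        self._balance = balance

    def withdraw(self, amount):
        with self._lock:                  # only one thread inside at a time
            if self._balance < amount:
                return False              # check and update happen atomically
            self._balance -= amount
            return True

    def balance(self):
        with self._lock:
            return self._balance

def run(initial=100, num_threads=8, attempts=50):
    """Return (number of successful withdrawals, final balance)."""
    account = AccountMonitor(initial)
    results = [0] * num_threads

    def worker(i):
        for _ in range(attempts):
            if account.withdraw(1):
                results[i] += 1           # each thread writes only its own slot

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(results), account.balance()

if __name__ == "__main__":
    print(run())
```

Since the balance check and the subtraction run inside the same locked method, the balance can never go negative, however the threads interleave.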

Syntax

Each condition variable, say x, has its own queue of blocked processes (its block queue).

- x.wait(): suspends the calling process and places it in x’s queue.

- x.signal(): wakes up one process from x’s queue (if any).

The x.signal() operation behaves as follows:

    if (x's block queue is empty)
        // ignore the signal
    else
        // resume a process from the block queue
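To show wait() and signal() on condition variables in running code, here is a small bounded buffer written in Python with `threading.Condition`, where `notify()` corresponds to signal(). It is a sketch under assumptions: the `BoundedBuffer` class and the producer/consumer in `run` are invented for the example, and Python's conditions do not hand control to the woken thread immediately, so each wait sits in a `while` loop that re-checks its condition.

```python
import threading
from collections import deque

class BoundedBuffer:
    def __init__(self, capacity):
        lock = threading.Lock()                      # the monitor lock
        self._not_full = threading.Condition(lock)   # condition variable 1
        self._not_empty = threading.Condition(lock)  # condition variable 2
        self._items = deque()
        self._capacity = capacity

    def put(self, item):
        with self._not_full:                         # enter the monitor
            while len(self._items) == self._capacity:
                self._not_full.wait()                # join not_full's queue
            self._items.append(item)
            self._not_empty.notify()                 # signal: ignored if nobody waits

    def get(self):
        with self._not_empty:                        # enter the monitor
            while not self._items:
                self._not_empty.wait()               # join not_empty's queue
            item = self._items.popleft()
            self._not_full.notify()                  # signal a waiting producer
            return item

def run(count=10, capacity=2):
    """Pass the numbers 0..count-1 through the buffer; return them as received."""
    buffer = BoundedBuffer(capacity)
    received = []

    def producer():
        for i in range(count):
            buffer.put(i)

    def consumer():
        for _ in range(count):
            received.append(buffer.get())

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received

if __name__ == "__main__":
    print(run())
```

Both condition variables share one lock, so a producer and a consumer are never inside the buffer's methods at the same time.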


3. Sleep-Wakeup

The Sleep and Wakeup mechanism is an operating system-based solution to the critical section problem. It helps processes to avoid busy waiting while waiting for access to shared resources.

How It Works

Sleep

- When a process wants to enter the critical section but finds the resource is already being used by another process, it doesn’t keep checking in a loop.

- Instead, it is put to sleep. This means the process temporarily stops running and frees up CPU resources, allowing other processes to execute.

Wakeup

- Once the resource becomes available (e.g., the current process in the critical section finishes its work), the operating system or another process sends a wakeup signal to the sleeping process.

- The sleeping process then resumes execution and checks if it can now enter the critical section.

Example

Imagine two processes, P1 and P2, want to use a printer (a shared resource):

- If P1 is already using the printer, P2 goes to sleep instead of repeatedly checking if the printer is free.

- When P1 finishes printing, it sends a wakeup signal to P2 letting it know the printer is now available.
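The printer example can be sketched in Python with `threading.Condition`, where `wait()` acts as sleep and `notify()` as wakeup. The `Printer` class, its `log` list, and the `someone_sleeping` event (used only so the demo knows when P2 has gone to sleep) are assumptions made for the illustration, and threads stand in for processes.

```python
import threading

class Printer:
    def __init__(self):
        self._cond = threading.Condition()
        self._busy = False
        self.log = []
        self.someone_sleeping = threading.Event()   # demo-only helper

    def acquire(self, name):
        with self._cond:
            while self._busy:                 # re-check after every wakeup
                self.log.append(f"{name} sleeps")
                self.someone_sleeping.set()
                self._cond.wait()             # sleep: no busy waiting
            self._busy = True
            self.log.append(f"{name} prints")

    def release(self):
        with self._cond:
            self._busy = False
            self._cond.notify()               # wakeup one sleeping process

def demo():
    printer = Printer()
    printer.acquire("P1")                     # P1 is using the printer

    def second():
        printer.acquire("P2")                 # finds the printer busy, sleeps
        printer.release()

    p2 = threading.Thread(target=second)
    p2.start()
    printer.someone_sleeping.wait()           # wait until P2 has gone to sleep
    printer.release()                         # P1 finishes and wakes P2
    p2.join()
    return printer.log

if __name__ == "__main__":
    print(demo())
```

After being woken, P2 checks the busy flag again before printing, matching the description that a resumed process checks whether it can now enter the critical section.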

Benefits

- Avoids Busy Waiting: Processes don’t waste CPU time checking for resource availability.

- Efficient Resource Usage: The CPU can focus on other processes while one waits.

- Simplifies Synchronization: Processes are paused and resumed automatically based on resource availability.
