How to Create Load Balancing Servers using Node.js?

Last Updated: 13 Jun, 2024

Load balancing is a technique for distributing incoming network traffic across multiple servers so that no single server becomes overwhelmed, which improves responsiveness and availability. In this article, we'll explore how to create load-balancing servers using Node.js.

Why Load Balancing?

Load balancing is essential for:

- Improved Performance: Distributes workload evenly across multiple servers.

- High Availability: Ensures service continuity in case one server fails.

- Scalability: Allows the application to handle increased traffic by adding more servers.

How to set up a load-balancing server?

Using Cluster Module

Node.js has a built-in module called cluster that lets an application take advantage of a multi-core system. With it, you can launch one Node.js instance (a worker) per CPU core. The primary (master) process listens on a port, accepts incoming connections, and distributes them across the workers (round-robin by default on every platform except Windows). This way, your application can use all of the system's cores instead of just one. The following examples compare performance without and with the cluster module.
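A minimal sketch, assuming Node.js 16 or later, that makes the primary's distribution strategy explicit and chooses how many workers to fork. `workerCount` is a hypothetical helper, not part of the cluster API. `os.availableParallelism()` only exists from Node.js 18.14 onward, so the sketch falls back to `os.cpus().length` on older versions.

```javascript
const cluster = require('node:cluster');
const os = require('node:os');

// Make round-robin distribution explicit. This is a global setting and must
// be made before the first cluster.fork() (or cluster.setupPrimary()) call.
// The NODE_CLUSTER_SCHED_POLICY environment variable ('rr' or 'none') can set
// the same thing from outside the program.
cluster.schedulingPolicy = cluster.SCHED_RR;

// Hypothetical helper: how many workers to fork.
// os.availableParallelism() exists in Node.js 18.14+; older versions fall
// back to the number of logical CPUs.
function workerCount() {
  return typeof os.availableParallelism === 'function'
    ? os.availableParallelism()
    : os.cpus().length;
}

if (cluster.isPrimary) {
  console.log(`Primary ${process.pid} would fork ${workerCount()} workers`);
}
```

The alternative, `cluster.SCHED_NONE`, leaves connection distribution to the operating system.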

Without Cluster Module:

Make sure you have installed Express using the following command (crypto is a built-in Node.js module, so it does not need to be installed):

npm install express

Example: A server that generates an RSA key pair for every request to /key, running as a single Node.js process.

Node

const { generateKeyPair } = require('crypto');
const app = require('express')();

// API endpoint
// Generate an RSA key pair on every request and send back the public key
app.get('/key', (req, res) => {
  generateKeyPair('rsa', {
    modulusLength: 2048,
    publicKeyEncoding: {
      type: 'spki',
      format: 'pem'
    },
    privateKeyEncoding: {
      type: 'pkcs8',
      format: 'pem',
      cipher: 'aes-256-cbc',
      passphrase: 'top secret'
    }
  }, (err, publicKey, privateKey) => {
    // Report key-generation failures instead of sending an empty response
    if (err) {
      return res.status(500).send('Key generation failed');
    }
    res.send(publicKey);
  });
});

app.listen(3000, err => {
  err ?
    console.log("Error in server setup") :
    console.log('Server listening on PORT 3000');
});

Steps to Run the Application: Run the application using the following command from the root directory of the project:

node index.js

Output: We will see the following output on the terminal screen:

Server listening on PORT 3000

Now open your browser and go to http://localhost:3000/key. You will see output similar to the following (a new key pair is generated for every request, so your key will differ):

-----BEGIN PUBLIC KEY-----
MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAwAneYp5HlT93Y3ZlPAHj
ZAnPFvBskQKKfo4an8jskcgEuG85KnZ7/16kQw2Q8/7Ksdm0sIF7qmAUOu0B773X
1BXQ0liWh+ctHIq/C0e9eM1zOsX6vWwX5Y+WH610cpcb50ltmCeyRmD5Qvf+OE/C
BqYrQxVRf4q9+029woF84Lk4tK6OXsdU+Gdqo2FSUzqhwwvYZJJXhW6Gt259m0wD
YTZlactvfwhe2EHkHAdN8RdLqiJH9kZV47D6sLS9YG6Ai/HneBIjzTtdXQjqi5vF
Y+H+ixZGeShypVHVS119Mi+hnHs7SMzY0GmRleOpna58O1RKPGQg49E7Hr0dz8eh
6QIDAQAB
-----END PUBLIC KEY-----

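The above code listens on port 3000 and sends a public key as the response. Generating an RSA key pair is CPU-intensive work, and here a single Node.js process handles every request. The asynchronous generateKeyPair() call runs on libuv's thread pool, which has four threads by default, so this one process can generate at most four keys at a time. To measure performance, we used the autocannon tool to load-test the server, as shown below: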

The above image shows that the server handled about 2000 requests with 500 concurrent connections over 10 seconds, an average of 190.1 requests per second.

Using Cluster Module:

Example: The same key-generation server, this time launched with the cluster module.

Node

const express = require('express');
const cluster = require('cluster');
const { generateKeyPair } = require('crypto');

// Check the number of available CPUs.
const numCPUs = require('os').cpus().length;

const app = express();
const PORT = 3000;

// For the primary (master) process.
// cluster.isPrimary (Node.js 16+) replaces the deprecated cluster.isMaster.
if (cluster.isPrimary) {
  console.log(`Master ${process.pid} is running`);

  // Fork one worker per CPU core.
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork();
  }

  // This event fires when a worker dies.
  cluster.on('exit', (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} died`);
  });
}
// For workers
else {
  // Workers can share any TCP connection.
  // In this case, it is an HTTP server.
  app.listen(PORT, err => {
    err ?
      console.log("Error in server setup") :
      console.log(`Worker ${process.pid} started`);
  });

  // API endpoint
  // Send the public key
  app.get('/key', (req, res) => {
    generateKeyPair('rsa', {
      modulusLength: 2048,
      publicKeyEncoding: {
        type: 'spki',
        format: 'pem'
      },
      privateKeyEncoding: {
        type: 'pkcs8',
        format: 'pem',
        cipher: 'aes-256-cbc',
        passphrase: 'top secret'
      }
    }, (err, publicKey, privateKey) => {
      // Report key-generation failures instead of sending an empty response
      if (err) {
        return res.status(500).send('Key generation failed');
      }
      res.send(publicKey);
    });
  });
}
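The cluster example's exit handler only logs that a worker died, so the pool shrinks with every crash. Below is a minimal sketch of replacing crashed workers. `respawnOnExit` is a hypothetical helper. `worker.exitedAfterDisconnect` is the real cluster property that distinguishes intentional shutdowns from crashes.

```javascript
const cluster = require('node:cluster');

// Hypothetical helper: replace workers that die unexpectedly so the pool
// keeps its size. The cluster module is a parameter only so the logic can be
// exercised without forking real processes.
function respawnOnExit(clusterLike = cluster) {
  clusterLike.on('exit', (worker, code, signal) => {
    console.log(`worker ${worker.process.pid} died (${signal || code})`);

    // exitedAfterDisconnect is true when the primary stopped the worker on
    // purpose (worker.disconnect() or worker.kill()); don't replace those.
    if (!worker.exitedAfterDisconnect) {
      clusterLike.fork();
    }
  });
}

// Usage, inside the primary branch of the cluster example:
//   for (let i = 0; i < numCPUs; i++) cluster.fork();
//   respawnOnExit();
```

In production, you would usually also limit how quickly workers are restarted. Otherwise, a worker that crashes during startup will respawn in a tight loop.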

Steps to Run the Application: Run the application using the following command from the root directory of the project:

node index.js

Output: We will see output like the following on the terminal screen, with one "Worker ... started" line per CPU core:

Master 16916 is running

Worker 6504 started

Worker 14824 started

Worker 20868 started

Worker 12312 started

Worker 9968 started

Worker 16544 started

Worker 8676 started

Worker 11064 started

Now open your browser and go to http://localhost:3000/key. You will see output similar to the following:

-----BEGIN PUBLIC KEY-----
MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAzxMQp9y9MblP9dXWuQhf
sdlEVnrgmCIyP7CAveYEkI6ua5PJFLRStKHTe3O8rxu+h6I2exXn92F/4RE9Yo8E
OnrUCSlqy9bl9qY8D7uBMWir0I65xMZu3rM9Yxi+6gP8H4CMDiJhLoIEap+d9Czr
OastDPwI+HF+6nmLkHvuq9X5aORvdiOBwMooIoiRpHbgcHovSerJIfQipGs74IiR
107GbpznSUxMIuwV1fgc6mAULuGZl+Daj0SDxfAjk8KiHyXbfHe5stkPNOCWIsbA
tCbGN0bCTR8ZJCLdZ4/VGr+eE0NOvOrElXdXLTDVVzO5dKadoEAtzZzzuQId2P/z
JwIDAQAB
-----END PUBLIC KEY-----

The above Node.js application is launched on each core of the system. The primary process accepts incoming connections and distributes them across all workers. The performance in this case is shown below:

The above image shows that the server handled about 5000 requests with the same 500 concurrent connections over 10 seconds. The reported average is 162.06 requests per second. So, with the cluster module, the same machine can handle more requests. Sometimes even that is not enough. In that case, your option is horizontal scaling: running the application on multiple servers and distributing traffic across them.

Using Nginx

If you have more than one application server and need to distribute client requests across all of them, you can use Nginx as a reverse proxy. Nginx sits in front of your server pool and distributes requests across it (by default, using round-robin). In the following example, one Node.js application listens on four different ports. In practice, the instances could just as well be separate processes or run on other servers.
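Each server line in an Nginx upstream block can also take a weight parameter, for example `server 127.0.0.1:3000 weight=5;`. To show how weights affect the order of requests, here is a JavaScript sketch of smooth weighted round-robin, the idea behind Nginx's default balancer. `createWeightedRoundRobin` is a hypothetical helper, not an Nginx or Node.js API.

```javascript
// Hypothetical helper: smooth weighted round-robin.
// servers: [{ url, weight }]. Heavier servers are picked more often, and the
// picks are interleaved instead of arriving in bursts.
function createWeightedRoundRobin(servers) {
  const state = servers.map((s) => ({ ...s, current: 0 }));
  const total = servers.reduce((sum, s) => sum + s.weight, 0);

  return function next() {
    let best = null;
    for (const s of state) {
      s.current += s.weight; // every server earns its weight each round
      if (best === null || s.current > best.current) best = s; // ties: first listed
    }
    best.current -= total; // the chosen server pays back the total weight
    return best.url;
  };
}

const next = createWeightedRoundRobin([
  { url: '127.0.0.1:3000', weight: 5 },
  { url: '127.0.0.1:3001', weight: 1 },
  { url: '127.0.0.1:3002', weight: 1 },
]);
// Prints the order in which seven requests are assigned:
// port 3000 gets five of them, spread out rather than back to back.
console.log(Array.from({ length: 7 }, () => next()).join(' '));
```

With equal weights, this reduces to plain round-robin.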

Example: An Express application that listens on four ports, so that Nginx can balance requests across them.

Node

const app = require('express')();

// API endpoint
app.get('/', (req, res) => {
  res.send("Welcome to GeeksforGeeks !");
});

// Launching the application on several ports
app.listen(3000);
app.listen(3001);
app.listen(3002);
app.listen(3003);

Now install Nginx on your machine and create a new file in /etc/nginx/conf.d/ called your-domain.com.conf with the following code in it.

upstream my_http_servers {
    # httpServer1 listens to port 3000
    server 127.0.0.1:3000;
    # httpServer2 listens to port 3001
    server 127.0.0.1:3001;
    # httpServer3 listens to port 3002
    server 127.0.0.1:3002;
    # httpServer4 listens to port 3003
    server 127.0.0.1:3003;
}

server {
    listen 80;
    server_name your-domain.com www.your-domain.com;

    location / {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $http_host;
        proxy_pass http://my_http_servers;
    }
}

Using Express Web Server

You can also build the load balancer itself with Express. If you are comfortable with Node.js, this gives you full control over how requests are routed, as shown in the following example.

Step 1: Create an empty NodeJS application.

mkdir LoadBalancer

cd LoadBalancer

npm init -y

Step 2: Install the required dependencies (Express, axios, and concurrently) using the following commands.

npm i express axios

npm i concurrently -g

Step 3: Create two files: config.js for the load-balancer server and index.js for the application servers.

Example: Implementation of the load-balancer server using Express. Here, the filename is config.js.

Node

const express = require('express');
const path = require('path');
const axios = require('axios');

const app = express();

// Parse JSON request bodies so they can be forwarded (needed for POST)
app.use(express.json());

// Application servers
const servers = [
  "http://localhost:3000",
  "http://localhost:3001"
];

// Index of the application server that receives the next request
let current = 0;

// Receive a new request and forward it to an application server
const handler = async (req, res) => {
  // Destructure the following properties from the request object
  const { method, url, headers, body } = req;

  // Select the current server to forward the request to
  const server = servers[current];

  // Advance to the next server, wrapping around at the end (round-robin)
  current = (current + 1) % servers.length;

  // Drop headers that no longer match the forwarded request;
  // they are recomputed for the target server and the re-encoded body
  const forwardHeaders = { ...headers };
  delete forwardHeaders.host;
  delete forwardHeaders['content-length'];

  try {
    // Request the underlying application server
    const response = await axios({
      url: `${server}${url}`,
      method: method,
      headers: forwardHeaders,
      data: body
    });

    // Send the application server's response back to the client
    res.status(response.status).send(response.data);
  }
  catch (err) {
    // Send back an error message
    res.status(500).send("Server error!");
  }
};

// Serve favicon.ico locally so browser favicon requests are not forwarded
// (assumes a favicon.ico file in the project folder)
app.get('/favicon.ico', (req, res) =>
  res.sendFile(path.join(__dirname, 'favicon.ico')));

// Pass every other request to the handler
app.use(handler);

// Listen on PORT 8080
app.listen(8080, err => {
  err ?
    console.log("Failed to listen on PORT 8080") :
    console.log("Load Balancer Server listening on PORT 8080");
});
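In the load balancer above, if the selected application server is down, the client receives "Server error!" even when the other server is healthy. Below is a minimal sketch of round-robin with failover, which tries the remaining servers before giving up. `createBalancer` and the injected `send` function are hypothetical names. In config.js, `send` would be the axios call for the given server.

```javascript
// Hypothetical helper: round-robin selection with failover.
// `send(server)` is any async function that forwards the current request to
// `server` and resolves with the response.
function createBalancer(servers) {
  let current = 0;

  return async function forward(send) {
    let lastError;
    // Try each server at most once, starting with the next one in turn.
    for (let attempt = 0; attempt < servers.length; attempt++) {
      const server = servers[current];
      current = (current + 1) % servers.length;
      try {
        return await send(server);
      } catch (err) {
        lastError = err; // this server failed; move on to the next one
      }
    }
    throw lastError; // every server failed
  };
}

// Demo with a fake `send` in which the server on port 3000 is down.
const forward = createBalancer(['http://localhost:3000', 'http://localhost:3001']);
const fakeSend = async (server) => {
  if (server.endsWith(':3000')) throw new Error('ECONNREFUSED');
  return `Response from ${server}`;
};
forward(fakeSend).then(console.log); // Response from http://localhost:3001
```

Retrying is only safe when a failed attempt has no side effects. If a POST may already have reached the application server before the error, returning the error is usually the better choice.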

Here, the filename is index.js

Node

const express = require('express');

const app1 = express();
const app2 = express();

// Handler factory: each server identifies itself by number
const handler = num => (req, res) => {
  res.send('Response from server ' + num);
};

// Only handle GET and POST requests, for any path.
// A regular expression matches every path and, unlike '*',
// is also accepted by the route syntax of Express 5.
app1.get(/.*/, handler(1)).post(/.*/, handler(1));
app2.get(/.*/, handler(2)).post(/.*/, handler(2));

// Start server on PORT 3000
app1.listen(3000, err => {
  err ?
    console.log("Failed to listen on PORT 3000") :
    console.log("Application Server listening on PORT 3000");
});

// Start server on PORT 3001
app2.listen(3001, err => {
  err ?
    console.log("Failed to listen on PORT 3001") :
    console.log("Application Server listening on PORT 3001");
});

Explanation: The above code starts two Express apps, one on port 3000 and another on port 3001. The load balancer runs as a separate process and alternates between them. It sends one request to port 3000, the next to port 3001, the one after that back to port 3000, and so on.
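Round-robin, as used above, ignores how busy each server is. A common alternative is least connections, which Nginx offers through the least_conn directive in an upstream block. Below is a minimal sketch of that strategy. `createLeastConnections` and its `acquire`/`release` methods are hypothetical names.

```javascript
// Hypothetical helper: least-connections selection. It counts in-flight
// requests per server and sends each new request to the least busy one
// (ties go to the server listed first).
function createLeastConnections(servers) {
  const active = new Map(servers.map((s) => [s, 0]));

  return {
    // Choose a server and record one more in-flight request for it.
    acquire() {
      let best = servers[0];
      for (const s of servers) {
        if (active.get(s) < active.get(best)) best = s;
      }
      active.set(best, active.get(best) + 1);
      return best;
    },
    // Call once the response from `server` has been sent to the client.
    release(server) {
      active.set(server, Math.max(0, active.get(server) - 1));
    },
  };
}

const lb = createLeastConnections(['http://localhost:3000', 'http://localhost:3001']);
const first = lb.acquire(); // http://localhost:3000 (both idle; first listed wins)
lb.acquire();               // http://localhost:3001
lb.release(first);          // the request to port 3000 finishes
console.log(lb.acquire());  // http://localhost:3000 (now the less busy server)
```

In config.js, the handler would call `acquire()` instead of reading `servers[current]`, and call `release()` in a `finally` block once the axios request completes.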

Step 4: Open a command prompt in your project folder and run both scripts in parallel using concurrently.

concurrently "node config.js" "node index.js"

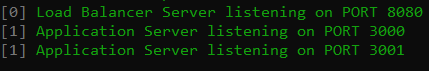

Output:

We will see the following output on the console:

Now, open a browser, go to http://localhost:8080/, and make a few requests. We will see the following output:
