PySpark Join Types - Join Two DataFrames

Last Updated :

16 Jun, 2025

In PySpark, joins combine rows from two DataFrames using a common key. Common types include inner, left, right, full outer, left semi and left anti joins. Each type serves a different purpose for handling matched or unmatched data during merges. The syntax is:

dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"type")

where,

- dataframe1 is the first dataframe

- dataframe2 is the second dataframe

- column_name is the column which are matching in both the dataframes type is the join type we have to join

Create the first dataframe for demonstration:

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate() # Init SparkSession

d = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols = ['ID', 'Name', 'Company']

df = spark.createDataFrame(d, cols)

df.show()

Output

Create second dataframe for demonstration:

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate() # Init SparkSession

d1 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols = ['ID', 'salary', 'department']

df1 = spark.createDataFrame(d1, cols)

df1.show()

Output

Inner join

This will join the two PySpark dataframes on key columns, which are common in both dataframes. Syntax:

dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"inner")

Example:

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('join_example').getOrCreate()

# first DataFrame

d1 = [("1", "sravan", "company 1"),

("2", "ojaswi", "company 1"),

("3", "rohith", "company 2"),

("4", "sridevi", "company 1"),

("5", "bobby", "company 1")]

cols1 = ['id', 'name', 'company']

df1 = spark.createDataFrame(d1, cols1)

# second DataFrame

d2 = [("1", "45000", "IT"),

("2", "145000", "Manager"),

("6", "45000", "HR"),

("5", "34000", "Sales")]

cols2 = ['id', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.id == df2.id, "inner").show()

Output

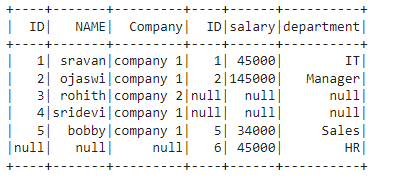

Full Outer Join

This join joins the two dataframes with all matching and non-matching rows, we can perform this join in three ways. Syntax:

- outer: dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"outer")

- full: dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"full")

- fullouter: dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"fullouter")

Example 1: Using outer keyword

In this example, we are going to perform outer join based on the ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# first dataframe data

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, cols1)

# second dataframe data

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.ID == df2.ID, "outer").show()

Output

Example 2: Using full keyword

In this example, we are going to perform outer join using full keyword based on ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# first dataframe data

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, cols1)

# second dataframe data

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.ID == df2.ID, "full").show()

Output

Example 3: Using fullouter keyword

In this example, we are going to perform outer join using full outer based on ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# First dataframe data

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, cols1)

# Second dataframe data

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.ID == df2.ID, "fullouter").show()

Output

Left Join

Here this join joins the dataframe by returning all rows from the first dataframe and only matched rows from the second dataframe with respect to the first dataframe. We can perform this type of join using left and leftouter. Syntax:

- left: dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"left")

- leftouter: dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"leftouter")

In this example, we are going to perform left join using the left keyword based on the ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# First dataframe data

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, cols1)

# Second dataframe data

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.ID == df2.ID, "left").show()

Output

In this example, we are going to perform left join using leftouter keyword based on the ID column in both dataframes

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# first dataframe

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, cols1)

# second dataframe

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.ID == df2.ID, "leftouter").show()

Output

Right Join

Here this join joins the dataframe by returning all rows from the second dataframe and only matched rows from the first dataframe with respect to the second dataframe. We can perform this type of join using right and rightouter. Syntax:

- right: dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"right")

- rightouter: dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"rightouter")

In this example, we are going to perform right join using the right keyword based on ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# Data for left dataframe

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, cols1)

# Data for right dataframe

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.ID == df2.ID, "right").show()

Output

In this example, we are going to perform the right join using rightouter keyword based on the ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# first dataframe data and columns

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

col1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, col1)

# second dataframe data and columns

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

col2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, col2)

df1.join(df2, df1.ID == df2.ID, "rightouter").show()

Output

Leftsemi join

This join will all rows from the first dataframe and return only matched rows from the second dataframe. Syntax:

dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"leftsemi")

Example: In this example, we are going to perform leftsemi join using leftsemi keyword based on the ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# employee data

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols1 = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(d1, cols1)

# salary & department data

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols2 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(d2, cols2)

df1.join(df2, df1.ID == df2.ID, "leftsemi").show()

Output

LeftAnti join

This join returns only columns from the first dataframe for non-matched records of the second dataframe. Syntax:

dataframe1.join(dataframe2,dataframe1.column_name == dataframe2.column_name,"leftanti")

Example: In this example, we are going to perform leftanti join using leftanti keyword based on the ID column in both dataframes.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

col1 = ['ID', 'NAME', 'COMPANY']

df1 = spark.createDataFrame(d1, col1)

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

col2 = ['ID', 'SALARY', 'DEPT']

df2 = spark.createDataFrame(d2, col2)

df1.join(df2, df1.ID == df2.ID, "leftanti").show()

Output:

SQL Expression

We can perform all types of the above joins using an SQL expression, we have to mention the type of join in this expression. To do this, we have to create a temporary view. Syntax:

dataframe.createOrReplaceTempView("name")

where

- dataframe is the input dataframe

- name is the view name

Now we can perform join on these views using spark.sql().Syntax:

spark.sql("select * from dataframe1, dataframe2 where dataframe1.column_name == dataframe2.column_name ")

where,

- dataframe1 is the first view dataframe

- dataframe2 is the second view dataframe

- column_name is the column to be joined

Example 1: In this example, we are going to join two dataframes based on the ID column.

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

d1 = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

col1 = ['ID', 'NAME', 'COMPANY']

df1 = spark.createDataFrame(d1, col1)

d2 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

col2 = ['ID', 'SALARY', 'DEPT']

df2 = spark.createDataFrame(d2, col2)

# Create temp views

df1.createOrReplaceTempView("student")

df2.createOrReplaceTempView("department")

# SQL join on ID

spark.sql("""

SELECT * FROM student

JOIN department

ON student.ID = department.ID

""").show()

Output

We can also perform the above joins using this SQL expression. Syntax:

spark.sql("select * from dataframe1 JOIN_TYPE dataframe2 ON dataframe1.column_name == dataframe2.column_name ")

where, JOIN_TYPE refers to above all types of joins

Python

import pyspark

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('sparkdf').getOrCreate()

# employee data

data = [["1", "sravan", "company 1"],

["2", "ojaswi", "company 1"],

["3", "rohith", "company 2"],

["4", "sridevi", "company 1"],

["5", "bobby", "company 1"]]

cols = ['ID', 'NAME', 'Company']

df1 = spark.createDataFrame(data, cols)

# salary data

data1 = [["1", "45000", "IT"],

["2", "145000", "Manager"],

["6", "45000", "HR"],

["5", "34000", "Sales"]]

cols1 = ['ID', 'salary', 'department']

df2 = spark.createDataFrame(data1, cols1)

# create temp views

df1.createOrReplaceTempView("student")

df2.createOrReplaceTempView("department")

# SQL inner join on ID

spark.sql("SELECT * FROM student INNER JOIN department ON student.ID == department.ID").show()

Output

Similar Reads

Interview Preparation

Practice @Geeksforgeeks

Data Structures

Algorithms

Programming Languages

Web Technologies

Computer Science Subjects

Data Science & ML

Tutorial Library

GATE CS