Stochastic Gradient Descent In R

Last Updated: 23 Jul, 2025

Gradient Descent is an iterative optimization method that searches for the minimum of an objective function (or, with the sign flipped, its maximum). It is one of the most widely used methods for adjusting a model's parameters to reduce a cost function in machine learning. In this article, we cover the concept of Stochastic Gradient Descent (SGD) and its implementation in the R programming language.

Introduction to Stochastic Gradient Descent

Stochastic Gradient Descent is an iterative optimization algorithm used to minimize a loss function by adjusting the parameters of a model. Unlike traditional (batch) Gradient Descent, which computes the gradient over the entire dataset for every update, SGD updates the model parameters using a single randomly chosen data point or a small subset of data points. This randomness introduces noise into the optimization process, but each update is much cheaper, which often lets SGD make progress faster and handle large datasets more efficiently.
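As a compact summary of the difference described above, the two update rules can be written side by side. The notation is chosen here for illustration and is not taken from the article: θ denotes the model parameters, η the learning rate, ℓ_i the loss on the i-th example, and n the number of examples.

```latex
% Batch gradient descent: average gradient over all n examples
\theta \leftarrow \theta - \eta \, \frac{1}{n} \sum_{i=1}^{n} \nabla_\theta \ell_i(\theta)

% Stochastic gradient descent: gradient of one randomly chosen example i
\theta \leftarrow \theta - \eta \, \nabla_\theta \ell_i(\theta),
\qquad i \sim \mathrm{Uniform}\{1, \dots, n\}
```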

Key Features of SGD

SGD is commonly used to update weights and biases during training. The algorithm calculates the gradient of the loss function with respect to each parameter and adjusts the parameters in the opposite direction of the gradient to reduce the loss.

- Frequent Updates: Parameters are updated more frequently, allowing faster iteration through the parameter space.

- Reduced Computation per Update: Each update requires less computation, making SGD suitable for large datasets.

- Potential for Faster Convergence: SGD can converge faster than batch gradient descent with appropriate hyperparameter tuning.
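To make the "reduced computation per update" point concrete, here is a minimal sketch that computes one full-batch gradient and one single-point gradient for the same toy problem. The data, the one-parameter model `y = m * x`, and all variable names are assumptions made for this illustration only.

```r
# Toy data (assumed for illustration): y is roughly 3 * x
set.seed(1)
x <- runif(1000)
y <- 3 * x + rnorm(1000, sd = 0.1)

m <- 0  # current slope estimate (no intercept, to keep the sketch short)

# Full-batch gradient of the MSE with respect to m: touches all 1000 points
batch_grad <- mean(-2 * x * (y - m * x))

# Stochastic gradient: touches a single randomly chosen point
i <- sample(length(x), 1)
stoch_grad <- -2 * x[i] * (y[i] - m * x[i])

# Averaging the per-point gradients over the dataset recovers the batch
# gradient, so a single-point gradient is a noisy estimate of it
per_point <- -2 * x * (y - m * x)
all.equal(mean(per_point), batch_grad)
```

The single-point gradient costs one multiplication chain instead of a pass over the whole dataset, at the price of noise in its value.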

Setting Up and Running SGD in R

To implement SGD in R, we need to:

- Set up the Environment and Libraries: Load necessary libraries and set up the environment.

- Define the Model and the Loss Function: Specify the model architecture and choose an appropriate loss function.

- Implement the SGD Algorithm: Develop the SGD algorithm to iteratively update model parameters based on sampled data points.

- Visualize the Results: Plot the model's performance metrics to assess convergence and accuracy.

Implementing Stochastic Gradient Descent (SGD) in R

Implementing Stochastic Gradient Descent (SGD) in R involves a few steps: defining a model, choosing a loss function, and updating the model parameters iteratively based on randomly sampled data points. In this example, we work through a simple linear regression problem and show how to apply SGD to optimize the model parameters.

Step 1: Define the Model

We start by defining the linear model in R.

R

# Define the linear model: y = mx + c
linear_model <- function(x, m, c) {
  return(m * x + c)
}

Step 2: Choose the Loss Function

For linear regression, a common loss function is the Mean Squared Error (MSE), which measures the average squared difference between the predicted and actual values. We define the MSE function as follows:

R

# Mean Squared Error (MSE) loss function
mse_loss <- function(y_pred, y_actual) {
  return(mean((y_pred - y_actual)^2))
}
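The per-example gradients that SGD needs for this loss follow from the chain rule. The derivation below is a short sketch using the article's symbols m and c; the subscript i for a single sampled data point and the hat notation for the prediction are added here for clarity.

```latex
% Squared-error loss on a single example (x_i, y_i),
% with prediction \hat{y}_i = m x_i + c
\ell_i(m, c) = \bigl(y_i - (m x_i + c)\bigr)^2

% Partial derivatives (chain rule)
\frac{\partial \ell_i}{\partial m} = -2\, x_i \,\bigl(y_i - \hat{y}_i\bigr),
\qquad
\frac{\partial \ell_i}{\partial c} = -2\, \bigl(y_i - \hat{y}_i\bigr)
```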

Step 3: Implement Stochastic Gradient Descent

Now, let's implement the Stochastic Gradient Descent algorithm to optimize the model parameters m and c:

R

# Stochastic Gradient Descent (SGD) algorithm
sgd <- function(x, y, m_init, c_init, learning_rate, epochs) {
  m <- m_init
  c <- c_init
  n <- length(x)
  for (epoch in 1:epochs) {
    for (i in 1:n) {
      # Randomly sample a data point
      index <- sample(1:n, 1)
      x_i <- x[index]
      y_i <- y[index]
      # Compute the gradient of the loss function
      y_pred <- linear_model(x_i, m, c)
      grad_m <- -2 * x_i * (y_i - y_pred)
      grad_c <- -2 * (y_i - y_pred)
      # Update model parameters
      m <- m - learning_rate * grad_m
      c <- c - learning_rate * grad_c
    }
  }
  return(list("slope" = m, "intercept" = c))
}
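One way to gain confidence in hand-derived gradients such as `grad_m` and `grad_c` is to compare them with a finite-difference approximation. The sketch below does this for a single data point. The helper names (`point_loss`, `analytic_grad`, `numeric_grad`), the step size `h`, and the test values are all hypothetical choices for this check, and the intercept is called `intercept` rather than `c` to avoid confusion with R's `c()` function.

```r
# Single-example squared-error loss for the model y = m * x + intercept
point_loss <- function(m, intercept, x_i, y_i) {
  (y_i - (m * x_i + intercept))^2
}

# Analytic gradients, using the same formulas as the SGD loop
analytic_grad <- function(m, intercept, x_i, y_i) {
  y_pred <- m * x_i + intercept
  c(grad_m = -2 * x_i * (y_i - y_pred),
    grad_c = -2 * (y_i - y_pred))
}

# Central finite-difference approximation of the same gradients
numeric_grad <- function(m, intercept, x_i, y_i, h = 1e-6) {
  c(grad_m = (point_loss(m + h, intercept, x_i, y_i) -
              point_loss(m - h, intercept, x_i, y_i)) / (2 * h),
    grad_c = (point_loss(m, intercept + h, x_i, y_i) -
              point_loss(m, intercept - h, x_i, y_i)) / (2 * h))
}

# Arbitrary parameter values and data point, chosen only for the check
a <- analytic_grad(m = 0.5, intercept = 1, x_i = 3, y_i = 10)
g <- numeric_grad(m = 0.5, intercept = 1, x_i = 3, y_i = 10)
all.equal(a, g, tolerance = 1e-6)
```

If the two vectors disagree beyond the tolerance, the analytic formula (or its sign) is the first thing to re-check.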

Step 4: Generate Synthetic Data and Apply SGD

Let's generate some synthetic data and use SGD to fit the linear model:

R

# Generate synthetic data
set.seed(123)
x <- 1:100
y <- 2 * x + 5 + rnorm(100, sd = 20)

# Initialize model parameters and hyperparameters
m_init <- 0
c_init <- 0
learning_rate <- 0.0001
epochs <- 1000

# Apply SGD to optimize the model parameters
model <- sgd(x, y, m_init, c_init, learning_rate, epochs)

# Print the optimized model parameters
print(model)

Output:

$slope
[1] 2.234376

$intercept
[1] 4.459138

This example demonstrates how to implement Stochastic Gradient Descent in R for a simple linear regression problem. By iteratively updating the model parameters based on randomly sampled data points, SGD efficiently optimizes the model to fit the given dataset.
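A useful sanity check for an SGD fit is to compare it with the ordinary least squares solution, which base R computes in closed form with `lm()`. The sketch below recreates the synthetic data from Step 4 and fits it that way. This comparison is an addition for illustration, and because SGD is noisy its estimates should land near these coefficients rather than match them exactly.

```r
# Recreate the synthetic data from Step 4
set.seed(123)
x <- 1:100
y <- 2 * x + 5 + rnorm(100, sd = 20)

# Ordinary least squares fit via base R's lm()
ols <- lm(y ~ x)
coef(ols)  # named vector with entries "(Intercept)" and "x"
```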

Step 5: Visualizing the Results

To visualize how well the model fits the data, we can plot the original data points and the fitted regression line:

R

# Load ggplot2 library
library(ggplot2)

# Predicted values based on the optimized model
y_pred <- linear_model(x, model$slope, model$intercept)

# Plot the original data and the fitted line
data <- data.frame(x = x, y = y, y_pred = y_pred)
ggplot(data, aes(x = x, y = y)) +
  geom_point(color = 'blue', alpha = 0.5) +
  geom_line(aes(y = y_pred), color = 'red') +
  labs(title = "Linear Regression with SGD",
       x = "x",
       y = "y") +
  theme_minimal()

Output:

Stochastic Gradient Descent In R

Understanding Key Hyperparameters

SGD's performance and convergence depend heavily on the choice of its hyperparameters. Let us look at the roles of the learning rate, batch size, and number of epochs, along with some recommendations for choosing them.

1. Learning Rate

The learning rate controls the size of the steps taken towards the minimum of the loss function. It’s crucial to set an appropriate learning rate:

- Too High: May cause the algorithm to diverge or overshoot the optimal solution.

- Too Low: Results in slow convergence and, on non-convex losses, may leave the optimizer stuck in a poor local minimum or plateau.

- Recommendation: Start with a small learning rate (e.g., 0.01) and adjust based on the observed convergence behavior.
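The three regimes above can be seen on a deliberately tiny problem. The sketch below runs plain (non-stochastic) gradient descent on the toy function f(w) = w^2, whose gradient is 2w. The function `run_gd`, the starting point, and the three learning rates are assumptions picked for illustration, not values from the article.

```r
# Minimize f(w) = w^2 with plain gradient descent; the gradient is 2 * w
run_gd <- function(learning_rate, steps = 20, w_init = 1) {
  w <- w_init
  for (s in seq_len(steps)) {
    w <- w - learning_rate * 2 * w
  }
  w
}

run_gd(0.001)  # too low: w has barely moved from 1 after 20 steps
run_gd(0.1)    # reasonable: w ends close to the minimum at 0
run_gd(1.1)    # too high: |w| grows with every step (divergence)
```

Each step multiplies w by (1 - 2 * learning_rate), so the iterates shrink toward 0 only when that factor has absolute value below 1.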

2. Batch Size

The batch size determines the number of data points used to compute the gradient in each update.

- Stochastic (Batch Size = 1): Each update uses a single data point, introducing more noise but faster updates.

- Mini-Batch: Uses a small subset of data, balancing noise and computational efficiency.

- Full Batch: Uses the entire dataset, reducing noise but increasing computation time per update.

- Recommendation: Mini-batch sizes (e.g., 32 or 64) are commonly used for a good balance between noise and efficiency.
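The article's own implementation uses a batch size of 1. As a minimal sketch of the mini-batch variant for the same linear model, the function below shuffles the data once per epoch and averages the gradient over each batch. The function name `minibatch_sgd`, its defaults, and the toy data are assumptions for illustration.

```r
# Mini-batch SGD for y = m * x + intercept (illustrative names and defaults)
minibatch_sgd <- function(x, y, learning_rate = 0.1, epochs = 200,
                          batch_size = 32) {
  m <- 0
  intercept <- 0
  n <- length(x)
  for (epoch in seq_len(epochs)) {
    idx <- sample(n)  # shuffle the indices once per epoch
    batches <- split(idx, ceiling(seq_along(idx) / batch_size))
    for (batch in batches) {
      residual <- y[batch] - (m * x[batch] + intercept)
      # Gradients of the MSE, averaged over the mini-batch
      grad_m <- mean(-2 * x[batch] * residual)
      grad_c <- mean(-2 * residual)
      m <- m - learning_rate * grad_m
      intercept <- intercept - learning_rate * grad_c
    }
  }
  list(slope = m, intercept = intercept)
}

# Toy data with x on a [0, 1] scale so a learning rate of 0.1 is stable
set.seed(1)
x <- runif(200)
y <- 2 * x + 5 + rnorm(200, sd = 0.1)
fit <- minibatch_sgd(x, y)
fit
```

Setting `batch_size = 1` gives single-point SGD with shuffling, and `batch_size = length(x)` gives full-batch gradient descent.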

3. Epochs

An epoch is one complete pass through the entire dataset. The number of epochs determines how many times the algorithm will iterate over the data.

- Too Few: The model may underfit the data.

- Too Many: Can lead to overfitting, where the model learns the noise in the data.

- Recommendation: Start with 100-500 epochs and monitor the performance. Use early stopping if the model stops improving.
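Monitoring and early stopping can be sketched as follows: record the MSE after each epoch and stop once it has failed to improve by more than a small threshold for several epochs in a row. The function name `sgd_early_stop`, the parameters `tol` and `patience`, and the toy data are hypothetical choices for this example.

```r
# SGD for y = m * x + intercept that records the MSE after every epoch and
# stops when the loss has not improved by more than `tol` for `patience`
# consecutive epochs (illustrative names and defaults)
sgd_early_stop <- function(x, y, learning_rate, max_epochs,
                           tol = 1e-4, patience = 5) {
  m <- 0
  intercept <- 0
  n <- length(x)
  best_loss <- Inf
  stale <- 0
  history <- numeric(0)
  for (epoch in seq_len(max_epochs)) {
    for (i in sample(n)) {  # one shuffled pass over the data
      residual <- y[i] - (m * x[i] + intercept)
      # Same update as m <- m - learning_rate * (-2 * x[i] * residual)
      m <- m + learning_rate * 2 * x[i] * residual
      intercept <- intercept + learning_rate * 2 * residual
    }
    loss <- mean((y - (m * x + intercept))^2)
    history <- c(history, loss)
    if (best_loss - loss > tol) {
      best_loss <- loss
      stale <- 0
    } else {
      stale <- stale + 1
      if (stale >= patience) break
    }
  }
  list(slope = m, intercept = intercept, loss_history = history)
}

set.seed(1)
x <- runif(200)
y <- 2 * x + 5 + rnorm(200, sd = 0.1)
fit <- sgd_early_stop(x, y, learning_rate = 0.01, max_epochs = 500)
length(fit$loss_history)  # number of epochs actually run
```

Plotting `fit$loss_history` against the epoch number gives a simple convergence curve.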

Let's now work through a second example that applies these hyperparameters: logistic regression trained with SGD in R on a synthetic two-class dataset.

Step 1: Load Necessary Libraries and Generate Synthetic Data

First, we install (if necessary) and load the required package, then generate the data.

R

# Load necessary libraries (if not already installed)
if (!requireNamespace("ggplot2", quietly = TRUE)) install.packages("ggplot2")
library(ggplot2)

# Set seed for reproducibility
set.seed(42)

# Generate synthetic data
n <- 100
x1 <- runif(n, min = -10, max = 10)
x2 <- runif(n, min = -10, max = 10)
y <- ifelse(x1 + x2 + rnorm(n) > 0, 1, 0)

# Create a data frame
data <- data.frame(x1 = x1, x2 = x2, y = y)

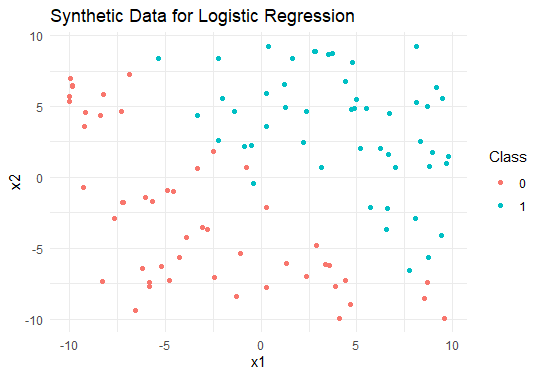

ggplot2 is loaded for data visualization. Synthetic data is generated: x1 and x2 are random numbers, and y is generated based on whether x1 + x2 + rnorm() is greater than 0, assigning 1 for true and 0 for false.

Step 2: Visualize the Data

Now we will visualize the data that we created.

R

# Plot the data
ggplot(data, aes(x = x1, y = x2, color = factor(y))) +
  geom_point() +
  labs(title = "Synthetic Data for Logistic Regression",
       x = "x1",
       y = "x2",
       color = "Class") +
  theme_minimal()

Output:

Synthetic Data for Logistic Regression

Step 3: Define the Logistic Function

Now we will define the Logistic Function for our model.

R

# Logistic function
logistic <- function(z) {
  return(1 / (1 + exp(-z)))
}

The logistic function is defined here, which converts input z into a value between 0 and 1.
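A quick way to see this behavior is to evaluate the function at a few inputs and compare it with base R's built-in `plogis()`, which computes the same quantity. The input vector `z` below is an arbitrary choice for illustration.

```r
logistic <- function(z) 1 / (1 + exp(-z))

z <- c(-5, 0, 5)
logistic(z)                        # near 0, exactly 0.5, and near 1
all.equal(logistic(z), plogis(z))  # agrees with base R's plogis()
```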

Step 4: Compute Loss and Gradient

Now we will compute the gradients of the loss.

R

# Compute the loss and gradient
compute_loss_and_gradient <- function(x1, x2, y, w, b) {
  z <- w[1] * x1 + w[2] * x2 + b
  predictions <- logistic(z)
  error <- predictions - y
  # Gradients
  grad_w1 <- mean(error * x1)
  grad_w2 <- mean(error * x2)
  grad_b <- mean(error)
  return(list(grad_w1 = grad_w1, grad_w2 = grad_w2, grad_b = grad_b))
}

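This function computes the prediction error (the difference between the predicted probabilities and the actual labels) and uses it to form the gradients of the log loss (binary cross-entropy) with respect to the weights w and the bias b. Despite its name, it returns only the gradients, not the loss value itself.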
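If the loss value itself is wanted, for example to monitor training, it can be computed separately. The sketch below is one possible helper for the binary cross-entropy whose gradients appear above. The name `log_loss` and the clipping constant `eps` are assumptions introduced here, not part of the article's code.

```r
logistic <- function(z) 1 / (1 + exp(-z))

# Binary cross-entropy (log loss). `eps` clips the predictions away from
# 0 and 1 so that log() never receives 0. Both names are illustrative.
log_loss <- function(x1, x2, y, w, b, eps = 1e-12) {
  p <- logistic(w[1] * x1 + w[2] * x2 + b)
  p <- pmin(pmax(p, eps), 1 - eps)
  -mean(y * log(p) + (1 - y) * log(1 - p))
}

# With zero weights and bias every prediction is 0.5, so the loss is log(2)
log_loss(x1 = c(1, -2), x2 = c(0.5, 3), y = c(1, 0), w = c(0, 0), b = 0)
```

Differentiating this loss with respect to b gives the mean of (prediction - y), which is the `grad_b` expression used in the gradient function.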

Step 5: Implement SGD for Logistic Regression

Finally, we implement SGD for logistic regression in R.

R

# SGD for logistic regression
sgd_logistic <- function(data, learning_rate, epochs) {
  w <- c(0, 0) # Initial weights
  b <- 0 # Initial bias
  for (epoch in 1:epochs) {
    for (i in 1:nrow(data)) {
      index <- sample(1:nrow(data), 1)
      x1 <- data$x1[index]
      x2 <- data$x2[index]
      y <- data$y[index]
      gradients <- compute_loss_and_gradient(x1, x2, y, w, b)
      # Update weights and bias using gradients and learning rate
      w[1] <- w[1] - learning_rate * gradients$grad_w1
      w[2] <- w[2] - learning_rate * gradients$grad_w2
      b <- b - learning_rate * gradients$grad_b
    }
  }
  return(list(weights = w, bias = b))
}

This function performs stochastic gradient descent:

- Randomly samples data points.

- Computes gradients using compute_loss_and_gradient.

- Updates weights w and bias b iteratively based on gradients and a specified learning rate.

Step 6: Train the Model

Now we will train our model.

R

# Train the logistic regression model
learning_rate <- 0.01
epochs <- 1000
model <- sgd_logistic(data, learning_rate, epochs)

# Print the optimized model parameters
print(model)

Output:

$weights
[1] 1.850376 1.841270

$bias
[1] -0.8065149
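The printed parameters can be turned into class predictions and a training accuracy. The sketch below is an added check, not part of the original walkthrough: it recreates the synthetic data from Step 1 and plugs in the weights and bias copied from the output above. The 0.5 probability threshold is an assumed convention, and a fresh training run may produce slightly different parameters.

```r
# Recreate the synthetic data from Step 1
set.seed(42)
n <- 100
x1 <- runif(n, min = -10, max = 10)
x2 <- runif(n, min = -10, max = 10)
y <- ifelse(x1 + x2 + rnorm(n) > 0, 1, 0)

# Parameters copied from the printed output above
w <- c(1.850376, 1.841270)
b <- -0.8065149

# Predict class 1 when the estimated probability exceeds 0.5
prob <- 1 / (1 + exp(-(w[1] * x1 + w[2] * x2 + b)))
pred <- as.integer(prob > 0.5)
accuracy <- mean(pred == y)
accuracy
```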

Step 7: Visualize the Decision Boundary

Next, we visualize the decision boundary of our model.

R

# Visualize the decision boundary

# Helper returning x2 on the boundary for a given x1 (not used by the plot
# below, which passes the same slope and intercept to geom_abline directly)
decision_boundary <- function(x1) {
  -(model$weights[1] * x1 + model$bias) / model$weights[2]
}

ggplot(data, aes(x = x1, y = x2, color = factor(y))) +
  geom_point() +
  geom_abline(intercept = -model$bias / model$weights[2],
              slope = -model$weights[1] / model$weights[2],
              color = "red", linetype = "dashed") +
  labs(title = "Logistic Regression with SGD",
       x = "x1",
       y = "x2",
       color = "Class") +
  theme_minimal()

Output:

Stochastic Gradient Descent In R

The output shows the optimized weights and bias of the logistic regression model, and the plot visualizes the decision boundary separating the two classes.
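The slope and intercept passed to `geom_abline` in Step 7 follow from setting the model's linear score to zero, which is where the predicted probability equals 0.5. A short derivation, writing w_1, w_2 and b for `model$weights[1]`, `model$weights[2]` and `model$bias`, and assuming w_2 is non-zero:

```latex
% Decision boundary: predicted probability 0.5, i.e. z = 0
w_1 x_1 + w_2 x_2 + b = 0
\quad\Longrightarrow\quad
x_2 = -\frac{w_1}{w_2}\, x_1 - \frac{b}{w_2}
```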

Advantages of the Stochastic Gradient Descent Method

Stochastic Gradient Descent (SGD) offers several advantages over traditional optimization methods, particularly in the context of large-scale machine learning and deep learning tasks.

- Efficiency in handling large datasets

- Faster convergence due to the use of mini-batches

- Ability to escape local minima

- Handling Non-Convex Loss Functions

- Memory Efficiency

Problems with the Stochastic Gradient Descent Method

While Stochastic Gradient Descent (SGD) offers several advantages, it also has some inherent limitations and challenges that practitioners need to be aware of.

- Sensitivity to Learning Rate Selection

- Noisy Updates and Fluctuations in Loss Function

- Potential Divergence with High Learning Rates

- Lack of Global Convergence Guarantee

- Difficulty in Reproducing Results
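The reproducibility issue in the last point comes from the random sampling inside SGD and can be largely controlled in R by fixing the random seed before each run, as the examples in this article do with `set.seed()`. A minimal sketch, in which the seed value and the sample sizes are arbitrary:

```r
# Two runs with the same seed draw the same random indices
set.seed(2024)
first_run <- sample(100, 5)

set.seed(2024)
second_run <- sample(100, 5)

identical(first_run, second_run)  # TRUE
```

Because the sampled indices are identical, an SGD run that starts from the same seed and the same initial parameters follows the same sequence of updates.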

Conclusion

Stochastic Gradient Descent is a powerful optimization algorithm widely used in machine learning and deep learning applications. In this article, we discussed the concept of SGD, its implementation in R with examples, and its advantages and drawbacks. By understanding how SGD works and its implications, practitioners can effectively apply it to train models and improve performance.
