Thread in Operating System

Last Updated: 08 Sep, 2025

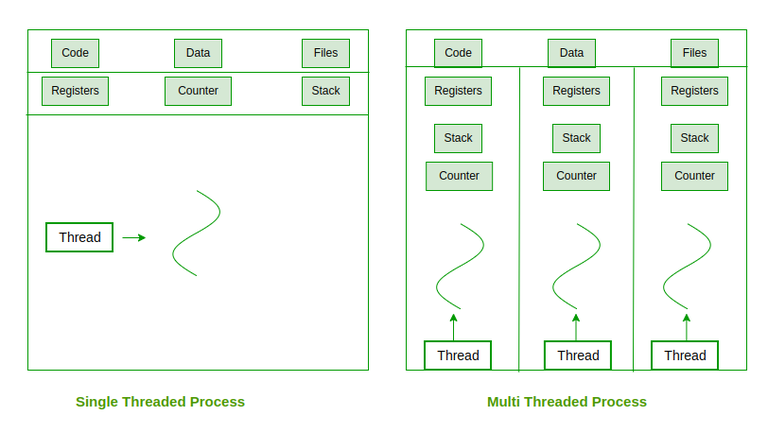

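A thread is a single sequential flow of execution within a process. Threads are also called lightweight processes because they have some of the properties of processes (each one is scheduled and runs independently) but are cheaper to create and switch between. Each thread belongs to exactly one process.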

- In an operating system that supports multithreading, a process can consist of many threads.

- All threads belonging to the same process share the code section, data section, and OS resources (e.g. open files and signal handlers), but each thread has its own thread ID, program counter, register set, and stack. The OS keeps track of this per-thread state in a Thread Control Block (TCB).
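The sharing rules above can be seen in a minimal Python sketch (assuming CPython, where each `threading.Thread` runs on its own OS thread). The module-level list `results` is one object shared by all threads, while the local variable `total` inside the illustrative function `partial_sum` exists separately on each thread's own stack.

```python
import threading

# Module-level data: one copy, shared by every thread in the process.
results = [0, 0, 0]


def partial_sum(index: int, n: int) -> None:
    """Sum 1..n and store the result in this thread's slot of `results`."""
    total = 0  # local variable: each thread has its own copy on its own stack
    for i in range(1, n + 1):
        total += i
    results[index] = total  # each thread writes a different slot, so no lock is needed here


def run_threads() -> list[int]:
    threads = [
        threading.Thread(target=partial_sum, args=(k, (k + 1) * 10))
        for k in range(3)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish before reading the results
    return results


print(run_threads())  # [55, 210, 465]
```

Because all three threads see the same `results` list, the main thread can read their output directly, without any inter-process communication.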

Why Do We Need Threads (and Their Benefits)

Threads are needed in modern operating systems and applications because they:

- Improve Application Performance: On a multi-core system, threads can run in parallel, so work that can be split across threads finishes sooner.

- Increase Responsiveness: While one thread is busy (for example, waiting on I/O or running a long computation), another can keep responding to the user.

- Enable Concurrency: Multiple things can happen at once, such as background saving, formatting, and user input in Microsoft Word or Google Docs.

- Simplify Communication: Since threads share the same memory space, they can directly exchange data without special inter-process communication mechanisms.

- Support Prioritization: Like processes, threads can be assigned priorities, and a priority-based scheduler runs higher-priority threads ahead of lower-priority ones.

- Efficient Context Switching: Switching between threads of the same process takes less time than switching between processes because the threads share one address space, so the memory mappings do not have to change.

- Better Multiprocessor Utilization: Threads from the same process can run on different processors simultaneously, speeding up execution.

- Resource Sharing: Threads within a process share code, data, and files, which saves resources.

- Higher Throughput: Dividing a process into multiple threads allows more jobs to finish per unit time.

- Synchronization Support: Since threads share resources, synchronization tools (locks, semaphores, etc.) ensure safe access to shared data.

- Thread Management: Each thread has a Thread Control Block (TCB) that stores its state, register values, and scheduling info for context switching.
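Shared memory and synchronization go together. In the sketch below (Python; the names `SharedCounter` and `count_with_threads` are illustrative, not from any library), several threads update one counter, and `threading.Lock` makes each read-modify-write step happen as a unit so that no update is lost.

```python
import threading


class SharedCounter:
    """A counter that several threads of the same process update."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # `self.value += 1` is a read-modify-write sequence, not one atomic step;
        # holding the lock stops two threads from interleaving inside it.
        with self._lock:
            self.value += 1


def count_with_threads(num_threads: int = 4, per_thread: int = 10_000) -> int:
    counter = SharedCounter()  # one object, visible to every thread

    def work() -> None:
        for _ in range(per_thread):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


print(count_with_threads())  # 40000
```

The threads exchange data simply by touching the same object; the lock is what keeps that sharing safe.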

Components of Threads

These are the basic components of a thread.

- Stack Space: Stores local variables, function calls, and return addresses specific to the thread.

- Register Set: Holds temporary data and intermediate results for the thread's execution.

- Program Counter: Holds the address of the next instruction the thread will execute.
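The following is a simplified, hypothetical model (not how any real kernel lays out its data) of a TCB and of what a context switch does with these components: the CPU's program counter and registers are saved into the outgoing thread's TCB, and the incoming thread's saved values are loaded. The stack itself stays where it is in memory; switching the stack pointer register (`sp` here) is enough to switch stacks. The classes `CPU` and `ThreadControlBlock` and the function `context_switch` are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class CPU:
    """Toy CPU state: a program counter plus named registers (including 'sp')."""
    pc: int = 0
    registers: dict[str, int] = field(default_factory=dict)


@dataclass
class ThreadControlBlock:
    """Hypothetical per-thread record the OS keeps for context switching."""
    thread_id: int
    program_counter: int = 0
    registers: dict[str, int] = field(default_factory=dict)  # 'sp' points at this thread's stack
    state: str = "ready"


def context_switch(cpu: CPU, current: ThreadControlBlock, nxt: ThreadControlBlock) -> None:
    # 1. Save the running thread's execution context into its TCB.
    current.program_counter = cpu.pc
    current.registers = dict(cpu.registers)
    current.state = "ready"
    # 2. Load the next thread's saved context onto the CPU.
    cpu.pc = nxt.program_counter
    cpu.registers = dict(nxt.registers)
    nxt.state = "running"


cpu = CPU(pc=100, registers={"r0": 7, "sp": 0x1000})
t1 = ThreadControlBlock(thread_id=1, state="running")
t2 = ThreadControlBlock(thread_id=2, program_counter=200, registers={"r0": 42, "sp": 0x2000})
context_switch(cpu, t1, t2)
print(cpu.pc, cpu.registers)  # 200 {'r0': 42, 'sp': 8192}
```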

Types of Threads in an Operating System

There are two types of threads, described below.

- User Level Thread

- Kernel Level Thread

User-Level Threads (ULTs)

- Managed entirely in user space using a thread library; the kernel is unaware of them.

- Switching between ULTs is fast because only the program counter, registers, and stack pointer need to be saved and restored, and no switch into the kernel is required.

- Do not require system calls for creation or management, making them lightweight.

- Blocking Limitation: If one thread makes a blocking system call, the entire process (all threads) is blocked.

- Scheduling is done by the application itself, which may not be as efficient as kernel-level scheduling.

- Cannot fully utilize multiprocessor systems because the kernel schedules processes, not individual user-level threads.
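User-level threading can be imitated entirely in user space. In this minimal Python sketch, each hypothetical "user thread" is a generator, and a small round-robin scheduler written in the application decides which one runs next; the kernel only ever sees the single OS thread running the loop. The sketch assumes cooperative scheduling (a thread runs until it yields); real ULT libraries may schedule differently.

```python
from collections import deque
from collections.abc import Iterator


def user_thread(name: str, steps: int) -> Iterator[str]:
    """A toy user-level thread: does one 'step' of work per turn."""
    for i in range(steps):
        # `yield` hands control back to the user-space scheduler; no system call is made.
        yield f"{name}{i}"


def run_round_robin(threads: list[Iterator[str]]) -> list[str]:
    """User-space scheduler: give each ready thread one turn, in order."""
    ready = deque(threads)
    trace: list[str] = []
    while ready:
        t = ready.popleft()
        try:
            trace.append(next(t))  # resume the thread until its next yield
        except StopIteration:
            continue  # thread finished; do not requeue it
        ready.append(t)  # back of the ready queue
    return trace


print(run_round_robin([user_thread("A", 3), user_thread("B", 2)]))
# ['A0', 'B0', 'A1', 'B1', 'A2']
```

The same sketch shows the blocking limitation: if one generator called a blocking function such as `time.sleep(5)`, the whole loop, and therefore every user thread, would stall, because there is only one kernel-schedulable thread underneath.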

Kernel-Level Threads (KLTs)

- Managed directly by the operating system kernel; each thread has an entry in the kernel’s thread table.

- The kernel schedules each thread independently, allowing true parallel execution on multiple CPUs/cores.

- Handles blocking system calls efficiently; if one thread blocks, the kernel can run another thread from the same process.

- Provides better load balancing across processors since the kernel controls all threads.

- Context switching is slower compared to ULTs because it requires switching between user mode and kernel mode.

- Implementation is more complex and requires frequent interaction with the kernel.

- Large numbers of threads may add extra load on the kernel scheduler, potentially affecting performance.
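In CPython, each `threading.Thread` is backed by a native OS thread that the kernel schedules (the 1:1 model). The sketch below, built around the illustrative function `blocking_demo`, blocks one thread in `Event.wait()` while a second thread of the same process keeps running and eventually wakes it; `threading.get_native_id()` (Python 3.8+) returns the kernel's ID for each thread. One caveat: CPython's global interpreter lock lets only one thread execute Python bytecode at a time (free-threaded builds aside), so this demonstrates blocking behavior, not CPU parallelism.

```python
import threading


def blocking_demo(timeout: float = 5.0) -> tuple[bool, bool]:
    """Return (blocked thread was woken, the two threads had different kernel IDs)."""
    wake_up = threading.Event()
    blocker_started = threading.Event()
    native_ids: dict[str, int] = {}

    def blocker() -> None:
        native_ids["blocker"] = threading.get_native_id()
        blocker_started.set()
        # Blocks this thread until `wake_up` is set (or the timeout expires).
        wake_up.wait(timeout)

    def worker() -> None:
        blocker_started.wait(timeout)  # let `blocker` get going first
        native_ids["worker"] = threading.get_native_id()
        # The kernel can schedule this thread even though `blocker` is waiting.
        wake_up.set()

    threads = [threading.Thread(target=blocker), threading.Thread(target=worker)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return wake_up.is_set(), native_ids["blocker"] != native_ids["worker"]


print(blocking_demo())  # (True, True)
```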

For more, refer to Thread and its types.

Difference Between Process and Thread

The primary difference is that threads within the same process run in a shared memory space, while processes run in separate memory spaces. Unlike processes, threads are not independent of one another: a thread shares its code section, data section, and OS resources (such as open files and signals) with the other threads of its process. Like a process, however, a thread has its own program counter (PC), register set, and stack space.
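The shared-versus-separate memory difference can be demonstrated directly. This sketch assumes a POSIX system, since `os.fork()` is not available on Windows: a thread's write to a global variable is visible to the main thread, but a forked child process modifies only its own copy. The child reports the small value it saw through its exit code, purely as a convenience for the example; the function names are illustrative.

```python
import os
import threading

shared_value = 0  # a global: lives in the process's data section


def increment() -> None:
    global shared_value
    shared_value += 1


def value_after_thread() -> int:
    """Run increment() in another thread of this process; return what we see."""
    global shared_value
    shared_value = 0
    t = threading.Thread(target=increment)
    t.start()
    t.join()
    return shared_value  # 1: the thread wrote to the same memory


def values_after_child_process() -> tuple[int, int]:
    """Run increment() in a forked child (POSIX only).

    Returns (value the child saw, value the parent sees afterwards).
    """
    global shared_value
    shared_value = 0
    pid = os.fork()
    if pid == 0:
        # Child: a separate process with its own copy of the parent's memory.
        increment()
        os._exit(shared_value)  # report the child's value via its exit code
    _, status = os.waitpid(pid, 0)
    return os.waitstatus_to_exitcode(status), shared_value


print(value_after_thread())  # 1
if hasattr(os, "fork"):
    print(values_after_child_process())  # (1, 0)
```

The child does increment the variable, but only in its own address space, so the parent's copy stays at 0.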

For more, refer to Difference Between Process and Thread.
