The growth of digital platforms has led to an explosion of data. Our aim at Orfium is to process this ever-increasing volume of information effectively. We do this by building and deploying cloud services designed to accurately track how music is used and how music rights are managed, and to ensure rightful payments to artists and rights holders.

In this talk, we explore the techniques we use to bring our AI models to production, build and maintain our services, and overcome cost and scale barriers.

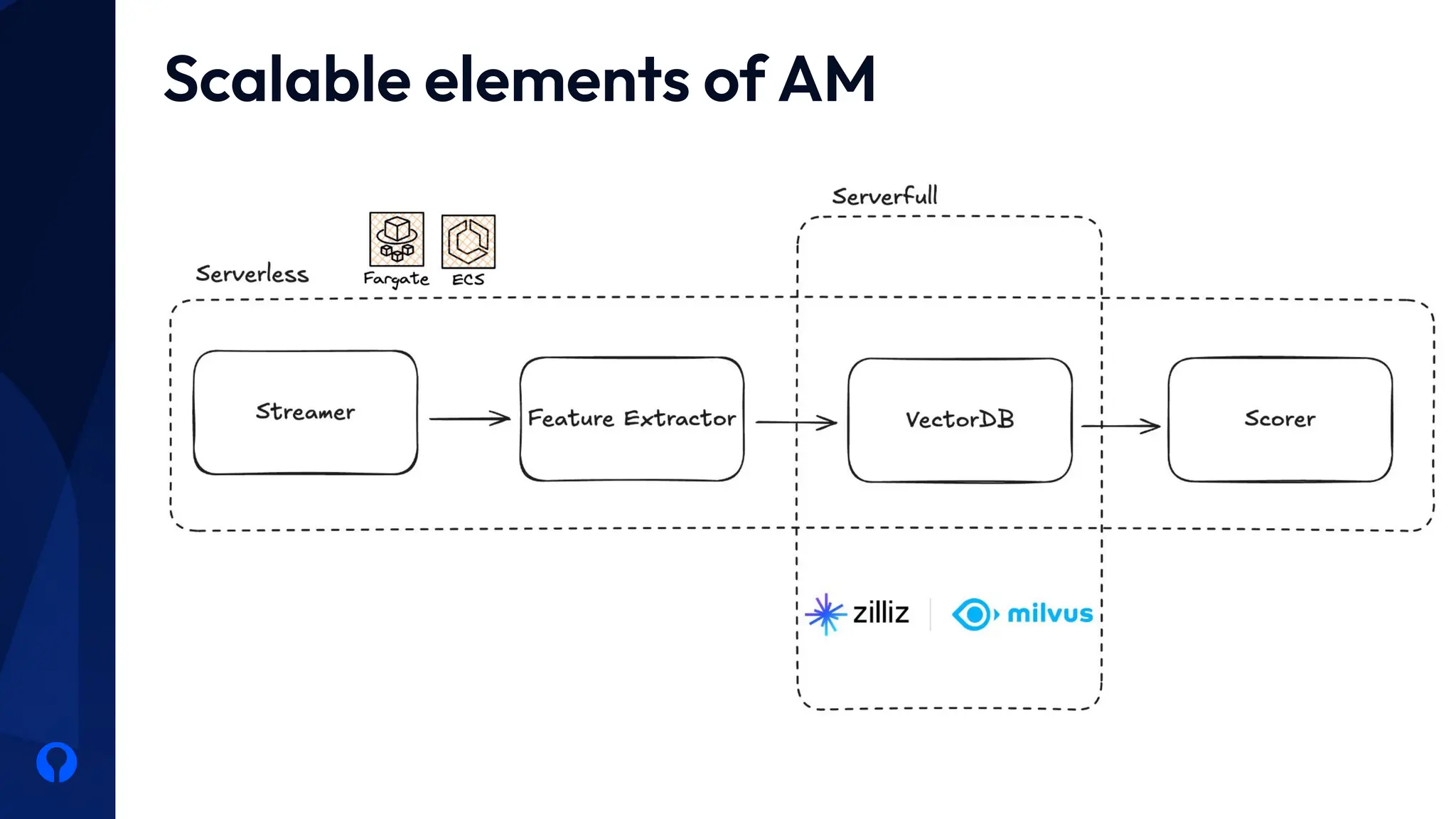

Attendees can expect a brief overview of our services, the tools we use to bring our models to production, and the cloud architecture that makes all this possible, followed by a deeper dive into how technologies such as vector databases have enabled us to reach our current scale without breaking the bank.