Apache Spark Streaming: Architecture and Fault Tolerance

- 1. © 2015 IBM Corporation Apache Hadoop Day 2015 Paranth Thiruvengadam – Architect @ IBM Sachin Aggarwal – Developer @ IBM

- 2. © 2015 IBM Corporation Spark Streaming Features of Spark Streaming High Level API (joins, windows etc.) Fault – Tolerant (exactly once semantics achievable) Deep Integration with Spark Ecosystem (MLlib, SQL, GraphX etc.) Apache Hadoop Day 2015

- 3. © 2015 IBM Corporation Architecture Apache Hadoop Day 2015

- 4. © 2015 IBM Corporation High Level Overview Apache Hadoop Day 2015

- 5. © 2015 IBM Corporation Receiving Data Driver RECEIVER Input Source Executor Executor Data Blocks Data Blocks Data Blocks Are replicated To another Executor Driver runs Receiver as Long running tasks Receiver divides Streams into Blocks and keeps in memory Apache Hadoop Day 2015

- 6. © 2015 IBM Corporation Processing Data Driver RECEIVER Executor Executor Data Blocks Data Blocks Every batch Internal Driver Launches tasks To process the blocks Data Store results results

- 7. © 2015 IBM Corporation What’s different from other Streaming applications?

- 8. © 2015 IBM Corporation Traditional Stream Processing

- 9. © 2015 IBM Corporation Load Balancing…

- 10. © 2015 IBM Corporation Node failure / Stragglers…

- 11. © 2015 IBM Corporation Word Count with Kafka

- 12. © 2015 IBM Corporation Fault Tolerance

- 13. © 2015 IBM Corporation Fault Tolerance Why Care? Different guarantees for Data Loss Atleast Once Exactly Once What all can fail? Driver Executor

- 14. © 2015 IBM Corporation What happens when executor fails?

- 15. © 2015 IBM Corporation What happens when Driver fails?

- 16. © 2015 IBM Corporation Recovering Driver – Checkpointing

- 17. © 2015 IBM Corporation Driver restart

- 18. © 2015 IBM Corporation Driver restart – ToDO List Configure automatic driver restart Spark Standalone YARN Set Checkpoint in HDFS compatible file system streamingContext.checkpiont(hdfsDirectory) Ensure the Code uses checkpoints for recovery Def setupStreamingContext() : StreamingContext = { Val context = new StreamingContext(…) Val lines = KafkaUtils.createStream(…) … Context.checkpoint(hdfsDir) Val context = StreamingContext.getOrCreate(hdfsDir, setupStreamingContext) Context.start()

- 19. © 2015 IBM Corporation WAL for no data loss

- 20. © 2015 IBM Corporation Recover using WAL

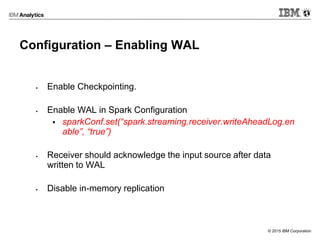

- 21. © 2015 IBM Corporation Configuration – Enabling WAL Enable Checkpointing. Enable WAL in Spark Configuration sparkConf.set(“spark.streaming.receiver.writeAheadLog.en able”, “true”) Receiver should acknowledge the input source after data written to WAL Disable in-memory replication

- 22. © 2015 IBM Corporation Normal Processing

- 23. © 2015 IBM Corporation Restarting Failed Driver

- 24. © 2015 IBM Corporation Fault-Tolerant Semantics Exactly Once, If Outputs are Idempotent or transactional Exactly Once, as long as received data is not lost Aleast Once, with Checkpointing / WAL Source Receiving Transforming Outputting Sink

- 25. © 2015 IBM Corporation Fault-Tolerant Semantics Exactly Once, If Outputs are Idempotent or transactional Exactly Once, as long as received data is not lost Exactly Once, with Kafka Direct API Source Receiving Transforming Outputting Sink

- 26. © 2015 IBM Corporation How to achieve “exactly once” guarantee?

- 27. © 2015 IBM Corporation Before Kafka Direct API

- 28. © 2015 IBM Corporation Kafka Direct API • Simplified Parallelism • Less Storage Need • Exactly Once Semantics Benefits of this approach

- 29. © 2015 IBM Corporation Demo

- 30. D E M O SPARK STREAMING

- 31. OVERVIEW OF SPARK STREAMING

- 32. DISCRETIZED STREAMS (DSTREAMS) • Dstream is basic abstraction in Spark Streaming. • It is represented by a continuous series of RDDs(of the same type). • Each RDD in a DStream contains data from a certain interval • DStreams can either be created from live data (such as, data from TCP sockets, Kafka, Flume, etc.) using a Streaming Context or it can be generated by transforming existing DStreams using operations such as `map`, `window` and `reduceByKeyAndWindow`.

- 34. WORD COUNT val sparkConf = new SparkConf() .setMaster("local[2]”) .setAppName("WordCount") val sc = new SparkContext( sparkConf) val file = sc.textFile(“filePath”) val words = file .flatMap(_.split(" ")) Val pairs = words .map(x => (x, 1)) val wordCounts =pairs .reduceByKey(_ + _) wordCounts.saveAsTextFile(args(1)) val conf = new SparkConf() .setMaster("local[2]") .setAppName("SocketStreaming") val ssc = new StreamingContext( conf, Seconds(2)) val lines = ssc .socketTextStream("localhost", 9998) val words = lines .flatMap(_.split(" ")) val pairs = words .map(word => (word, 1)) val wordCounts = pairs .reduceByKey(_ + _) wordCounts.print() ssc.start() ssc.awaitTermination()

- 35. DEMO

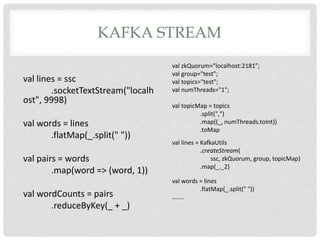

- 36. KAFKA STREAM val lines = ssc .socketTextStream("localh ost", 9998) val words = lines .flatMap(_.split(" ")) val pairs = words .map(word => (word, 1)) val wordCounts = pairs .reduceByKey(_ + _) val zkQuorum="localhost:2181”; val group="test"; val topics="test"; val numThreads="1"; val topicMap = topics .split(",") .map((_, numThreads.toInt)) .toMap val lines = KafkaUtils .createStream( ssc, zkQuorum, group, topicMap) .map(_._2) val words = lines .flatMap(_.split(" ")) ……..

- 37. DEMO

- 38. OPERATIONS • Repartition • Operation on RDD (Example print partition count of each RDD) Val re_lines=lines .repartition(5) re_lines .foreachRDD(x =>fun(x)) def fun (rdd:RDD[String]) ={ print("partition count” + rdd.partitions.length) }

- 39. DEMO

- 40. STATELESS TRANSFORMATIONS • map() Apply a function to each element in the DStream and return a DStream of the result. • ds.map(x => x + 1) • flatMap() Apply a function to each element in the DStream and return a DStream of the contents of the iterators returned. • ds.flatMap(x => x.split(" ")) • filter() Return a DStream consisting of only elements that pass the condition passed to filter. • ds.filter(x => x != 1) • repartition() Change the number of partitions of the DStream. • ds.repartition(10) • reduceBy Combine values with the same Key() key in each batch. • ds.reduceByKey( (x,y)=>x+y) • groupBy Group values with the same Key() key in each batch. • ds.groupByKey()

- 41. DEMO

- 42. STATEFUL TRANSFORMATIONS Stateful transformations require checkpointing to be enabled in your StreamingContext for fault tolerance • Windowed transformations: windowed computations allow you to apply transformations over a sliding window of data • UpdateStateByKey transformation: Enables this by providing access to a state variable for DStreams of key/value pairs

- 43. DEMO

- 44. WINDOW OPERATIONS This shows that any window operation needs to specify two parameters. • window length - The duration of the window. • sliding interval - The interval at which the window operation is performed. These two parameters must be multiples of the batch interval of the source Dstream

- 45. DEMO

- 46. WINDOWED TRANSFORMATIONS • window(windowLength, slideInterval) • Return a new Dstream, computed based on windowed batches of the source Dstream. • countByWindow(windowLength, slideInterval) • Return a sliding window count of elements in the stream. • val totalWordCount= words.countByWindow(Seconds(30), Seconds(10)) • reduceByWindow(func, windowLength, slideInterval) • Return a new single-element stream, created by aggregating elements in the stream over a sliding interval using func. • The function should be associative so that it can be computed correctly in parallel. • val totalWordCount= pairs.reduceByWindow({(x, y) => x + y},{(x, y) => x – y} Seconds(10) • reduceByKeyAndWindow(func, windowLength, slideInterval, [numTasks]) • Returns a new DStream of (K, V) pairs where the values for each key are aggregated using the given reduce function func over batches in a sliding window • val windowedWordCounts = pairs.reduceByKeyAndWindow((a:Int,b:Int) => (a + b), Seconds(30), Seconds(10)) • countByValueAndWindow(windowLength, slideInterval) • Returns a new DStream of (K, Long) pairs where the value of each key is its frequency within a sliding window. • val EachWordCount= word.countByValueAndWindow(Seconds(30), Seconds(10))

- 47. DEMO

- 48. UPDATE STATE BY KEY TRANSFORMATION • updateStateByKey() • Enables this by providing access to a state variable for DStreams of key/value pairs • User provide a function updateFunc(events, oldState) and initialRDD • val initialRDD = ssc.sparkContext.parallelize(List(("hello", 1), ("world", 1))) • val updateFunc = (values: Seq[Int], state: Option[Int]) => { val currentCount = values.foldLeft(0)(_ + _) val previousCount = state.getOrElse(0) Some(currentCount + previousCount) } • val stateCount= pairs.updateStateByKey[Int](updateFunc)

- 49. DEMO

- 50. TRANSFORM OPERATION • The transform operation allows arbitrary RDD-to-RDD functions to be applied on a DStream. • It can be used to apply any RDD operation that is not exposed in the DStream API. • For example, the functionality of joining every batch in a data stream with another dataset is not directly exposed in the DStream API. • val cleanedDStream = wordCounts.transform(rdd => { rdd.join(data) })

- 51. DEMO

- 52. JOIN OPERATIONS • Stream-stream joins: • Streams can be very easily joined with other streams. • val stream1: DStream[String, String] = ... • val stream2: DStream[String, String] = ... • val joinedStream = stream1.join(stream2) • Windowed join • val windowedStream1 = stream1.window(Seconds(20)) • val windowedStream2 = stream2.window(Minutes(1)) • val joinedStream = windowedStream1.join(windowedStream2) • Stream-dataset joins • val dataset: RDD[String, String] = ... • val windowedStream = stream.window(Seconds(20))... • val joinedStream = windowedStream.transform { rdd => rdd.join(dataset) }

- 53. DEMO

- 54. USING FOREACHRDD() • foreachRDD is a powerful primitive that allows data to be sent out to external systems. • dstream.foreachRDD { rdd => rdd.foreachPartition { partitionOfRecords => val connection = ConnectionPool.getConnection() partitionOfRecords.foreach(record => connection.send(record)) ConnectionPool.returnConnection(connection) } } • Using foreachRDD, Each RDD is converted to a DataFrame, registered as a temporary table and then queried using SQL. • words.foreachRDD { rdd => val sqlContext = SQLContext.getOrCreate(rdd.sparkContext) import sqlContext.implicits._ val wordsDataFrame = rdd.toDF("word") wordsDataFrame.registerTempTable("words") val wordCountsDataFrame = sqlContext.sql("select word, count(*) as total from words group by word") wordCountsDataFrame.show() }

- 55. DEMO

- 56. DSTREAMS (SPARK CODE) • DStreams internally is characterized by a few basic properties: • A list of other DStreams that the DStream depends on • A time interval at which the DStream generates an RDD • A function that is used to generate an RDD after each time interval • Methods that should be implemented by subclasses of Dstream • Time interval after which the DStream generates a RDD • def slideDuration: Duration • List of parent DStreams on which this DStream depends on • def dependencies: List[DStream[_]] • Method that generates a RDD for the given time • def compute(validTime: Time): Option[RDD[T]] • This class contains the basic operations available on all DStreams, such as `map`, `filter` and `window`. In addition, PairDStreamFunctions contains operations available only on DStreams of key-value pairs, such as `groupByKeyAndWindow` and `join`. These operations are automatically available on any DStream of pairs (e.g., DStream[(Int, Int)] through implicit conversions.

- 57. © 2015 IBM Corporation

Editor's Notes

- #5: Continuous operator processing model. Each node continuously receives records, updates internal state, and emits new records. The latency is low but Fault tolerance is typically achieved through replication, using a synchronization protocol like Flux. D-Stream processing model. In each time interval, the records that arrive are stored reliably across the cluster to form an immutable, partitioned dataset. This is then processed via deterministic parallel operations to compute other distributed datasets that represent program output or state to pass to the next interval. Each series of datasets forms one D-Stream

- #9: Continuous operator processing model. Each node continuously receives records, updates internal state, and emits new records. The latency is low but Fault tolerance is typically achieved through replication, using a synchronization protocol like Flux. D-Stream processing model. In each time interval, the records that arrive are stored reliably across the cluster to form an immutable, partitioned dataset. This is then processed via deterministic parallel operations to compute other distributed datasets that represent program output or state to pass to the next interval. Each series of datasets forms one D-Stream

- #10: Continuous operator processing model. Each node continuously receives records, updates internal state, and emits new records. The latency is low but Fault tolerance is typically achieved through replication, using a synchronization protocol like Flux. D-Stream processing model. In each time interval, the records that arrive are stored reliably across the cluster to form an immutable, partitioned dataset. This is then processed via deterministic parallel operations to compute other distributed datasets that represent program output or state to pass to the next interval. Each series of datasets forms one D-Stream

- #11: Continuous operator processing model. Each node continuously receives records, updates internal state, and emits new records. The latency is low but Fault tolerance is typically achieved through replication, using a synchronization protocol like Flux. D-Stream processing model. In each time interval, the records that arrive are stored reliably across the cluster to form an immutable, partitioned dataset. This is then processed via deterministic parallel operations to compute other distributed datasets that represent program output or state to pass to the next interval. Each series of datasets forms one D-Stream

- #19: Have to have a sample code before coming to this slide.

- #23: reference ids of the blocks for locating their data in the executor memory, (ii) offset information of the block data in the logs

- #26: Have to read on Kafka Direct API.

![WORD COUNT

val sparkConf = new SparkConf()

.setMaster("local[2]”)

.setAppName("WordCount")

val sc = new SparkContext(

sparkConf)

val file = sc.textFile(“filePath”)

val words = file

.flatMap(_.split(" "))

Val pairs = words

.map(x => (x, 1))

val wordCounts =pairs

.reduceByKey(_ + _)

wordCounts.saveAsTextFile(args(1))

val conf = new SparkConf()

.setMaster("local[2]")

.setAppName("SocketStreaming")

val ssc = new StreamingContext(

conf, Seconds(2))

val lines = ssc

.socketTextStream("localhost", 9998)

val words = lines

.flatMap(_.split(" "))

val pairs = words

.map(word => (word, 1))

val wordCounts = pairs

.reduceByKey(_ + _)

wordCounts.print()

ssc.start()

ssc.awaitTermination()](https://image.slidesharecdn.com/sparkstreaming-new-160130060521/85/Apache-Spark-Streaming-Architecture-and-Fault-Tolerance-34-320.jpg)

![OPERATIONS

• Repartition

• Operation on RDD

(Example print partition count

of each RDD)

Val re_lines=lines

.repartition(5)

re_lines

.foreachRDD(x =>fun(x))

def fun (rdd:RDD[String]) ={

print("partition count”

+ rdd.partitions.length)

}](https://image.slidesharecdn.com/sparkstreaming-new-160130060521/85/Apache-Spark-Streaming-Architecture-and-Fault-Tolerance-38-320.jpg)

![WINDOWED TRANSFORMATIONS

• window(windowLength, slideInterval)

• Return a new Dstream, computed based on windowed batches of the source Dstream.

• countByWindow(windowLength, slideInterval)

• Return a sliding window count of elements in the stream.

• val totalWordCount= words.countByWindow(Seconds(30), Seconds(10))

• reduceByWindow(func, windowLength, slideInterval)

• Return a new single-element stream, created by aggregating elements in the stream over a sliding

interval using func.

• The function should be associative so that it can be computed correctly in parallel.

• val totalWordCount= pairs.reduceByWindow({(x, y) => x + y},{(x, y) => x – y} Seconds(10)

• reduceByKeyAndWindow(func, windowLength, slideInterval, [numTasks])

• Returns a new DStream of (K, V) pairs where the values for each key are aggregated using the

given reduce function func over batches in a sliding window

• val windowedWordCounts = pairs.reduceByKeyAndWindow((a:Int,b:Int) => (a + b), Seconds(30),

Seconds(10))

• countByValueAndWindow(windowLength, slideInterval)

• Returns a new DStream of (K, Long) pairs where the value of each key is its frequency within a

sliding window.

• val EachWordCount= word.countByValueAndWindow(Seconds(30), Seconds(10))](https://image.slidesharecdn.com/sparkstreaming-new-160130060521/85/Apache-Spark-Streaming-Architecture-and-Fault-Tolerance-46-320.jpg)

![UPDATE STATE BY KEY

TRANSFORMATION

• updateStateByKey()

• Enables this by providing access to a state variable for DStreams of

key/value pairs

• User provide a function updateFunc(events, oldState) and initialRDD

• val initialRDD = ssc.sparkContext.parallelize(List(("hello", 1),

("world", 1)))

• val updateFunc = (values: Seq[Int], state: Option[Int]) => {

val currentCount = values.foldLeft(0)(_ + _)

val previousCount = state.getOrElse(0)

Some(currentCount + previousCount)

}

• val stateCount= pairs.updateStateByKey[Int](updateFunc)](https://image.slidesharecdn.com/sparkstreaming-new-160130060521/85/Apache-Spark-Streaming-Architecture-and-Fault-Tolerance-48-320.jpg)

![JOIN OPERATIONS

• Stream-stream joins:

• Streams can be very easily joined with other streams.

• val stream1: DStream[String, String] = ...

• val stream2: DStream[String, String] = ...

• val joinedStream = stream1.join(stream2)

• Windowed join

• val windowedStream1 = stream1.window(Seconds(20))

• val windowedStream2 = stream2.window(Minutes(1))

• val joinedStream = windowedStream1.join(windowedStream2)

• Stream-dataset joins

• val dataset: RDD[String, String] = ...

• val windowedStream = stream.window(Seconds(20))...

• val joinedStream = windowedStream.transform { rdd => rdd.join(dataset) }](https://image.slidesharecdn.com/sparkstreaming-new-160130060521/85/Apache-Spark-Streaming-Architecture-and-Fault-Tolerance-52-320.jpg)

![DSTREAMS (SPARK CODE)

• DStreams internally is characterized by a few basic properties:

• A list of other DStreams that the DStream depends on

• A time interval at which the DStream generates an RDD

• A function that is used to generate an RDD after each time interval

• Methods that should be implemented by subclasses of Dstream

• Time interval after which the DStream generates a RDD

• def slideDuration: Duration

• List of parent DStreams on which this DStream depends on

• def dependencies: List[DStream[_]]

• Method that generates a RDD for the given time

• def compute(validTime: Time): Option[RDD[T]]

• This class contains the basic operations available on all DStreams, such as

`map`, `filter` and `window`. In addition, PairDStreamFunctions contains

operations available only on DStreams of key-value pairs, such as

`groupByKeyAndWindow` and `join`. These operations are automatically

available on any DStream of pairs (e.g., DStream[(Int, Int)] through implicit

conversions.](https://image.slidesharecdn.com/sparkstreaming-new-160130060521/85/Apache-Spark-Streaming-Architecture-and-Fault-Tolerance-56-320.jpg)