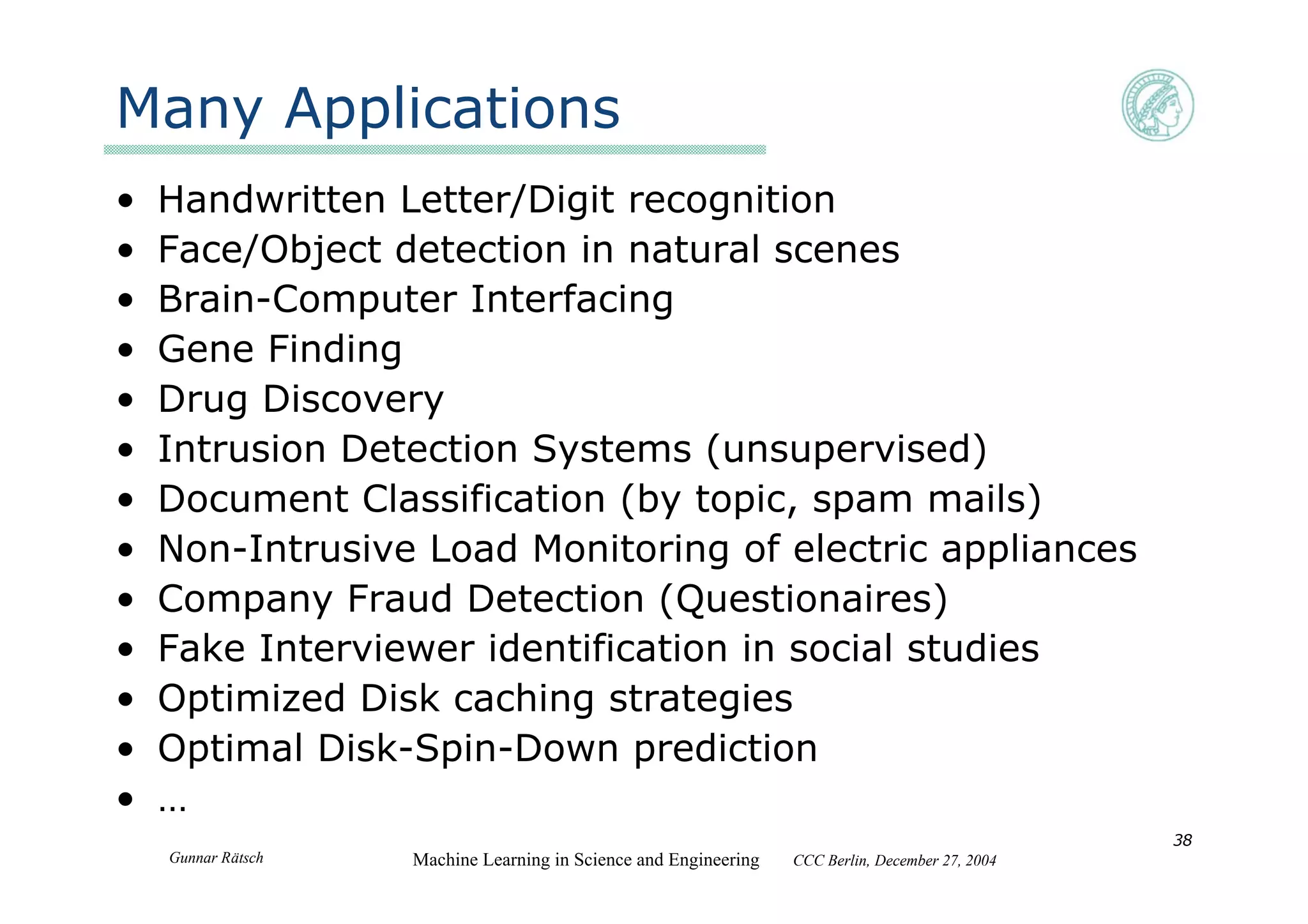

This document summarizes Gunnar Rätsch's presentation on machine learning in science and engineering. It introduces several applications of machine learning, including spam classification, drug design, and face detection, and explains boosting algorithms such as AdaBoost, support vector machines (SVMs), and kernels at a high level. It closes with brief mentions of machine learning applications in other domains.

![Gunnar Rätsch, Machine Learning in Science and Engineering, CCC Berlin, December 27, 2004 (slide 51)](https://image.slidesharecdn.com/machine-learning-in-science-and-engineering2278/75/Machine-Learning-in-Science-and-Engineering-51-2048.jpg)

The Cocktail Party Problem

• input: 3 mixed signals
• algorithm: enforce independence (“independent component analysis”) via temporal de-correlation
• output: 3 separated signals

(Demo: Andreas Ziehe, Fraunhofer FIRST, Berlin)

“Imagine that you are on the edge of a lake and a friend challenges you to play a game. The game is this: Your friend digs two narrow channels up from the side of the lake […]. Halfway up each one, your friend stretches a handkerchief and fastens it to the sides of the channel. As waves reach the side of the lake they travel up the channels and cause the two handkerchiefs to go into motion. You are allowed to look only at the handkerchiefs and from their motions to answer a series of questions: How many boats are there on the lake and where are they? Which is the most powerful one? Which one is closer? Is the wind blowing?” (Auditory Scene Analysis, A. Bregman)
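
The cocktail party slide describes separating three mixed signals by enforcing independence through temporal de-correlation. A minimal sketch of that idea in Python with NumPy follows. It assumes a single-lag, AMUSE-style variant (whiten the mixtures, then diagonalize one time-lagged covariance matrix); this is not necessarily the method used in the demo. The function name `amuse`, the synthetic sources, and the mixing matrix are all hypothetical and chosen only for illustration.

```python
import numpy as np


def amuse(x, lag=1):
    """Unmix signals x of shape (n_signals, n_samples) using one time lag.

    Assumption: single-lag AMUSE-style separation, shown for illustration;
    not necessarily the algorithm behind the slide's demo.
    """
    x = x - x.mean(axis=1, keepdims=True)
    n = x.shape[1]

    # Step 1: whiten, so the zero-lag covariance becomes the identity.
    cov0 = x @ x.T / n
    eigvals, eigvecs = np.linalg.eigh(cov0)
    whitener = eigvecs @ np.diag(eigvals ** -0.5) @ eigvecs.T
    z = whitener @ x

    # Step 2: symmetrized covariance between the signals and their
    # time-shifted copies.
    c_lag = z[:, :-lag] @ z[:, lag:].T / (n - lag)
    c_lag = (c_lag + c_lag.T) / 2

    # Step 3: the eigenvectors of the lagged covariance give the rotation
    # that also removes correlations at this lag.
    _, rotation = np.linalg.eigh(c_lag)
    unmixing = rotation.T @ whitener
    return unmixing @ x, unmixing


# Hypothetical sources with clearly different temporal structure.
rng = np.random.default_rng(0)
t = np.arange(5000)
sources = np.vstack([
    np.sin(2 * np.pi * t / 50),    # slow oscillation
    np.sin(2 * np.pi * t / 7.3),   # fast oscillation
    rng.standard_normal(t.size),   # white noise
])

# Hypothetical mixing matrix: each "microphone" hears all three sources.
mixing = np.array([[1.0, 0.5, 0.3],
                   [0.4, 1.0, 0.6],
                   [0.2, 0.7, 1.0]])
mixed = mixing @ sources

recovered, unmixing = amuse(mixed)

# Absolute correlation of every true source with every recovered signal.
match = np.abs(np.corrcoef(np.vstack([sources, recovered]))[:3, 3:])
print(np.round(match.max(axis=1), 3))
```

The recovered signals come back in arbitrary order and with arbitrary sign and scale, which is why the check uses absolute correlations. A single lag only works when the sources have distinct autocorrelations at that lag; using several lags jointly is more robust but needs a joint diagonalization step that is omitted here.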

![Slide 53: Single Trial vs. Averaging](https://image.slidesharecdn.com/machine-learning-in-science-and-engineering2278/75/Machine-Learning-in-Science-and-Engineering-53-2048.jpg)

Single Trial vs. Averaging

(Figure: four panels plotting amplitude in µV, from -15 to 15, against time in ms; two panels span -500 to 0 ms and two span -600 to 0 ms. Panel labels: LEFT hand (ch. C4) and RIGHT hand (ch. C3).)
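
The slide contrasts single trials with averages. One general reason averaging helps is that independent noise shrinks by roughly the square root of the number of trials while a repeated signal stays the same. The sketch below illustrates only that statistical point on synthetic data. The ramp-shaped `template`, the noise level, and the trial count are hypothetical values, not measurements from the presentation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Time axis in ms, matching the -500..0 range on the slide.
t_ms = np.arange(-500, 1)

# Hypothetical underlying signal: a slow ramp reaching -5 µV at t = 0.
template = -5.0 * (t_ms + 500) / 500

# Hypothetical noise level and trial count.
noise_std = 10.0
n_trials = 100

# Each row is one simulated trial: the same template plus fresh noise.
trials = template + noise_std * rng.standard_normal((n_trials, t_ms.size))

# Residual noise in one trial versus in the average over all trials.
single_trial_error = np.std(trials[0] - template)
average_error = np.std(trials.mean(axis=0) - template)

print(f"single trial noise: {single_trial_error:.2f} µV")
print(f"average of {n_trials} trials: {average_error:.2f} µV")
```

With 100 trials the residual noise in the average should be about one tenth of the single-trial noise. Averaging is only available offline, though; a system that must react to each individual trial has to cope with the full single-trial noise level.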