Parallelizing Existing R Packages

0 likes545 views

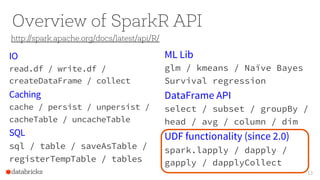

The document provides an overview of the SparkR package, detailing its architecture, API, and functionalities for integrating R with Apache Spark. It highlights key features such as data manipulation, machine learning capabilities, and functions for executing distributed computations. Additionally, it offers guidance on debugging user code and emphasizes best practices for performance optimization.

1 of 27

Downloaded 13 times

![Overview of SparkR API :: DataFrame

API

SparkR DataFrame behaves similar to R data.frames

> sparkDF$newCol <- sparkDF$col + 1

> subsetDF <- sparkDF[, c(“date”, “type”)]

> recentData <- subset(sparkDF$date == “2015-10-24”)

> firstRow <- sparkDF[[1, ]]

> names(subsetDF) <- c(“Date”, “Type”)

> dim(recentData)

> head(count(group_by(subsetDF, “Date”)))

10](https://image.slidesharecdn.com/parallelizingexistingrpackages-170310144534/85/Parallelizing-Existing-R-Packages-10-320.jpg)

Ad

Recommended

Advanced Apache Spark Meetup Spark SQL + DataFrames + Catalyst Optimizer + Da...

Advanced Apache Spark Meetup Spark SQL + DataFrames + Catalyst Optimizer + Da...Chris Fregly The document details a meetup presentation about advanced Apache Spark, focusing on Spark SQL, DataFrames, and the Catalyst optimizer, delivered by Chris Fregly from IBM. It covers the structure, functionality, and performance tuning of DataFrames and introduces custom data sources, partitioning, and optimization techniques. Additionally, it outlines upcoming meetups and future topics related to Spark technology.

Structuring Apache Spark 2.0: SQL, DataFrames, Datasets And Streaming - by Mi...

Structuring Apache Spark 2.0: SQL, DataFrames, Datasets And Streaming - by Mi...Databricks This document summarizes key aspects of structuring computation and data in Apache Spark using SQL, DataFrames, and Datasets. It discusses how structuring computation and data through these APIs enables optimizations like predicate pushdown and efficient joins. It also describes how data is encoded efficiently in Spark's internal format and how encoders translate between domain objects and Spark's internal representations. Finally, it introduces structured streaming as a high-level streaming API built on top of Spark SQL that allows running the same queries continuously on streaming data.

Extending Apache Spark – Beyond Spark Session Extensions

Extending Apache Spark – Beyond Spark Session ExtensionsDatabricks The document discusses customizing Apache Spark, focusing on a custom state store that facilitates stateful operations. It outlines three subjective levels of customization related to SQL plans, data sources/sinks, and state management, while providing technical details on state store operations and maintenance. Additionally, it includes references to resources for further reading and emphasizes the importance of user feedback.

Spark SQL Join Improvement at Facebook

Spark SQL Join Improvement at FacebookDatabricks The document discusses improvements to Spark SQL joins, focusing on enhancements for shuffled hash joins, including full outer join support and proposing the use of bloom filters to optimize performance. It details specific changes implemented in Spark 3.1, such as code generation support and strategies for better handling large data sizes. Additionally, it outlines future work, including optimizing join strategies based on historical data.

20140908 spark sql & catalyst

20140908 spark sql & catalystTakuya UESHIN This document introduces Spark SQL and the Catalyst query optimizer. It discusses that Spark SQL allows executing SQL on Spark, builds SchemaRDDs, and optimizes query execution plans. It then provides details on how Catalyst works, including its use of logical expressions, operators, and rules to transform query trees and optimize queries. Finally, it outlines some interesting open issues and how to contribute to Spark SQL's development.

InfluxDB IOx Tech Talks: Query Engine Design and the Rust-Based DataFusion in...

InfluxDB IOx Tech Talks: Query Engine Design and the Rust-Based DataFusion in...InfluxData The document discusses updates to InfluxDB IOx, a new columnar time series database. It covers changes and improvements to the API, CLI, query capabilities, and path to open sourcing builds. Key points include moving to gRPC for management, adding PostgreSQL string functions to queries, optimizing functions for scalar values and columns, and monitoring internal systems as the first step to releasing open source builds.

Spark SQL Deep Dive @ Melbourne Spark Meetup

Spark SQL Deep Dive @ Melbourne Spark MeetupDatabricks This document summarizes a presentation on Spark SQL and its capabilities. Spark SQL allows users to run SQL queries on Spark, including HiveQL queries with UDFs, UDAFs, and SerDes. It provides a unified interface for reading and writing data in various formats. Spark SQL also allows users to express common operations like selecting columns, joining data, and aggregation concisely through its DataFrame API. This reduces the amount of code users need to write compared to lower-level APIs like RDDs.

Making Structured Streaming Ready for Production

Making Structured Streaming Ready for ProductionDatabricks The document discusses structured streaming with Apache Spark, highlighting its capabilities for building robust stream processing applications and managing complex data. It elaborates on the model of treating streams as unbounded tables and introduces features like event time processing, checkpointing for fault tolerance, and seamless integration with various data sources. Additionally, it covers advanced functionalities such as stateful processing and watermarking for late data handling, showcasing Spark's performance enhancements over traditional ETL processes.

Stanford CS347 Guest Lecture: Apache Spark

Stanford CS347 Guest Lecture: Apache SparkReynold Xin The guest lecture by Reynold Xin at Stanford University discusses Spark and its advantages over MapReduce, emphasizing the simplicity of programming and performance benefits for large datasets. It introduces the concept of Resilient Distributed Datasets (RDDs) as a core abstraction in Spark, highlighting their fault-tolerance and parallel processing capabilities. The lecture also covers Spark's application in streaming, machine learning, and SQL, showcasing its versatility in data processing tasks.

Big data analytics_beyond_hadoop_public_18_july_2013

Big data analytics_beyond_hadoop_public_18_july_2013Vijay Srinivas Agneeswaran, Ph.D This document discusses alternatives to Hadoop for big data analytics. It introduces the Berkeley data analytics stack, including Spark, and compares the performance of iterative machine learning algorithms between Spark and Hadoop. It also discusses using Twitter's Storm for real-time analytics and compares the performance of Mahout and R/ML over Storm. The document provides examples of using Spark for logistic regression and k-means clustering and discusses how companies like Ooyala and Conviva have benefited from using Spark.

Easy, scalable, fault tolerant stream processing with structured streaming - ...

Easy, scalable, fault tolerant stream processing with structured streaming - ...Databricks The document discusses the complexities of building scalable and fault-tolerant stream processing applications using Structured Streaming with Apache Spark. It highlights key features such as handling diverse data formats and storage systems, ensuring exactly-once processing semantics, and leveraging Spark SQL for incremental execution. The presentation covers practical examples and concepts like triggers, output modes, and watermarking to efficiently manage state and late data in streaming queries.

Spark meetup v2.0.5

Spark meetup v2.0.5Yan Zhou The document discusses Huawei Technologies' implementation of Spark SQL on HBase, highlighting its motivations, performance capabilities, and future roadmap. It details the unique data challenges in telecommunications and how the technology can handle complex query types efficiently. Additionally, the paper reviews performance metrics, optimizations, and contributions to the Spark ecosystem from Huawei's big data teams.

Sparkcamp @ Strata CA: Intro to Apache Spark with Hands-on Tutorials

Sparkcamp @ Strata CA: Intro to Apache Spark with Hands-on TutorialsDatabricks The document provides an outline for the Spark Camp @ Strata CA tutorial. The morning session will cover introductions and getting started with Spark, an introduction to MLlib, and exercises on working with Spark on a cluster and notebooks. The afternoon session will cover Spark SQL, visualizations, Spark streaming, building Scala applications, and GraphX examples. The tutorial will be led by several instructors from Databricks and include hands-on coding exercises.

Apache spark basics

Apache spark basicssparrowAnalytics.com Apache Spark is an open source Big Data analytical framework. It introduces the concept of RDDs (Resilient Distributed Datasets) which allow parallel operations on large datasets. The document discusses starting Spark, Spark applications, transformations and actions on RDDs, RDD creation in Scala and Python, and examples including word count. It also covers flatMap vs map, custom methods, and assignments involving transformations on lists.

Spark SQL - 10 Things You Need to Know

Spark SQL - 10 Things You Need to KnowKristian Alexander The document discusses loading data into Spark SQL and the differences between DataFrame functions and SQL. It provides examples of loading data from files, cloud storage, and directly into DataFrames from JSON and Parquet files. It also demonstrates using SQL on DataFrames after registering them as temporary views. The document outlines how to load data into RDDs and convert them to DataFrames to enable SQL querying, as well as using SQL-like functions directly in the DataFrame API.

DataEngConf SF16 - Spark SQL Workshop

DataEngConf SF16 - Spark SQL WorkshopHakka Labs The document outlines an agenda for an Apache Spark workshop, including topics such as Spark SQL, RDD operations, and user-defined functions (UDFs). It discusses the framework's features, advantages of using schemas in Spark SQL, and practical coding sessions. Additionally, the document addresses caching data and different data formats for reading and writing within Spark, emphasizing efficiency and productivity for developers and data scientists.

SparkR: Enabling Interactive Data Science at Scale

SparkR: Enabling Interactive Data Science at Scalejeykottalam The document discusses SparkR, which enables interactive data science using R on Apache Spark clusters. SparkR allows users to create and manipulate resilient distributed datasets (RDDs) from R and run R analytics functions in parallel on large datasets. It provides examples of using SparkR for tasks like word counting on text data and digit classification using the MNIST dataset. The API is designed to be similar to PySpark for ease of use.

SparkR - Play Spark Using R (20160909 HadoopCon)

SparkR - Play Spark Using R (20160909 HadoopCon)wqchen The document provides a comprehensive overview of 'sparkr', an R interface for Apache Spark, highlighting its origins, features, and usage. It covers key concepts such as data frames, RDDs, machine learning libraries, and practical applications for data analysis and visualization using R. Additionally, it discusses the future development plans for sparkr and includes various references and resources for further learning.

SparkSQL: A Compiler from Queries to RDDs

SparkSQL: A Compiler from Queries to RDDsDatabricks The document presents an overview of SparkSQL, detailing its transformation from queries to Resilient Distributed Datasets (RDDs) and discussing the effective use of high-level APIs for optimized query execution. It covers crucial concepts such as Abstract Syntax Trees (AST), logical and physical query plans, as well as optimization techniques like predicate pushdown and whole-stage code generation to enhance performance. Future developments for Spark include the introduction of cost-based optimizers and improvements for better performance on many-core machines.

Everyday I'm Shuffling - Tips for Writing Better Spark Programs, Strata San J...

Everyday I'm Shuffling - Tips for Writing Better Spark Programs, Strata San J...Databricks The document provides insights on optimizing Apache Spark jobs by understanding key functional elements such as shuffling, appropriate use of operations like reduceByKey versus groupByKey, and how to handle joins effectively. It emphasizes the importance of understanding Spark's internal workings to write efficient, well-tested, and reusable jobs while highlighting common pitfalls and best practices. Additionally, it discusses strategies for reusing code between batch and streaming processes, testing scenarios in Spark, and diagnosing performance issues.

Apache Spark - Dataframes & Spark SQL - Part 2 | Big Data Hadoop Spark Tutori...

Apache Spark - Dataframes & Spark SQL - Part 2 | Big Data Hadoop Spark Tutori...CloudxLab The document provides an overview of using Spark SQL with DataFrames, focusing on loading and displaying data from XML, Avro, and Parquet formats. It describes the necessary setup, including the use of Spark packages and JDBC for accessing databases, while also highlighting Spark's compatibility with Hive tables. Additionally, it touches on the use of a distributed SQL engine through a JDBC/ODBC server setup.

Apache Carbondata: An Indexed Columnar File Format for Interactive Query with...

Apache Carbondata: An Indexed Columnar File Format for Interactive Query with...Spark Summit Apache CarbonData is an indexed columnar file format designed for interactive querying with Spark SQL, offering efficient data management for both OLAP and detailed queries. It features a unique multi-dimensional indexing structure and is geared towards optimizing storage and query performance, particularly in large datasets. The framework supports various operations including data loading, querying, updating, and deletion, with significant performance advantages over traditional formats like Parquet.

Spark etl

Spark etlImran Rashid The document provides tips for writing effective ETL pipelines using Apache Spark, emphasizing the importance of modularity, error handling, and understanding performance. Key concepts such as RDDs, error catching with accumulators, and best practices for data partitioning and transformation are discussed. Additionally, the document highlights the necessity of minimizing data volume and monitoring stage boundaries for optimizing Spark applications.

Easy, scalable, fault tolerant stream processing with structured streaming - ...

Easy, scalable, fault tolerant stream processing with structured streaming - ...Databricks The document discusses structured streaming with Apache Spark, focusing on its ease of use, scalability, and fault tolerance for stream processing applications. It highlights key features such as data integration from various sources, checkpointing for fault tolerance, and advanced transformations to handle complex workloads and data types. The presentation also covers practical examples of implementing streaming queries, event-time aggregations, and stateful processing with benefits for real-time analytics and decision-making.

Spark SQL Tutorial | Spark Tutorial for Beginners | Apache Spark Training | E...

Spark SQL Tutorial | Spark Tutorial for Beginners | Apache Spark Training | E...Edureka! The document provides an extensive overview of Spark SQL, including its advantages over Apache Hive, features, architecture, and libraries. It discusses how Spark SQL improves performance through real-time querying and integration with various data formats, while offering practical use cases like Twitter sentiment analysis and stock market data analysis. Additionally, it outlines the steps for initializing a Spark shell, creating datasets, and executing SQL operations within Spark.

Road to Analytics

Road to AnalyticsDatio Big Data This document provides an overview comparison of SAS and Spark for analytics. SAS is a commercial software while Spark is an open source framework. SAS uses datasets that reside in memory while Spark uses resilient distributed datasets (RDDs) that can scale across clusters. Both support SQL queries but Spark SQL allows querying distributed data lazily. Spark also provides machine learning APIs through MLlib that can perform tasks like classification, clustering, and recommendation at scale.

From HelloWorld to Configurable and Reusable Apache Spark Applications in Sca...

From HelloWorld to Configurable and Reusable Apache Spark Applications in Sca...Databricks The document presents a session by Oliver Țupran on creating configurable and reusable Apache Spark applications using Scala, focusing on common problems and solutions related to application logic and configuration. Key solutions include separating business logic from configuration, utilizing a robust configurator framework, and applying testing methodologies. The session aims to enhance productivity while minimizing complexity in Spark application development.

Deep Dive into Project Tungsten: Bringing Spark Closer to Bare Metal-(Josh Ro...

Deep Dive into Project Tungsten: Bringing Spark Closer to Bare Metal-(Josh Ro...Spark Summit This document summarizes Project Tungsten, an effort by Databricks to substantially improve the memory and CPU efficiency of Spark applications. It discusses how Tungsten optimizes memory and CPU usage through techniques like explicit memory management, cache-aware algorithms, and code generation. It provides examples of how these optimizations improve performance for aggregation queries and record sorting. The roadmap outlines expanding Tungsten's optimizations in Spark 1.4 through 1.6 to support more workloads and achieve end-to-end processing using binary data representations.

Parallelizing Existing R Packages with SparkR

Parallelizing Existing R Packages with SparkRDatabricks The document discusses the use of SparkR, an R package integrated with Apache Spark, which facilitates interoperability between R and Spark dataframes. It details the architecture of SparkR, its API functionalities for data manipulation and machine learning, as well as techniques for parallelizing computations with functions like spark.lapply, dapply, and gapply. The document also addresses considerations for debugging and managing package installations on SparkR workers.

Parallelize R Code Using Apache Spark

Parallelize R Code Using Apache Spark Databricks The document outlines the use of the Sparkr R package, which interfaces R with Apache Spark for distributed data processing. It discusses various APIs and functions available in Sparkr, such as spark.lapply, dapply, and gapply, along with best practices for parallelizing R code effectively. Additionally, it highlights the importance of managing data sizes, package imports on worker nodes, and debugging techniques in a Spark environment.

More Related Content

What's hot (20)

Stanford CS347 Guest Lecture: Apache Spark

Stanford CS347 Guest Lecture: Apache SparkReynold Xin The guest lecture by Reynold Xin at Stanford University discusses Spark and its advantages over MapReduce, emphasizing the simplicity of programming and performance benefits for large datasets. It introduces the concept of Resilient Distributed Datasets (RDDs) as a core abstraction in Spark, highlighting their fault-tolerance and parallel processing capabilities. The lecture also covers Spark's application in streaming, machine learning, and SQL, showcasing its versatility in data processing tasks.

Big data analytics_beyond_hadoop_public_18_july_2013

Big data analytics_beyond_hadoop_public_18_july_2013Vijay Srinivas Agneeswaran, Ph.D This document discusses alternatives to Hadoop for big data analytics. It introduces the Berkeley data analytics stack, including Spark, and compares the performance of iterative machine learning algorithms between Spark and Hadoop. It also discusses using Twitter's Storm for real-time analytics and compares the performance of Mahout and R/ML over Storm. The document provides examples of using Spark for logistic regression and k-means clustering and discusses how companies like Ooyala and Conviva have benefited from using Spark.

Easy, scalable, fault tolerant stream processing with structured streaming - ...

Easy, scalable, fault tolerant stream processing with structured streaming - ...Databricks The document discusses the complexities of building scalable and fault-tolerant stream processing applications using Structured Streaming with Apache Spark. It highlights key features such as handling diverse data formats and storage systems, ensuring exactly-once processing semantics, and leveraging Spark SQL for incremental execution. The presentation covers practical examples and concepts like triggers, output modes, and watermarking to efficiently manage state and late data in streaming queries.

Spark meetup v2.0.5

Spark meetup v2.0.5Yan Zhou The document discusses Huawei Technologies' implementation of Spark SQL on HBase, highlighting its motivations, performance capabilities, and future roadmap. It details the unique data challenges in telecommunications and how the technology can handle complex query types efficiently. Additionally, the paper reviews performance metrics, optimizations, and contributions to the Spark ecosystem from Huawei's big data teams.

Sparkcamp @ Strata CA: Intro to Apache Spark with Hands-on Tutorials

Sparkcamp @ Strata CA: Intro to Apache Spark with Hands-on TutorialsDatabricks The document provides an outline for the Spark Camp @ Strata CA tutorial. The morning session will cover introductions and getting started with Spark, an introduction to MLlib, and exercises on working with Spark on a cluster and notebooks. The afternoon session will cover Spark SQL, visualizations, Spark streaming, building Scala applications, and GraphX examples. The tutorial will be led by several instructors from Databricks and include hands-on coding exercises.

Apache spark basics

Apache spark basicssparrowAnalytics.com Apache Spark is an open source Big Data analytical framework. It introduces the concept of RDDs (Resilient Distributed Datasets) which allow parallel operations on large datasets. The document discusses starting Spark, Spark applications, transformations and actions on RDDs, RDD creation in Scala and Python, and examples including word count. It also covers flatMap vs map, custom methods, and assignments involving transformations on lists.

Spark SQL - 10 Things You Need to Know

Spark SQL - 10 Things You Need to KnowKristian Alexander The document discusses loading data into Spark SQL and the differences between DataFrame functions and SQL. It provides examples of loading data from files, cloud storage, and directly into DataFrames from JSON and Parquet files. It also demonstrates using SQL on DataFrames after registering them as temporary views. The document outlines how to load data into RDDs and convert them to DataFrames to enable SQL querying, as well as using SQL-like functions directly in the DataFrame API.

DataEngConf SF16 - Spark SQL Workshop

DataEngConf SF16 - Spark SQL WorkshopHakka Labs The document outlines an agenda for an Apache Spark workshop, including topics such as Spark SQL, RDD operations, and user-defined functions (UDFs). It discusses the framework's features, advantages of using schemas in Spark SQL, and practical coding sessions. Additionally, the document addresses caching data and different data formats for reading and writing within Spark, emphasizing efficiency and productivity for developers and data scientists.

SparkR: Enabling Interactive Data Science at Scale

SparkR: Enabling Interactive Data Science at Scalejeykottalam The document discusses SparkR, which enables interactive data science using R on Apache Spark clusters. SparkR allows users to create and manipulate resilient distributed datasets (RDDs) from R and run R analytics functions in parallel on large datasets. It provides examples of using SparkR for tasks like word counting on text data and digit classification using the MNIST dataset. The API is designed to be similar to PySpark for ease of use.

SparkR - Play Spark Using R (20160909 HadoopCon)

SparkR - Play Spark Using R (20160909 HadoopCon)wqchen The document provides a comprehensive overview of 'sparkr', an R interface for Apache Spark, highlighting its origins, features, and usage. It covers key concepts such as data frames, RDDs, machine learning libraries, and practical applications for data analysis and visualization using R. Additionally, it discusses the future development plans for sparkr and includes various references and resources for further learning.

SparkSQL: A Compiler from Queries to RDDs

SparkSQL: A Compiler from Queries to RDDsDatabricks The document presents an overview of SparkSQL, detailing its transformation from queries to Resilient Distributed Datasets (RDDs) and discussing the effective use of high-level APIs for optimized query execution. It covers crucial concepts such as Abstract Syntax Trees (AST), logical and physical query plans, as well as optimization techniques like predicate pushdown and whole-stage code generation to enhance performance. Future developments for Spark include the introduction of cost-based optimizers and improvements for better performance on many-core machines.

Everyday I'm Shuffling - Tips for Writing Better Spark Programs, Strata San J...

Everyday I'm Shuffling - Tips for Writing Better Spark Programs, Strata San J...Databricks The document provides insights on optimizing Apache Spark jobs by understanding key functional elements such as shuffling, appropriate use of operations like reduceByKey versus groupByKey, and how to handle joins effectively. It emphasizes the importance of understanding Spark's internal workings to write efficient, well-tested, and reusable jobs while highlighting common pitfalls and best practices. Additionally, it discusses strategies for reusing code between batch and streaming processes, testing scenarios in Spark, and diagnosing performance issues.

Apache Spark - Dataframes & Spark SQL - Part 2 | Big Data Hadoop Spark Tutori...

Apache Spark - Dataframes & Spark SQL - Part 2 | Big Data Hadoop Spark Tutori...CloudxLab The document provides an overview of using Spark SQL with DataFrames, focusing on loading and displaying data from XML, Avro, and Parquet formats. It describes the necessary setup, including the use of Spark packages and JDBC for accessing databases, while also highlighting Spark's compatibility with Hive tables. Additionally, it touches on the use of a distributed SQL engine through a JDBC/ODBC server setup.

Apache Carbondata: An Indexed Columnar File Format for Interactive Query with...

Apache Carbondata: An Indexed Columnar File Format for Interactive Query with...Spark Summit Apache CarbonData is an indexed columnar file format designed for interactive querying with Spark SQL, offering efficient data management for both OLAP and detailed queries. It features a unique multi-dimensional indexing structure and is geared towards optimizing storage and query performance, particularly in large datasets. The framework supports various operations including data loading, querying, updating, and deletion, with significant performance advantages over traditional formats like Parquet.

Spark etl

Spark etlImran Rashid The document provides tips for writing effective ETL pipelines using Apache Spark, emphasizing the importance of modularity, error handling, and understanding performance. Key concepts such as RDDs, error catching with accumulators, and best practices for data partitioning and transformation are discussed. Additionally, the document highlights the necessity of minimizing data volume and monitoring stage boundaries for optimizing Spark applications.

Easy, scalable, fault tolerant stream processing with structured streaming - ...

Easy, scalable, fault tolerant stream processing with structured streaming - ...Databricks The document discusses structured streaming with Apache Spark, focusing on its ease of use, scalability, and fault tolerance for stream processing applications. It highlights key features such as data integration from various sources, checkpointing for fault tolerance, and advanced transformations to handle complex workloads and data types. The presentation also covers practical examples of implementing streaming queries, event-time aggregations, and stateful processing with benefits for real-time analytics and decision-making.

Spark SQL Tutorial | Spark Tutorial for Beginners | Apache Spark Training | E...

Spark SQL Tutorial | Spark Tutorial for Beginners | Apache Spark Training | E...Edureka! The document provides an extensive overview of Spark SQL, including its advantages over Apache Hive, features, architecture, and libraries. It discusses how Spark SQL improves performance through real-time querying and integration with various data formats, while offering practical use cases like Twitter sentiment analysis and stock market data analysis. Additionally, it outlines the steps for initializing a Spark shell, creating datasets, and executing SQL operations within Spark.

Road to Analytics

Road to AnalyticsDatio Big Data This document provides an overview comparison of SAS and Spark for analytics. SAS is a commercial software while Spark is an open source framework. SAS uses datasets that reside in memory while Spark uses resilient distributed datasets (RDDs) that can scale across clusters. Both support SQL queries but Spark SQL allows querying distributed data lazily. Spark also provides machine learning APIs through MLlib that can perform tasks like classification, clustering, and recommendation at scale.

From HelloWorld to Configurable and Reusable Apache Spark Applications in Sca...

From HelloWorld to Configurable and Reusable Apache Spark Applications in Sca...Databricks The document presents a session by Oliver Țupran on creating configurable and reusable Apache Spark applications using Scala, focusing on common problems and solutions related to application logic and configuration. Key solutions include separating business logic from configuration, utilizing a robust configurator framework, and applying testing methodologies. The session aims to enhance productivity while minimizing complexity in Spark application development.

Deep Dive into Project Tungsten: Bringing Spark Closer to Bare Metal-(Josh Ro...

Deep Dive into Project Tungsten: Bringing Spark Closer to Bare Metal-(Josh Ro...Spark Summit This document summarizes Project Tungsten, an effort by Databricks to substantially improve the memory and CPU efficiency of Spark applications. It discusses how Tungsten optimizes memory and CPU usage through techniques like explicit memory management, cache-aware algorithms, and code generation. It provides examples of how these optimizations improve performance for aggregation queries and record sorting. The roadmap outlines expanding Tungsten's optimizations in Spark 1.4 through 1.6 to support more workloads and achieve end-to-end processing using binary data representations.

Similar to Parallelizing Existing R Packages (20)

Parallelizing Existing R Packages with SparkR

Parallelizing Existing R Packages with SparkRDatabricks The document discusses the use of SparkR, an R package integrated with Apache Spark, which facilitates interoperability between R and Spark dataframes. It details the architecture of SparkR, its API functionalities for data manipulation and machine learning, as well as techniques for parallelizing computations with functions like spark.lapply, dapply, and gapply. The document also addresses considerations for debugging and managing package installations on SparkR workers.

Parallelize R Code Using Apache Spark

Parallelize R Code Using Apache Spark Databricks The document outlines the use of the Sparkr R package, which interfaces R with Apache Spark for distributed data processing. It discusses various APIs and functions available in Sparkr, such as spark.lapply, dapply, and gapply, along with best practices for parallelizing R code effectively. Additionally, it highlights the importance of managing data sizes, package imports on worker nodes, and debugging techniques in a Spark environment.

Enabling exploratory data science with Spark and R

Enabling exploratory data science with Spark and RDatabricks The document discusses the integration of Apache Spark with R through the sparkr package, which facilitates the use of R's data manipulation capabilities alongside Spark's distributed computing. It highlights Spark's features such as real-time streaming, machine learning, and scalability, while addressing R's limitations in handling large datasets. The document also provides an overview of the sparkr architecture and outlines a roadmap for future features and use cases in exploratory data analysis.

A Data Frame Abstraction Layer for SparkR-(Chris Freeman, Alteryx)

A Data Frame Abstraction Layer for SparkR-(Chris Freeman, Alteryx)Spark Summit SparkR provides an R interface to Apache Spark that allows R developers to leverage Spark's distributed processing capabilities through DataFrames. DataFrames impose a schema on data from RDDs, making the data easier to access and manipulate compared to raw RDDs. DataFrames in SparkR allow R-like syntax and interactions with data while leveraging Spark's optimizations by passing operations to the JVM. The speaker demonstrated SparkR DataFrame features and discussed the project's roadmap, including expanding SparkR to support Spark machine learning capabilities.

Introduction to SparkR

Introduction to SparkRAnkara Big Data Meetup SparkR is an R package that provides an interface to Apache Spark to enable large scale data analysis from R. It introduces the concept of distributed data frames that allow users to manipulate large datasets using familiar R syntax. SparkR improves performance over large datasets by using lazy evaluation and Spark's relational query optimizer. It also supports over 100 functions on data frames for tasks like statistical analysis, string manipulation, and date operations.

Introduction to SparkR

Introduction to SparkROlgun Aydın SparkR is an R package that provides an interface to Apache Spark to enable large scale data analysis from R. It introduces the concept of distributed data frames that allow users to manipulate large datasets using familiar R syntax. SparkR improves performance over large datasets by using lazy evaluation and Spark's relational query optimizer. It also supports over 100 functions on data frames for tasks like statistical analysis, string manipulation, and date operations.

Enabling Exploratory Analysis of Large Data with Apache Spark and R

Enabling Exploratory Analysis of Large Data with Apache Spark and RDatabricks The document discusses the integration of R with Apache Spark, highlighting the benefits and capabilities of the 'SparkR' package, which allows for distributed computing in R. It introduces key speakers from Databricks, covers the architecture of SparkR, and demonstrates its applications through various examples. Additionally, it outlines how data scientists can leverage SparkR for efficient data manipulation and analysis without local storage constraints.

Scalable Data Science with SparkR: Spark Summit East talk by Felix Cheung

Scalable Data Science with SparkR: Spark Summit East talk by Felix CheungSpark Summit The document provides an overview of SparkR, an R programming interface for Apache Spark, detailing its features, architectural components, and machine learning capabilities. It discusses the integration of R with Spark's distributed computing framework, focusing on user-defined functions (UDFs), the machine learning pipeline, and various model types available in SparkR. It also outlines challenges faced by SparkR users and upcoming improvements planned for future versions.

SparkR: The Past, the Present and the Future-(Shivaram Venkataraman and Rui S...

SparkR: The Past, the Present and the Future-(Shivaram Venkataraman and Rui S...Spark Summit SparkR allows users to perform large-scale data analysis from R. It provides a unified approach for working with big data using DataFrames. The current release of SparkR includes DataFrame support released in Spark 1.4 for improved performance. Future developments will expand SparkR's capabilities for large-scale machine learning, simple parallel programming APIs, and tighter integration with existing R packages. The open source project has an growing community of over 20 contributors working to advance SparkR.

Scalable Data Science with SparkR

Scalable Data Science with SparkRDataWorks Summit The document presents an overview of SparkR, an R package that enables the use of Apache Spark functionalities through R's familiar syntax and capabilities for data science tasks. It highlights the architectural framework, features, machine learning integration, user-defined functions, and challenges associated with SparkR. The document also discusses upcoming features and improvements in future versions of SparkR.

Strata NYC 2015 - Supercharging R with Apache Spark

Strata NYC 2015 - Supercharging R with Apache SparkDatabricks The document discusses the integration of R with Apache Spark, highlighting Spark's capabilities in real-time streaming, machine learning, SQL, and graph processing. It introduces the SparkR package, which allows R users to manipulate and analyze large datasets using Spark's distributed computing power. The document emphasizes the advantages of combining R's rich ecosystem and flexibility with Spark's scalability and performance.

Big data analysis using spark r published

Big data analysis using spark r publishedDipendra Kusi SparkR enables large scale data analysis from R by leveraging Apache Spark's distributed processing capabilities. It allows users to load large datasets from sources like HDFS, run operations like filtering and aggregation in parallel, and build machine learning models like k-means clustering. SparkR also supports data visualization and exploration through packages like ggplot2. By running R programs on Spark, users can analyze datasets that are too large for a single machine.

Machine Learning with SparkR

Machine Learning with SparkROlgun Aydın SparkR provides an R frontend for the Apache Spark distributed computing framework. It allows users to perform large-scale data analysis from R by leveraging Spark's distributed execution engine and in-memory cluster computing capabilities. Key features of SparkR include distributed DataFrames that operate similarly to local R data frames but can scale to large datasets, over 100 built-in functions for data wrangling and analysis, and the ability to load data from sources like HDFS, HBase and Parquet files. Common machine learning algorithms like correlation analysis, k-means clustering and decision trees can be implemented using SparkR.

Sparkr sigmod

Sparkr sigmodwaqasm86 This summary provides an overview of the SparkR package, which provides an R frontend for the Apache Spark distributed computing framework:

- SparkR enables large-scale data analysis from the R shell by using Spark's distributed computation engine to parallelize and optimize R programs. It allows R users to leverage Spark's libraries, data sources, and optimizations while programming in R.

- The central component of SparkR is the distributed DataFrame, which provides a familiar data frame interface to R users but can handle large datasets using Spark. DataFrame operations are optimized using Spark's query optimizer.

- SparkR's architecture includes an R to JVM binding that allows R programs to submit jobs to Spark, and support for running R execut

Data processing with spark in r & python

Data processing with spark in r & pythonMaloy Manna, PMP® The document discusses the use of Apache Spark as a general-purpose engine for big data processing in R and Python, highlighting its advantages over Hadoop and MapReduce. It covers topics such as data processing operations, RDD transformations and actions, and the use of Spark SQL and DataFrames for structured data processing. The document also provides installation instructions, tips for efficient data handling, and various resources for further learning.

Apache spark-melbourne-april-2015-meetup

Apache spark-melbourne-april-2015-meetupNed Shawa This document provides an agenda and summaries for a meetup on introducing DataFrames and R on Apache Spark. The agenda includes overviews of Apache Spark 1.3, DataFrames, R on Spark, and large scale machine learning on Spark. There will also be discussions on news items, contributions so far, what's new in Spark 1.3, more data source APIs, what DataFrames are, writing DataFrames, and DataFrames with RDDs and Parquet. Presentations will cover Spark components, an introduction to SparkR, and Spark machine learning experiences.

SparkR best practices for R data scientist

SparkR best practices for R data scientistDataWorks Summit The document discusses the integration of R with Apache Spark through SparkR, emphasizing its applications in data science workflows for large datasets and machine learning. It highlights the advantages of using R, such as its open-source nature and extensive package ecosystem, while addressing limitations when handling big data. Future directions for improvement in SparkR are also outlined, including enhanced performance and scalability of machine learning algorithms.

SparkR Best Practices for R Data Scientists

SparkR Best Practices for R Data ScientistsDataWorks Summit The document outlines best practices for using SparkR to enhance data science workflows, highlighting the interoperability between R and Apache Spark. It discusses the advantages and limitations of R, and details specific operations like data wrangling, sampling algorithms, and user-defined functions that enable efficient data processing in large datasets. Future improvements for SparkR include enhancing performance for data collection and UDFs, along with expanded machine learning capabilities.

Recent Developments In SparkR For Advanced Analytics

Recent Developments In SparkR For Advanced AnalyticsDatabricks The document discusses the SparkR package, which bridges the gap between R and big data analytics, providing descriptive and predictive analytics on large datasets. It outlines the architecture, features, and implementation of SparkR, including data frame operations, statistical computations, and integration with Spark's machine learning library. Future directions for SparkR include improving API consistency, enhancing R formula support, and integrating more machine learning algorithms.

Data Summer Conf 2018, “Hands-on with Apache Spark for Beginners (ENG)” — Akm...

Data Summer Conf 2018, “Hands-on with Apache Spark for Beginners (ENG)” — Akm...Provectus The document provides an overview of Apache Spark, focusing on its data processing capabilities and the use of DataFrames, transformations, and actions. It covers the execution of code in notebooks, Spark context communication, and various operations like select, filter, and groupby, as well as concepts such as caching and user-defined functions. Additionally, it discusses challenges related to lazy evaluation and strategies for improving code readability.

Ad

Recently uploaded (20)

Step by step guide to install Flutter and Dart

Step by step guide to install Flutter and DartS Pranav (Deepu) Flutter is basically Google’s portable user

interface (UI) toolkit, used to build and

develop eye-catching, natively-built

applications for mobile, desktop, and web,

from a single codebase. Flutter is free, open-

sourced, and compatible with existing code. It

is utilized by companies and developers

around the world, due to its user-friendly

interface and fairly simple, yet to-the-point

commands.

How the US Navy Approaches DevSecOps with Raise 2.0

How the US Navy Approaches DevSecOps with Raise 2.0Anchore Join us as Anchore's solutions architect reveals how the U.S. Navy successfully approaches the shift left philosophy to DevSecOps with the RAISE 2.0 Implementation Guide to support its Cyber Ready initiative. This session will showcase practical strategies for defense application teams to pivot from a time-intensive compliance checklist and mindset to continuous cyber-readiness with real-time visibility.

Learn how to break down organizational silos through RAISE 2.0 principles and build efficient, secure pipeline automation that produces the critical security artifacts needed for Authorization to Operate (ATO) approval across military environments.

Milwaukee Marketo User Group June 2025 - Optimize and Enhance Efficiency - Sm...

Milwaukee Marketo User Group June 2025 - Optimize and Enhance Efficiency - Sm...BradBedford3 Inspired by the Adobe Summit hands-on lab, Optimize Your Marketo Instance Performance, review the recording from June 5th to learn best practices that can optimize your smart campaign and smart list processing time, inefficient practices to try to avoid, and tips and tricks for keeping your instance running smooth!

You will learn:

How smart campaign queueing works, how flow steps are prioritized, and configurations that slow down smart campaign processing.

Best practices for smart list and smart campaign configurations that yield greater reliability and processing efficiencies.

Generally recommended timelines for reviewing instance performance: walk away from this session with a guideline of what to review in Marketo and how often to review it.

This session will be helpful for any Marketo administrator looking for opportunities to improve and streamline their instance performance. Be sure to watch to learn best practices and connect with your local Marketo peers!

Advanced Token Development - Decentralized Innovation

Advanced Token Development - Decentralized Innovationarohisinghas720 The world of blockchain is evolving at a fast pace, and at the heart of this transformation lies advanced token development. No longer limited to simple digital assets, today’s tokens are programmable, dynamic, and play a crucial role in driving decentralized applications across finance, governance, gaming, and beyond.

MOVIE RECOMMENDATION SYSTEM, UDUMULA GOPI REDDY, Y24MC13085.pptx

MOVIE RECOMMENDATION SYSTEM, UDUMULA GOPI REDDY, Y24MC13085.pptxMaharshi Mallela Movie recommendation system is a software application or algorithm designed to suggest movies to users based on their preferences, viewing history, or other relevant factors. The primary goal of such a system is to enhance user experience by providing personalized and relevant movie suggestions.

Software Engineering Process, Notation & Tools Introduction - Part 3

Software Engineering Process, Notation & Tools Introduction - Part 3Gaurav Sharma Software Engineering Process, Notation & Tools Introduction

Zoneranker’s Digital marketing solutions

Zoneranker’s Digital marketing solutionsreenashriee Zoneranker offers expert digital marketing services tailored for businesses in Theni. From SEO and PPC to social media and content marketing, we help you grow online. Partner with us to boost visibility, leads, and sales.

What is data visualization and how data visualization tool can help.pdf

What is data visualization and how data visualization tool can help.pdfVarsha Nayak An open source data visualization tool enhances this process by providing flexible, cost-effective solutions that allow users to customize and scale their visualizations according to their needs. These tools enable organizations to make data-driven decisions with complete freedom from proprietary software limitations. Whether you're a data scientist, marketer, or business leader, understanding how to utilize an open source data visualization tool can significantly improve your ability to communicate insights effectively.

Transmission Media. (Computer Networks)

Transmission Media. (Computer Networks)S Pranav (Deepu) INTRODUCTION:TRANSMISSION MEDIA

• A transmission media in data communication is a physical path between the sender and

the receiver and it is the channel through which data can be sent from one location to

another. Data can be represented through signals by computers and other sorts of

telecommunication devices. These are transmitted from one device to another in the

form of electromagnetic signals. These Electromagnetic signals can move from one

sender to another receiver through a vacuum, air, or other transmission media.

Electromagnetic energy mainly includes radio waves, visible light, UV light, and gamma

ra

Integrating Survey123 and R&H Data Using FME

Integrating Survey123 and R&H Data Using FMESafe Software West Virginia Department of Transportation (WVDOT) actively engages in several field data collection initiatives using Collector and Survey 123. A critical component for effective asset management and enhanced analytical capabilities is the integration of Geographic Information System (GIS) data with Linear Referencing System (LRS) data. Currently, RouteID and Measures are not captured in Survey 123. However, we can bridge this gap through FME Flow automation. When a survey is submitted through Survey 123 for ArcGIS Portal (10.8.1), it triggers FME Flow automation. This process uses a customized workbench that interacts with a modified version of Esri's Geometry to Measure API. The result is a JSON response that includes RouteID and Measures, which are then applied to the feature service record.

Smart Financial Solutions: Money Lender Software, Daily Pigmy & Personal Loan...

Smart Financial Solutions: Money Lender Software, Daily Pigmy & Personal Loan...Intelli grow Explore innovative tools tailored for modern finance with our Money Lender Software Development, efficient Daily Pigmy Collection Software, and streamlined Personal Loan Software. This presentation showcases how these solutions simplify loan management, boost collection efficiency, and enhance customer experience for NBFCs, microfinance firms, and individual lenders.

GDG Douglas - Google AI Agents: Your Next Intern?

GDG Douglas - Google AI Agents: Your Next Intern?felipeceotto Presentation done at the GDG Douglas event for June 2025.

A first look at Google's new Agent Development Kit.

Agent Development Kit is a new open-source framework from Google designed to simplify the full stack end-to-end development of agents and multi-agent systems.

FME as an Orchestration Tool - Peak of Data & AI 2025

FME as an Orchestration Tool - Peak of Data & AI 2025Safe Software Processing huge amounts of data through FME can have performance consequences, but as an orchestration tool, FME is brilliant! We'll take a look at the principles of data gravity, best practices, pros, cons, tips and tricks. And of course all spiced up with relevant examples!

Wondershare PDFelement Pro 11.4.20.3548 Crack Free Download

Wondershare PDFelement Pro 11.4.20.3548 Crack Free DownloadPuppy jhon ➡ 🌍📱👉COPY & PASTE LINK👉👉👉 ➤ ➤➤ https://drfiles.net/

Wondershare PDFelement Professional is professional software that can edit PDF files. This digital tool can manipulate elements in PDF documents.

AI-Powered Compliance Solutions for Global Regulations | Certivo

AI-Powered Compliance Solutions for Global Regulations | Certivocertivoai Certivo offers AI-powered compliance solutions designed to help businesses in the USA, EU, and UK simplify complex regulatory demands. From environmental and product compliance to safety, quality, and sustainability, our platform automates supplier documentation, manages certifications, and integrates with ERP/PLM systems. Ensure seamless RoHS, REACH, PFAS, and Prop 65 compliance through predictive insights and multilingual support. Turn compliance into a competitive edge with Certivo’s intelligent, scalable, and audit-ready platform.

Who will create the languages of the future?

Who will create the languages of the future?Jordi Cabot Will future languages be created by language engineers?

Can you "vibe" a DSL?

In this talk, we will explore the changing landscape of language engineering and discuss how Artificial Intelligence and low-code/no-code techniques can play a role in this future by helping in the definition, use, execution, and testing of new languages. Even empowering non-tech users to create their own language infrastructure. Maybe without them even realizing.

Software Engineering Process, Notation & Tools Introduction - Part 4

Software Engineering Process, Notation & Tools Introduction - Part 4Gaurav Sharma Software Engineering Process, Notation & Tools Introduction

IBM Rational Unified Process For Software Engineering - Introduction

IBM Rational Unified Process For Software Engineering - IntroductionGaurav Sharma IBM Rational Unified Process For Software Engineering

Open Source Software Development Methods

Open Source Software Development MethodsVICTOR MAESTRE RAMIREZ Open Source Software Development Methods

Ad

Parallelizing Existing R Packages

- 1. Parallelizing Existing R Packages with SparkR Hossein Falaki @mhfalaki

- 2. About me • Former Data Scientist at Apple Siri • Software Engineer at Databricks • Started using Apache Spark since version 0.6 • Developed first version of Apache Spark CSV data source • Worked on SparkR &Databricks R Notebook feature • Currently focusing on R experience at Databricks 2

- 3. What is SparkR? An R package distributed with Apache Spark (soon CRAN): - Provides R frontend to Spark - Exposes Spark DataFrames (inspired by R and Pandas) - Convenient interoperability between R and Spark DataFrames 3 distributed/robust processing, data sources, off-memory data structures + Dynamic environment, interactivity, packages, visualization

- 5. SparkR architecture (since 2.0) 5 Spark Driver R JVM R Backend JVM Worker JVM Worker Data Sources R R

- 6. Overview of SparkR API IO read.df / write.df / createDataFrame / collect Caching cache / persist / unpersist / cacheTable / uncacheTable SQL sql / table / saveAsTable / registerTempTable / tables 6 ML Lib glm / kmeans / Naïve Bayes Survival regression DataFrame API select / subset / groupBy / head / avg / column / dim UDF functionality (since 2.0) spark.lapply / dapply / gapply / dapplyCollect http://spark.apache.org/docs/latest/api/R/

- 7. Overview of SparkR API :: Session Spark session is your interface to Spark functionality in R o SparkR DataFrames are implemented on top of SparkSQL tables o All DataFrame operations go through a SQL optimizer (catalyst) o Since 2.0 sqlContext is wrapped in a new object called SparkR Session. 7 > spark <- sparkR.session() All SparkR functions work if you pass them a session or will assume an existing session.

- 9. Moving data between R and JVM 9 R JVM R Backend SparkR::collect() SparkR::createDataFrame()

- 10. Overview of SparkR API :: DataFrame API SparkR DataFrame behaves similar to R data.frames > sparkDF$newCol <- sparkDF$col + 1 > subsetDF <- sparkDF[, c(“date”, “type”)] > recentData <- subset(sparkDF$date == “2015-10-24”) > firstRow <- sparkDF[[1, ]] > names(subsetDF) <- c(“Date”, “Type”) > dim(recentData) > head(count(group_by(subsetDF, “Date”))) 10

- 11. Overview of SparkR API :: SQL You can register a DataFrame as a table and query it in SQL > logs <- read.df(“data/logs”, source = “json”) > registerTempTable(df, “logsTable”) > errorsByCode <- sql(“select count(*) as num, type from logsTable where type == “error” group by code order by date desc”) > reviewsDF <- tableToDF(“reviewsTable”) > registerTempTable(filter(reviewsDF, reviewsDF$rating == 5), “fiveStars”) 11

- 12. Moving between languages 12 R Scala Spark df <- read.df(...) wiki <- filter(df, ...) registerTempTable(wiki, “wiki”) val wiki = table(“wiki”) val parsed = wiki.map { Row(_, _, text: String, _, _) =>text.split(‘ ’) } val model = Kmeans.train(parsed)

- 13. Overview of SparkR API IO read.df / write.df / createDataFrame / collect Caching cache / persist / unpersist / cacheTable / uncacheTable SQL sql / table / saveAsTable / registerTempTable / tables 13 ML Lib glm / kmeans / Naïve Bayes Survival regression DataFrame API select / subset / groupBy / head / avg / column / dim UDF functionality (since 2.0) spark.lapply / dapply / gapply / dapplyCollect http://spark.apache.org/docs/latest/api/R/

- 14. SparkR UDF API 14 spark.lapply Runs a function over a list of elements spark.lapply() dapply Applies a function to each partition of a SparkDataFrame dapply() dapplyCollect() gapply Applies a function to each group within a SparkDataFrame gapply() gapplyCollect()

- 15. spark.lapply 15 Simplest SparkR UDF pattern For each element of a list: 1. Sends the function to an R worker 2. Executes the function 3. Returns the result of all workers as a list to R driver spark.lapply(1:100, function(x) { runBootstrap(x) }

- 16. spark.lapply control flow 16 R Worker JVM R Worker JVM R Worker JVM R Driver JVM 1. Serialize R closure 3. Transfer serialized closure over the network 5. De-serialize closure 4. Transfer over local socket 6. Serialize result 2. Transfer over local socket 7. Transfer over local socket 9. Transfer over local socket 10. Deserialize result 8. Transfer serialized closure over the network

- 17. dapply 17 For each partition of a Spark DataFrame 1. collects each partition as an R data.frame 2. sends the R function to the R worker 3. executes the function dapply(sparkDF, func, schema) combines results as DataFrame with schema dapplyCollect(sparkDF, func) combines results as R data.frame

- 18. dapply control & data flow 18 R Worker JVM R Worker JVM R Worker JVM R Driver JVM local socket cluster network local socket input data ser/de transfer result data ser/de transfer

- 19. dapplyCollect control & data flow 19 R Worker JVM R Worker JVM R Worker JVM R Driver JVM local socket cluster network local socket input data ser/de transfer result transfer result deser

- 20. gapply 20 Groups a Spark DataFrame on one or more columns 1. collects each group as an R data.frame 2. sends the R function to the R worker 3. executes the function gapply(sparkDF, cols, func, schema) combines results as DataFrame with schema gapplyCollect(sparkDF, cols, func) combines results as R data.frame

- 21. gapply control & data flow 21 R Worker JVM R Worker JVM R Worker JVM R Driver JVM local socket cluster network local socket input data ser/de transfer result data ser/de transfer data shuffle

- 22. dapply vs. gapply 22 gapply dapply signature gapply(df, cols, func, schema) gapply(gdf, func, schema) dapply(df, func, schema) user function signature function(key, data) function(data) data partition controlled by grouping not controlled

- 23. Parallelizing data • Do not use spark.lapply() to distribute large data sets • Do not pack data in the closure • Watch for skew in data – Are partitions evenly sized? • Auxiliary data – Can be joined with input DataFrame – Can be distributed to all the workers using FileSystem 23

- 24. Packages on workers • SparkR closure capture does not include packages • You need to import packages on each worker inside your function • If not installed install packages on workers out-of-band • spark.lapply() can be used to install packages 24

- 25. Debugging user code 1. Verify your code on the Driver 2. Interactively execute the code on the cluster – When R worker fails, Spark Driver throws exception with the R error text 3. Inspect details of failure reason of failed job in spark UI 4. Inspect stdout/stderror of workers 25

- 26. Demo 26 Notebooks available at: • hSp://bit.ly/2krYMwC • hSp://bit.ly/2ltLVKs

- 27. Thank you!