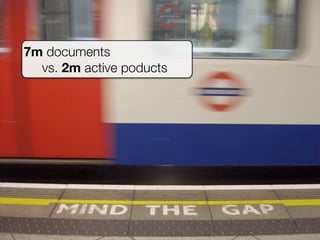

This document summarizes architectural lessons learned from refactoring a Solr-based API application at Shopping24. Key strategies discussed include sharding the index to improve performance as the data size grew from 500k to 7m documents, using caching and separate Solr cores to optimize access for different clients, and automating infrastructure to dynamically scale hardware resources. The document stresses examining business requirements to simplify queries and indexes by removing unsearchable data and duplicating frequently accessed content.