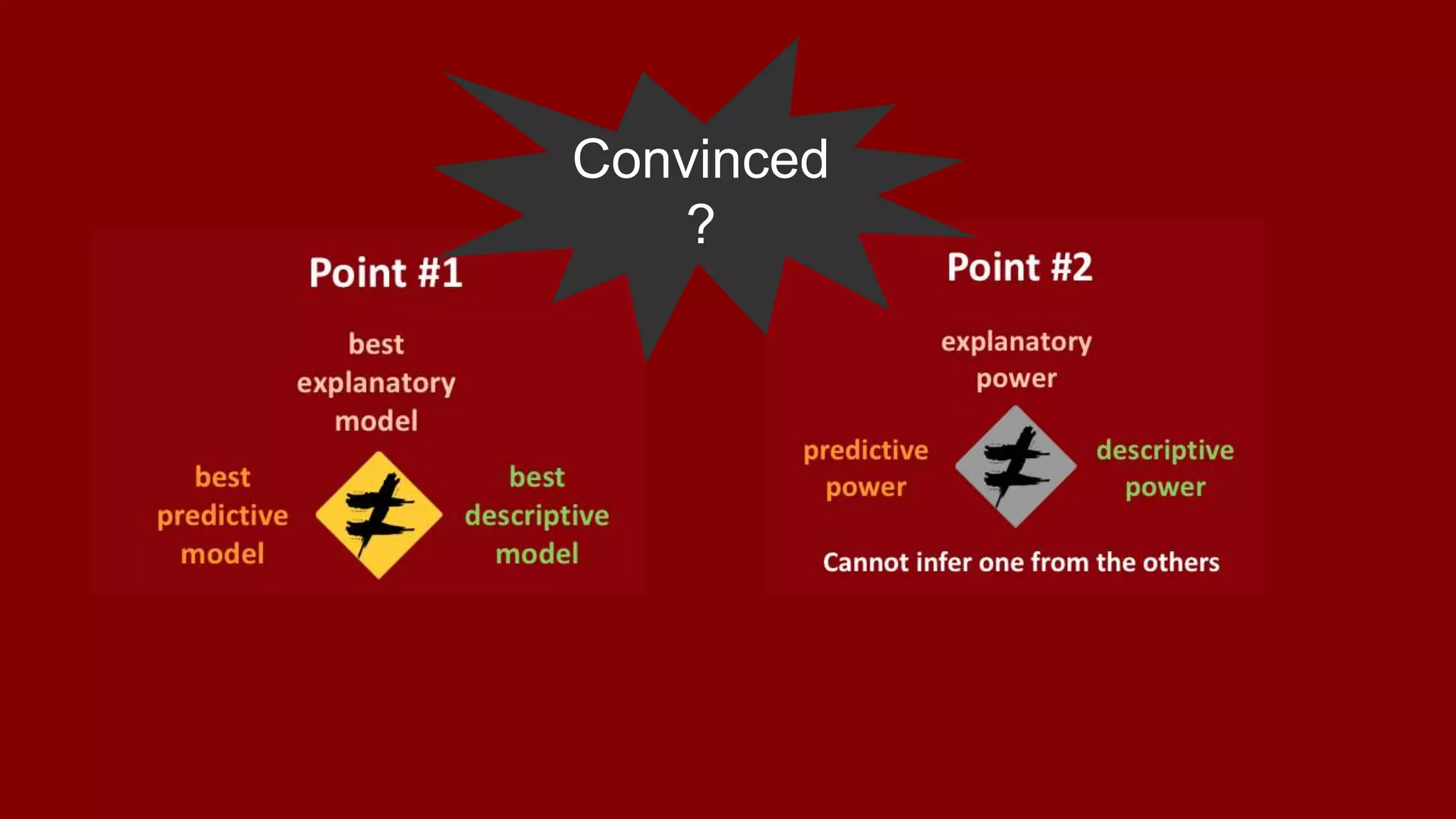

The document discusses the distinctions between explanatory, predictive, and descriptive statistical modeling, emphasizing their different purposes and methodologies. It addresses the common misconception, in both academia and industry, that these types of modeling are interchangeable, and highlights the importance of understanding the distinct role each plays in research and practice. It also discusses the role of predictive analytics in scientific research, suggesting that assessing the predictive power of explanatory models provides value beyond practical utility.

![Predict ≠ Explain. “we tried to benefit from an extensive set of attributes describing each of the movies in the dataset. Those attributes certainly carry a significant signal and can explain some of the user behavior. However… they could not help at all for improving the [predictive] accuracy.” (Bell et al., 2008)](https://image.slidesharecdn.com/explainpredicttesthailandjan2018-180110152601/75/Statistical-Modeling-in-3D-Explaining-Predicting-Describing-27-2048.jpg)
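The point in the Bell et al. quotation, that a variable can carry real signal without improving predictive accuracy, can be illustrated with a small simulation. This is a minimal sketch under assumed settings. It does not use the Netflix Prize data and does not reproduce the authors' method. The coefficients (2.0 and 0.05), the sample sizes, and the helper names `ols`, `simulate`, `design`, and `rmse` are all hypothetical. The attribute `x2` has a small true effect. With 20,000 training rows, its t-statistic should be large (it "explains"), yet adding it to the model should change holdout RMSE by well under 1% (it barely helps "predict").

```python
import math
import random


def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    Each row of X already includes a leading 1.0 for the intercept.
    Returns the coefficients and their standard errors.
    """
    n, p = len(X), len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(p)]
    # Invert X'X by Gauss-Jordan elimination with partial pivoting.
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(p)]
           for i, row in enumerate(xtx)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    inv = [row[p:] for row in aug]
    beta = [sum(inv[i][j] * xty[j] for j in range(p)) for i in range(p)]
    resid = [yi - sum(b * v for b, v in zip(beta, r)) for r, yi in zip(X, y)]
    sigma2 = sum(e * e for e in resid) / (n - p)  # unbiased residual variance
    se = [math.sqrt(sigma2 * inv[i][i]) for i in range(p)]
    return beta, se


def rmse(X, y, beta):
    """Root mean squared prediction error of coefficients beta on (X, y)."""
    sq = [(yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(X, y)]
    return math.sqrt(sum(sq) / len(y))


def simulate(n, rng):
    """Hypothetical data: x2 has a real but small effect relative to the noise."""
    rows = []
    for _ in range(n):
        x1, x2 = rng.gauss(0, 1), rng.gauss(0, 1)
        y = 2.0 * x1 + 0.05 * x2 + rng.gauss(0, 1)
        rows.append((x1, x2, y))
    return rows


def design(rows, use_x2):
    """Build design-matrix rows with an intercept, optionally including x2."""
    return [[1.0, x1, x2] if use_x2 else [1.0, x1] for x1, x2, _ in rows]


rng = random.Random(0)  # fixed seed so the run is reproducible
train, test = simulate(20000, rng), simulate(5000, rng)
y_train = [r[2] for r in train]
y_test = [r[2] for r in test]

beta_small, _ = ols(design(train, False), y_train)
beta_full, se_full = ols(design(train, True), y_train)

t_x2 = beta_full[2] / se_full[2]  # "explanatory" evidence for x2
rmse_small = rmse(design(test, False), y_test, beta_small)
rmse_full = rmse(design(test, True), y_test, beta_full)

print(f"t-statistic for x2: {t_x2:.1f}")
print(f"holdout RMSE without x2: {rmse_small:.4f}, with x2: {rmse_full:.4f}")
```

The sketch captures only one way this can happen: an effect that is real but small relative to noise. It does not model other mechanisms, such as a predictor whose signal is already captured by other predictors.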

![Predict ≠ Describe: Election Polls. “There is a subtle, but important, difference between reflecting current public sentiment and predicting the results of an election. Surveys have focused largely on the former… [as opposed to] survey based prediction models [that are] focused entirely on analysis and projection” (Kenett, Pfeffermann & Steinberg (2017), “Election Polls – A Survey, A Critique, and Proposals”, Annual Review of Statistics and Its Application)](https://image.slidesharecdn.com/explainpredicttesthailandjan2018-180110152601/75/Statistical-Modeling-in-3D-Explaining-Predicting-Describing-28-2048.jpg)